human-pose-estimation-0001

Use Case and High-Level Description

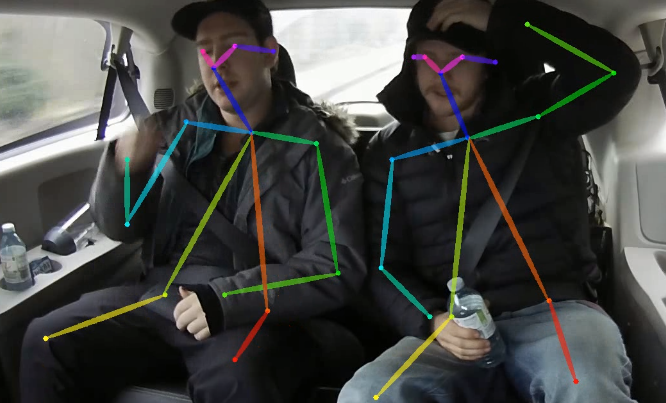

This is a multi-person 2D pose estimation network (based on the OpenPose approach) with a tuned MobileNet v1 as the feature extractor. For every person in an image, the network detects a human pose: a body skeleton consisting of keypoints and the connections between them. A pose may contain up to 18 keypoints: ears, eyes, nose, neck, shoulders, elbows, wrists, hips, knees, and ankles.

Example

Specification

| Metric | Value |
|---|---|
| Average Precision (AP) | 42.8% |
| GFlops | 15.435 |
| MParams | 4.099 |
| Source framework | Caffe* |

The Average Precision metric is described on the COCO Keypoint Evaluation site.

The model was tested on the COCO validation subset used in the original paper, "Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields".

Inputs

Image, name: `data`, shape: `1, 3, 256, 456` in the `B, C, H, W` format, where:

- `B` - batch size
- `C` - number of channels
- `H` - image height
- `W` - image width

Expected color order is BGR.
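As a rough illustration of the input layout described above, the following sketch rearranges an image into the `1, 3, 256, 456` tensor. It assumes NumPy is available and that the image has already been resized to 256 x 456 by some other means. The function names `to_network_input` and `rgb_to_bgr` are hypothetical, and the element type is left unchanged because this section does not state one.

```python
import numpy as np

# Input geometry stated in this section: B, C, H, W = 1, 3, 256, 456.
INPUT_SHAPE = (1, 3, 256, 456)


def rgb_to_bgr(image_rgb_hwc: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 image (RGB to BGR)."""
    return image_rgb_hwc[:, :, ::-1]


def to_network_input(image_bgr_hwc: np.ndarray) -> np.ndarray:
    """Turn an H x W x C BGR image into a B x C x H x W tensor.

    Assumption: the image is already 256 x 456. Resizing is out of scope here.
    """
    _, channels, height, width = INPUT_SHAPE
    if image_bgr_hwc.shape != (height, width, channels):
        raise ValueError(
            f"expected shape {(height, width, channels)}, got {image_bgr_hwc.shape}"
        )
    # Move channels first (H, W, C -> C, H, W), then add the batch axis.
    chw = image_bgr_hwc.transpose(2, 0, 1)
    return np.ascontiguousarray(chw[np.newaxis, ...])
```

An image loaded in RGB order would first go through `rgb_to_bgr`, since the expected color order is BGR.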

Outputs

The net outputs two blobs:

- Name: `Mconv7_stage2_L1`, shape: `1, 38, 32, 57`. Contains keypoint pairwise relations (part affinity fields).
- Name: `Mconv7_stage2_L2`, shape: `1, 19, 32, 57`. Contains keypoint heatmaps.
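The output shapes imply that both blobs are 8 times smaller than the input in each spatial dimension (256 / 32 = 8 and 456 / 57 = 8). The sketch below finds the strongest cell in a single heatmap channel and maps it back to input-image pixels. It is only an illustration: the helper names are hypothetical, mapping a cell to its top-left pixel is an assumption, and a real multi-person decoder would also need peak suppression and grouping of keypoints through the part affinity fields.

```python
# Shapes taken from this document: input is 256 x 456, output maps are 32 x 57.
INPUT_HEIGHT, INPUT_WIDTH = 256, 456
MAP_HEIGHT, MAP_WIDTH = 32, 57

# Downsampling factor between the input image and the output maps.
STRIDE_Y = INPUT_HEIGHT // MAP_HEIGHT
STRIDE_X = INPUT_WIDTH // MAP_WIDTH


def strongest_cell(heatmap):
    """Return (row, col, score) of the maximum in one 2D heatmap channel.

    `heatmap` is a list of rows, each row a list of floats.
    """
    best_row, best_col, best_score = 0, 0, heatmap[0][0]
    for row_index, row in enumerate(heatmap):
        for col_index, score in enumerate(row):
            if score > best_score:
                best_row, best_col, best_score = row_index, col_index, score
    return best_row, best_col, best_score


def cell_to_input_pixel(row, col):
    """Map a heatmap cell to (x, y) in input-image pixels.

    Assumption: the cell is mapped to its top-left corner in the input image.
    """
    return col * STRIDE_X, row * STRIDE_Y
```

For example, a peak at row 4, column 10 of a heatmap corresponds to roughly x = 80, y = 32 in the 456 x 256 input image.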

Demo usage

The model can be used in the following demos provided by the Open Model Zoo to show its capabilities:

Legal Information

[*] Other names and brands may be claimed as the property of others.