Image Colorization with OpenVINO#

This Jupyter notebook can be launched on-line, opening an interactive environment in a browser window. You can also make a local installation. Choose one of the following options:

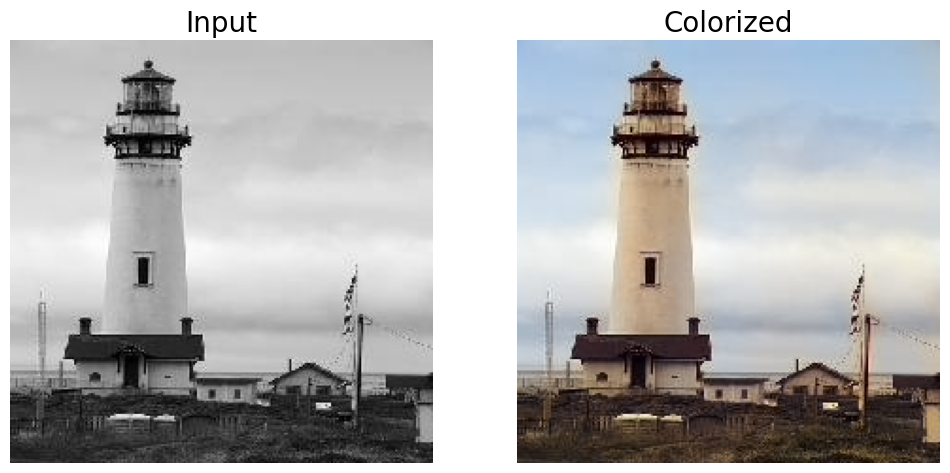

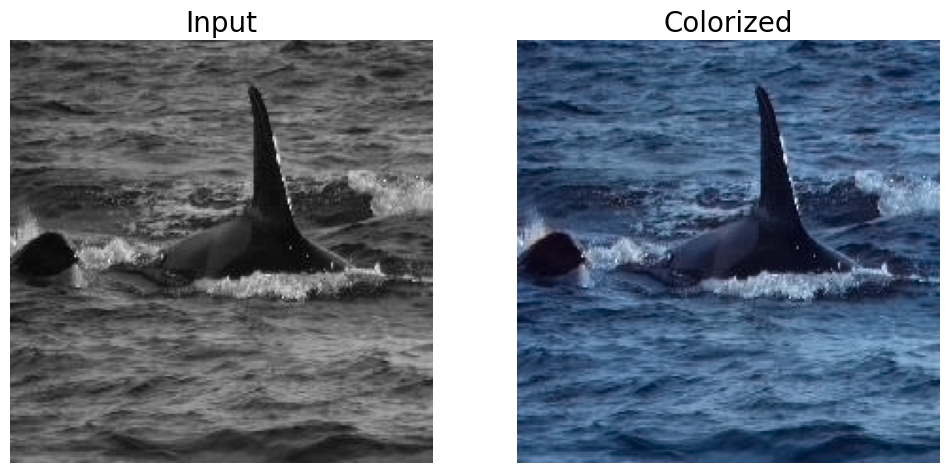

This notebook demonstrates how to colorize images with OpenVINO using the Colorization model colorization-v2 or colorization-siggraph from Open Model Zoo based on the paper Colorful Image Colorization models from Open Model Zoo.

Let there be color#

Given a grayscale image as input, the model generates colorized version of the image as the output.

About Colorization-v2#

The colorization-v2 model is one of the colorization group of models designed to perform image colorization.

Model trained on the ImageNet dataset.

Model consumes L-channel of LAB-image as input and produces predict A- and B-channels of LAB-image as output.

About Colorization-siggraph#

The colorization-siggraph model is one of the colorization group of models designed to real-time user-guided image colorization.

Model trained on the ImageNet dataset with synthetically generated user interaction.

Model consumes L-channel of LAB-image as input and produces predict A- and B-channels of LAB-image as output.

See the colorization repository for more details.

Table of contents:#

import platform

%pip install "openvino-dev>=2024.0.0" opencv-python tqdm

if platform.system() != "Windows":

%pip install -q "matplotlib>=3.4"

else:

%pip install -q "matplotlib>=3.4,<3.7"

Requirement already satisfied: openvino-dev>=2024.0.0 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (2024.1.0) Requirement already satisfied: opencv-python in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (4.9.0.80) Requirement already satisfied: tqdm in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (4.66.4) Requirement already satisfied: defusedxml>=0.7.1 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (0.7.1) Requirement already satisfied: networkx<=3.1.0 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (3.1) Requirement already satisfied: numpy<2.0.0,>=1.16.6 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (1.23.5) Requirement already satisfied: openvino-telemetry>=2023.2.1 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (2024.1.0) Requirement already satisfied: packaging in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (24.0) Requirement already satisfied: pyyaml>=5.4.1 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (6.0.1) Requirement already satisfied: requests>=2.25.1 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (2.31.0) Requirement already satisfied: openvino==2024.1.0 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from openvino-dev>=2024.0.0) (2024.1.0) Requirement already satisfied: charset-normalizer<4,>=2 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from requests>=2.25.1->openvino-dev>=2024.0.0) (3.3.2) Requirement already satisfied: idna<4,>=2.5 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from requests>=2.25.1->openvino-dev>=2024.0.0) (3.7) Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from requests>=2.25.1->openvino-dev>=2024.0.0) (2.2.1) Requirement already satisfied: certifi>=2017.4.17 in /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages (from requests>=2.25.1->openvino-dev>=2024.0.0) (2024.2.2) DEPRECATION: pytorch-lightning 1.6.5 has a non-standard dependency specifier torch>=1.8.*. pip 24.1 will enforce this behaviour change. A possible replacement is to upgrade to a newer version of pytorch-lightning or contact the author to suggest that they release a version with a conforming dependency specifiers. Discussion can be found at pypa/pip#12063 Note: you may need to restart the kernel to use updated packages. DEPRECATION: pytorch-lightning 1.6.5 has a non-standard dependency specifier torch>=1.8.*. pip 24.1 will enforce this behaviour change. A possible replacement is to upgrade to a newer version of pytorch-lightning or contact the author to suggest that they release a version with a conforming dependency specifiers. Discussion can be found at pypa/pip#12063 Note: you may need to restart the kernel to use updated packages.

Imports#

import os

from pathlib import Path

import cv2

import matplotlib.pyplot as plt

import numpy as np

import openvino as ov

# Fetch `notebook_utils` module

import requests

r = requests.get(

url="https://raw.githubusercontent.com/openvinotoolkit/openvino_notebooks/latest/utils/notebook_utils.py",

)

open("notebook_utils.py", "w").write(r.text)

import notebook_utils as utils

Configurations#

PRECISION- {FP16, FP32}, default: FP16.MODEL_DIR- directory where the model is to be stored, default: public.MODEL_NAME- name of the model used for inference, default: colorization-v2.DATA_DIR- directory where test images are stored, default: data.

PRECISION = "FP16"

MODEL_DIR = "models"

MODEL_NAME = "colorization-v2"

# MODEL_NAME="colorization-siggraph"

MODEL_PATH = f"{MODEL_DIR}/public/{MODEL_NAME}/{PRECISION}/{MODEL_NAME}.xml"

DATA_DIR = "data"

Select inference device#

select device from dropdown list for running inference using OpenVINO

import ipywidgets as widgets

core = ov.Core()

device = widgets.Dropdown(

options=core.available_devices + ["AUTO"],

value="AUTO",

description="Device:",

disabled=False,

)

device

Dropdown(description='Device:', index=1, options=('CPU', 'AUTO'), value='AUTO')

omz_downloader downloads model files from online sources and, if

necessary, patches them to make them more usable with Model Converter.

In this case, omz_downloader downloads the checkpoint and pytorch

model of

colorization-v2

or

colorization-siggraph

from Open Model

Zoo

and saves it under MODEL_DIR, as specified in the configuration

above.

download_command = f"omz_downloader " f"--name {MODEL_NAME} " f"--output_dir {MODEL_DIR} " f"--cache_dir {MODEL_DIR}"

! $download_command

################|| Downloading colorization-v2 ||################

========== Downloading models/public/colorization-v2/ckpt/colorization-v2-eccv16.pth

========== Downloading models/public/colorization-v2/model/__init__.py

========== Downloading models/public/colorization-v2/model/base_color.py

========== Downloading models/public/colorization-v2/model/eccv16.py

========== Replacing text in models/public/colorization-v2/model/__init__.py

========== Replacing text in models/public/colorization-v2/model/__init__.py

========== Replacing text in models/public/colorization-v2/model/eccv16.py

omz_converter converts the models that are not in the OpenVINO™ IR

format into that format using model conversion API.

The downloaded pytorch model is not in OpenVINO IR format which is

required for inference with OpenVINO runtime. omz_converter is used

to convert the downloaded pytorch model into ONNX and OpenVINO IR format

respectively

if not os.path.exists(MODEL_PATH):

convert_command = f"omz_converter " f"--name {MODEL_NAME} " f"--download_dir {MODEL_DIR} " f"--precisions {PRECISION}"

! $convert_command

========== Converting colorization-v2 to ONNX

Conversion to ONNX command: /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/bin/python -- /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/lib/python3.8/site-packages/omz_tools/internal_scripts/pytorch_to_onnx.py --model-path=models/public/colorization-v2 --model-name=ECCVGenerator --weights=models/public/colorization-v2/ckpt/colorization-v2-eccv16.pth --import-module=model --input-shape=1,1,256,256 --output-file=models/public/colorization-v2/colorization-v2-eccv16.onnx --input-names=data_l --output-names=color_ab

ONNX check passed successfully.

========== Converting colorization-v2 to IR (FP16)

Conversion command: /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/bin/python -- /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/.venv/bin/mo --framework=onnx --output_dir=models/public/colorization-v2/FP16 --model_name=colorization-v2 --input=data_l --output=color_ab --input_model=models/public/colorization-v2/colorization-v2-eccv16.onnx '--layout=data_l(NCHW)' '--input_shape=[1, 1, 256, 256]' --compress_to_fp16=True

[ INFO ] Generated IR will be compressed to FP16. If you get lower accuracy, please consider disabling compression explicitly by adding argument --compress_to_fp16=False.

Find more information about compression to FP16 at https://docs.openvino.ai/2023.0/openvino_docs_MO_DG_FP16_Compression.html

[ INFO ] MO command line tool is considered as the legacy conversion API as of OpenVINO 2023.2 release. Please use OpenVINO Model Converter (OVC). OVC represents a lightweight alternative of MO and provides simplified model conversion API.

Find more information about transition from MO to OVC at https://docs.openvino.ai/2023.2/openvino_docs_OV_Converter_UG_prepare_model_convert_model_MO_OVC_transition.html

[ SUCCESS ] Generated IR version 11 model.

[ SUCCESS ] XML file: /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/notebooks/vision-image-colorization/models/public/colorization-v2/FP16/colorization-v2.xml

[ SUCCESS ] BIN file: /opt/home/k8sworker/ci-ai/cibuilds/ov-notebook/OVNotebookOps-674/.workspace/scm/ov-notebook/notebooks/vision-image-colorization/models/public/colorization-v2/FP16/colorization-v2.bin

Load the model in OpenVINO Runtime with ie.read_model and compile it

for the specified device with ie.compile_model.

core = ov.Core()

model = core.read_model(model=MODEL_PATH)

compiled_model = core.compile_model(model=model, device_name=device.value)

input_layer = compiled_model.input(0)

output_layer = compiled_model.output(0)

N, C, H, W = list(input_layer.shape)

def read_image(impath: str) -> np.ndarray:

"""

Returns an image as ndarra, given path to an image reads the

(BGR) image using opencv's imread() API.

Parameter:

impath (string): Path of the image to be read and returned.

Returns:

image (ndarray): Numpy array representing the read image.

"""

raw_image = cv2.imread(impath)

if raw_image.shape[2] > 1:

image = cv2.cvtColor(cv2.cvtColor(raw_image, cv2.COLOR_BGR2GRAY), cv2.COLOR_GRAY2RGB)

else:

image = cv2.cvtColor(image, cv2.COLOR_GRAY2RGB)

return image

def plot_image(image: np.ndarray, title: str = "") -> None:

"""

Given a image as ndarray and title as string, display it using

matplotlib.

Parameters:

image (ndarray): Numpy array representing the image to be

displayed.

title (string): String representing the title of the plot.

Returns:

None

"""

plt.imshow(image)

plt.title(title)

plt.axis("off")

plt.show()

def plot_output(gray_img: np.ndarray, color_img: np.ndarray) -> None:

"""

Plots the original (bw or grayscale) image and colorized image

on different column axes for comparing side by side.

Parameters:

gray_image (ndarray): Numpy array representing the original image.

color_image (ndarray): Numpy array representing the model output.

Returns:

None

"""

fig = plt.figure(figsize=(12, 12))

ax1 = fig.add_subplot(1, 2, 1)

plt.title("Input", fontsize=20)

ax1.axis("off")

ax2 = fig.add_subplot(1, 2, 2)

plt.title("Colorized", fontsize=20)

ax2.axis("off")

ax1.imshow(gray_img)

ax2.imshow(color_img)

plt.show()

img_url_0 = "https://user-images.githubusercontent.com/18904157/180923287-20339d01-b1bf-493f-9a0d-55eff997aff1.jpg"

img_url_1 = "https://user-images.githubusercontent.com/18904157/180923289-0bb71e09-25e1-46a6-aaf1-e8f666b62d26.jpg"

image_file_0 = utils.download_file(

img_url_0,

filename="test_0.jpg",

directory="data",

show_progress=False,

silent=True,

timeout=30,

)

assert Path(image_file_0).exists()

image_file_1 = utils.download_file(

img_url_1,

filename="test_1.jpg",

directory="data",

show_progress=False,

silent=True,

timeout=30,

)

assert Path(image_file_1).exists()

test_img_0 = read_image("data/test_0.jpg")

test_img_1 = read_image("data/test_1.jpg")

def colorize(gray_img: np.ndarray) -> np.ndarray:

"""

Given an image as ndarray for inference convert the image into LAB image,

the model consumes as input L-Channel of LAB image and provides output

A & B - Channels of LAB image. i.e returns a colorized image

Parameters:

gray_img (ndarray): Numpy array representing the original

image.

Returns:

colorize_image (ndarray): Numpy arrray depicting the

colorized version of the original

image.

"""

# Preprocess

h_in, w_in, _ = gray_img.shape

img_rgb = gray_img.astype(np.float32) / 255

img_lab = cv2.cvtColor(img_rgb, cv2.COLOR_RGB2Lab)

img_l_rs = cv2.resize(img_lab.copy(), (W, H))[:, :, 0]

# Inference

inputs = np.expand_dims(img_l_rs, axis=[0, 1])

res = compiled_model([inputs])[output_layer]

update_res = np.squeeze(res)

# Post-process

out = update_res.transpose((1, 2, 0))

out = cv2.resize(out, (w_in, h_in))

img_lab_out = np.concatenate((img_lab[:, :, 0][:, :, np.newaxis], out), axis=2)

img_bgr_out = np.clip(cv2.cvtColor(img_lab_out, cv2.COLOR_Lab2RGB), 0, 1)

colorized_image = (cv2.resize(img_bgr_out, (w_in, h_in)) * 255).astype(np.uint8)

return colorized_image

color_img_0 = colorize(test_img_0)

color_img_1 = colorize(test_img_1)

plot_output(test_img_0, color_img_0)

plot_output(test_img_1, color_img_1)