OpenAI API image edit endpoint#

API Reference#

OpenVINO Model Server includes now the images/edits endpoint using OpenAI API.

It is used to execute image2image and inpainting tasks with OpenVINO GenAI pipelines.

Please see the OpenAI API Reference for more information on the API.

The endpoint is exposed via a path:

http://server_name:port/v3/images/edits

Request body must be in multipart/form-data format.

Example request#

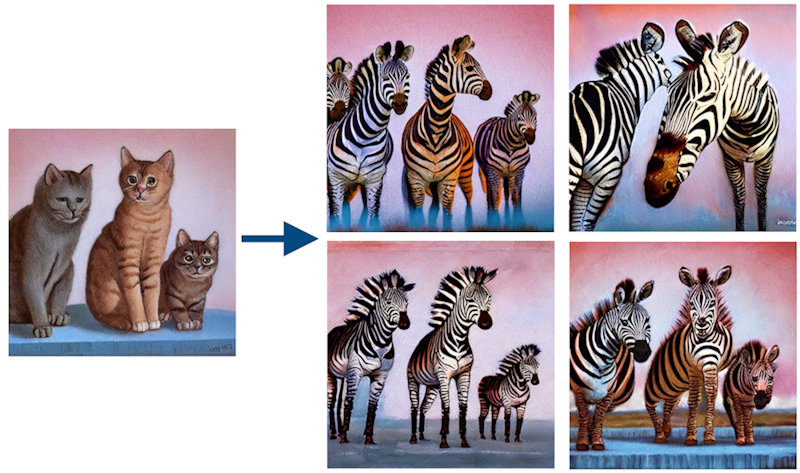

curl -X POST http://localhost:8000/v3/images/edits \

-F "model=OpenVINO/stable-diffusion-v1-5-fp16-ov" \

-F "image=@three_cats.png" \

-F "strength=0.7" \

-F 'prompt=Three zebras' \

| jq -r '.data[0].b64_json' | base64 --decode > edit.png

Generate 4 variations of the edit#

curl -X POST http://localhost:8000/v3/images/edits \

-F "model=OpenVINO/stable-diffusion-v1-5-fp16-ov" \

-F "image=@three_cats.png" \

-F "strength=0.7" \

-F "prompt=Three zebras" \

-F "n=4" \

| jq -r '.data[].b64_json' \

| awk '{print > ("img" NR ".b64") } END { for(i=1;i<=NR;i++) system("base64 --decode img" i ".b64 > edit" i ".png && rm img" i ".b64") }'

Example response#

{

"data": [

{

"b64_json": "..."

}

]

}

Request#

Param |

OpenVINO Model Server |

OpenAI /images/edits API |

Type |

Description |

|---|---|---|---|---|

model |

✅ |

✅ |

string (required) |

Name of the model to use. Name assigned to a MediaPipe graph configured to schedule generation using desired embedding model. Note: This can also be omitted to fall back to URI based routing. Read more on routing topic TODO |

image |

⚠️ |

✅ |

string or array of strings (required) |

The image to edit. Must be a single image (⚠️Note: Array of strings is not supported for now.) |

mask |

❌ |

✅ |

string |

Triggers inpainting pipeline type. An additional image whose fully transparent areas (e.g. where alpha is zero) indicate where |

prompt |

✅ |

✅ |

string (required) |

A text description of the desired image(s). |

size |

✅ |

✅ |

string or null (default: auto) |

The size of the generated images. Must be in WxH format, example: |

n |

✅ |

✅ |

integer or null (default: |

A number of images to generate. If you want to generate multiple images for the same combination of generation parameters and text prompts, you can use this parameter for better performance as internally computations will be performed with batch for Unet / Transformer models and text embeddings tensors will also be computed only once. |

input_fidelity |

⚠️ |

✅ |

string or null |

Control how much effort the model will exert to match the style and features, especially facial features, of input images. (⚠️Note: Not supported for now - use |

background |

❌ |

✅ |

string or null (default: auto) |

Allows to set transparency for the background of the generated image(s). Not supported for now. |

stream |

❌ |

✅ |

boolean or null (default: false) |

Generate the image in streaming mode. Not supported for now. |

style |

❌ |

✅ |

string or null (default: vivid) |

The style of the generated images. Recognized OpenAI settings, but not supported: vivid, natural. |

moderation |

❌ |

✅ |

string (default: auto) |

Control the content-moderation level for images generated by endpoint. Either |

output_compression |

❌ |

✅ |

integer or null (default: |

The compression level (0-100%) for the generated images. Not supported for now. |

output_format |

❌ |

✅ |

string or null |

The format in which the generated images are returned. Not supported for now. |

partial_images |

❌ |

✅ |

integer or null |

The number of partial images to generate. This parameter is used for streaming responses that return partial images. Value must be between 0 and 3. When set to 0, the response will be a single image sent in one streaming event. Not supported for now. |

quality |

❌ |

✅ |

string or null (default: auto) |

Quality of the image that will be generated. Recognized OpenAI qualities, but currently not supported: auto, high, medium, low, hd, standard |

response_format |

⚠️ |

✅ |

string or null (default: b64_json) |

The format of the images in output. Recognized options: b64_json or url. Only b64_json is supported for now (default). |

user |

❌ |

✅ |

string (optional) |

A unique identifier representing your end-user that allows to detect abuse. Not supported for now. |

Parameters supported via extra_body field#

Param |

OpenVINO Model Server |

OpenAI /images/generations API |

Type |

Description |

|---|---|---|---|---|

prompt_2 |

✅ |

❌ |

string (optional) |

Prompt 2 for models which have at least two text encoders (SDXL/SD3/FLUX). |

prompt_3 |

✅ |

❌ |

string (optional) |

Prompt 3 for models which have at least three text encoders (SD3). |

negative_prompt |

✅ |

❌ |

string (optional) |

Negative prompt for models which support negative prompt (SD/SDXL/SD3). |

negative_prompt_2 |

✅ |

❌ |

string (optional) |

Negative prompt 2 for models which support negative prompt (SDXL/SD3). |

negative_prompt_3 |

✅ |

❌ |

string (optional) |

Negative prompt 3 for models which support negative prompt (SD3). |

num_images_per_prompt |

✅ |

❌ |

integer (default: |

The same as base parameter |

num_inference_steps |

✅ |

❌ |

integer (default: |

Defines denoising iteration count. Higher values increase quality and generation time, lower values generate faster with less detail. |

guidance_scale |

✅ |

❌ |

float (optional) |

Guidance scale parameter which controls how model sticks to text embeddings generated by text encoders within a pipeline. Higher value of guidance scale moves image generation towards text embeddings, but resulting image will be less natural and more augmented. |

strength |

✅ |

❌ |

float (optional) min: 0.0, max: 1.0 |

Indicates extent to transform the reference |

rng_seed |

✅ |

❌ |

integer (optional) |

Seed for random generator. |

max_sequence_length |

✅ |

❌ |

integer (optional) |

This parameters limits max sequence length for T5 encoder for SD3 and FLUX models. T5 tokenizer output is padded with pad tokens to ‘max_sequence_length’ within a pipeline. So, for better performance, you can specify this parameter to lower value to speed-up T5 encoder inference as well as inference of transformer denoising model. For optimal performance it can be set to a number of tokens for |

Response#

Param |

OpenVINO Model Server |

OpenAI /images/generations API |

Type |

Description |

|---|---|---|---|---|

data |

✅ |

✅ |

array |

A list of generated images. |

data.b64_json |

✅ |

✅ |

string |

The base64-encoded JSON of the generated image. |

data.url |

❌ |

✅ |

string |

The URL of the generated image if |

data.revised_prompt |

❌ |

✅ |

string |

The revised prompt that was used to generate the image. Unsupported for now. |

usage |

❌ |

✅ |

dictionary |

Info about assessed tokens. Unsupported for now. |

created |

❌ |

✅ |

string |

The Unix timestamp (in seconds) of when the image was created. Unsupported for now. |

Currently unsupported endpoints:#

images/variations

Error handling#

Endpoint can raise an error related to incorrect request in the following conditions:

Incorrect format of any of the fields based on the schema

Tokenized prompt exceeds the maximum length of the model context.

Model does not support requested width and height

Administrator defined min/max parameter value requirements are not met