Visual Studio Code Local Assistant#

Intro#

With the rise of AI PC capabilities, hosting your own Visual Studio Code assistant is within reach. In this demo, we show how to deploy local LLM serving with OpenVINO Model Server (OVMS) and integrate it with the Continue extension, using GPU acceleration.

Requirements#

Windows (for standalone app) or Linux (using Docker)

Python installed (for model preparation only)

Intel Meteor Lake, Lunar Lake, Arrow Lake or newer Intel CPU.

Prepare Code Chat/Edit Model#

We need a medium-sized model: large enough to give reliable responses, but small enough to fit into the memory available on the host or on a discrete GPU.
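As a rough aid for choosing a model, the sketch below filters the larger models in this demo by the memory figures quoted in the notes of this section. The `ModelFootprint` class and `models_that_fit` helper are hypothetical names introduced only for illustration, and the numbers are approximate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelFootprint:
    name: str
    disk_gb: int            # approximate size of the exported model on disk
    vram_gb: int            # recommended minimum GPU memory for deployment
    conversion_ram_gb: int  # free RAM needed to pull and quantize the model


# Figures copied from the notes in this section; treat them as estimates.
FOOTPRINTS = [
    ModelFootprint("Qwen/Qwen3-Coder-30B-A3B-Instruct", 16, 19, 65),
    ModelFootprint("mistralai/Codestral-22B-v0.1", 12, 16, 50),
    ModelFootprint("openai/gpt-oss-20b", 12, 16, 45),
    ModelFootprint("unsloth/Devstral-Small-2507", 13, 16, 50),
]


def models_that_fit(vram_gb, ram_gb):
    """Return the names of models whose stated requirements fit the machine."""
    return [
        m.name
        for m in FOOTPRINTS
        if m.vram_gb <= vram_gb and m.conversion_ram_gb <= ram_gb
    ]
```

For example, `models_that_fit(24, 64)` leaves out Qwen3-Coder-30B-A3B-Instruct because its conversion needs 65GB of free RAM. The pre-converted OpenVINO/Qwen3-4B-int4-ov and OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov models are not listed because the source gives no figures for them.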

Download the export script, install its dependencies, and create a directory for the models:

curl https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/demos/common/export_models/export_model.py -o export_model.py

pip3 install -r https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/demos/common/export_models/requirements.txt

mkdir models

Note: Users in China need to set the environment variable HF_ENDPOINT="https://hf-mirror.com" before running the export script so that it can connect to the HF Hub.

Pull and add the model on Linux:

Qwen/Qwen3-Coder-30B-A3B-Instruct:

python export_model.py text_generation --source_model Qwen/Qwen3-Coder-30B-A3B-Instruct --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --target_device GPU --tool_parser qwen3coder

curl -L -o models/Qwen/Qwen3-Coder-30B-A3B-Instruct/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_qwen3coder_instruct.jinja

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config \
    --config_path /models/config_all.json \
    --model_name Qwen/Qwen3-Coder-30B-A3B-Instruct \
    --model_path Qwen/Qwen3-Coder-30B-A3B-Instruct

Note: For deployment, the model requires ~16GB of disk space, and 19GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 65GB of free RAM.

mistralai/Codestral-22B-v0.1:

python export_model.py text_generation --source_model mistralai/Codestral-22B-v0.1 --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --target_device GPU

curl -L -o models/mistralai/Codestral-22B-v0.1/chat_template.jinja https://raw.githubusercontent.com/vllm-project/vllm/refs/tags/v0.10.1.1/examples/tool_chat_template_mistral_parallel.jinja

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config \
    --config_path /models/config_all.json \
    --model_name mistralai/Codestral-22B-v0.1 \
    --model_path mistralai/Codestral-22B-v0.1

Note: For deployment, the model requires ~12GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 50GB of free RAM.

openai/gpt-oss-20b:

python export_model.py text_generation --source_model openai/gpt-oss-20b --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --tool_parser gptoss --reasoning_parser gptoss --target_device GPU

curl -L -o models/openai/gpt-oss-20b/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_gpt_oss.jinja

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config \
    --config_path /models/config_all.json \
    --model_name openai/gpt-oss-20b \
    --model_path openai/gpt-oss-20b

Note: For deployment, the model requires ~12GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 45GB of free RAM.

unsloth/Devstral-Small-2507:

python export_model.py text_generation --source_model unsloth/Devstral-Small-2507 --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --tool_parser devstral --target_device GPU

curl -L -o models/unsloth/Devstral-Small-2507/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_devstral.jinja

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config \
    --config_path /models/config_all.json \
    --model_name unsloth/Devstral-Small-2507 \
    --model_path unsloth/Devstral-Small-2507

Note: For deployment, the model requires ~13GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 50GB of free RAM.

OpenVINO/Qwen3-4B-int4-ov:

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --pull \
    --source_model OpenVINO/Qwen3-4B-int4-ov \
    --model_repository_path /models \
    --model_name OpenVINO/Qwen3-4B-int4-ov \
    --task text_generation \
    --tool_parser hermes3 \
    --target_device GPU

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config --config_path /models/config_all.json \
    --model_name OpenVINO/Qwen3-4B-int4-ov \
    --model_path OpenVINO/Qwen3-4B-int4-ov

Note: Qwen3 models are available in the OpenVINO repository on Hugging Face in different sizes and precisions, so you can choose one that suits your use case and hardware. RAM requirements depend on the model quantization.

OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov:

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --pull \
    --source_model OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov \
    --model_repository_path /models \
    --model_name OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov \
    --task text_generation \
    --target_device GPU

docker run -d --rm --user $(id -u):$(id -g) -v $(pwd)/models:/models/:rw \
    openvino/model_server:weekly \
    --add_to_config \
    --config_path /models/config_all.json \
    --model_name OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov \
    --model_path OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov

Note: Qwen2.5-Coder models are available in the OpenVINO repository on Hugging Face in different sizes and precisions, so you can choose one that suits your use case and hardware. RAM requirements depend on the model quantization.

Pull and add the model on Windows:

Qwen/Qwen3-Coder-30B-A3B-Instruct:

python export_model.py text_generation --source_model Qwen/Qwen3-Coder-30B-A3B-Instruct --weight-format int8 --config_file_path models/config_all.json --model_repository_path models --target_device GPU --tool_parser qwen3coder

curl -L -o models/Qwen/Qwen3-Coder-30B-A3B-Instruct/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_qwen3coder_instruct.jinja

ovms.exe --add_to_config --config_path models/config_all.json --model_name Qwen/Qwen3-Coder-30B-A3B-Instruct --model_path Qwen/Qwen3-Coder-30B-A3B-Instruct

Note: For deployment, the model requires ~16GB of disk space, and 19GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 65GB of free RAM.

mistralai/Codestral-22B-v0.1:

python export_model.py text_generation --source_model mistralai/Codestral-22B-v0.1 --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --target_device GPU

curl -L -o models/mistralai/Codestral-22B-v0.1/chat_template.jinja https://raw.githubusercontent.com/vllm-project/vllm/refs/tags/v0.10.1.1/examples/tool_chat_template_mistral_parallel.jinja

ovms.exe --add_to_config --config_path models/config_all.json --model_name mistralai/Codestral-22B-v0.1 --model_path mistralai/Codestral-22B-v0.1

Note: For deployment, the model requires ~12GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 50GB of free RAM.

openai/gpt-oss-20b:

python export_model.py text_generation --source_model openai/gpt-oss-20b --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --target_device GPU --tool_parser gptoss --reasoning_parser gptoss

curl -L -o models/openai/gpt-oss-20b/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_gpt_oss.jinja

ovms.exe --add_to_config --config_path models/config_all.json --model_name openai/gpt-oss-20b --model_path openai/gpt-oss-20b

Note: For deployment, the model requires ~12GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 45GB of free RAM.

Note: Use the --pipeline_type LM parameter in the export command for version 2025.4.1 or older. It disables continuous batching and CPU support in weekly or 2026.0+ releases.

unsloth/Devstral-Small-2507:

python export_model.py text_generation --source_model unsloth/Devstral-Small-2507 --weight-format int4 --config_file_path models/config_all.json --model_repository_path models --tool_parser devstral --target_device GPU

curl -L -o models/unsloth/Devstral-Small-2507/chat_template.jinja https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/extras/chat_template_examples/chat_template_devstral.jinja

ovms.exe --add_to_config --config_path models/config_all.json --model_name unsloth/Devstral-Small-2507 --model_path unsloth/Devstral-Small-2507

Note: For deployment, the model requires ~13GB of disk space, and 16GB+ of VRAM on the GPU is recommended. For conversion, the original model is pulled and quantized, which requires 50GB of free RAM.

OpenVINO/Qwen3-4B-int4-ov:

ovms.exe --pull --source_model OpenVINO/Qwen3-4B-int4-ov --model_repository_path models --model_name OpenVINO/Qwen3-4B-int4-ov --target_device GPU --task text_generation --tool_parser hermes3

ovms.exe --add_to_config --config_path models/config_all.json --model_name OpenVINO/Qwen3-4B-int4-ov --model_path OpenVINO/Qwen3-4B-int4-ov

Note: Qwen3 models are available in the OpenVINO repository on Hugging Face in different sizes and precisions, so you can choose one that suits your use case and hardware.

OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov:

ovms.exe --pull --source_model OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov --model_repository_path models --model_name OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov --target_device GPU --task text_generation

ovms.exe --add_to_config --config_path models/config_all.json --model_name OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov --model_path OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov

Note: Qwen2.5-Coder models are available in the OpenVINO repository on Hugging Face in different sizes and precisions, so you can choose one that suits your use case and hardware.
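Before starting the server, it can help to confirm which models were registered in models/config_all.json. The sketch below is one way to do that. It assumes the file uses the `model_config_list` and `mediapipe_config_list` keys; the helper name `list_configured_models` is hypothetical, and the exact layout written by the export script or by --add_to_config may differ between releases.

```python
import json
from pathlib import Path


def list_configured_models(config_path):
    """Return the model names found in an OVMS JSON config file."""
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    names = []
    # Assumed layout: entries here wrap their settings in a "config" object.
    for entry in config.get("model_config_list", []):
        names.append(entry.get("config", entry).get("name"))
    # Assumed layout: graph-based servables carry "name" at the top level.
    for entry in config.get("mediapipe_config_list", []):
        names.append(entry.get("name"))
    # Drop entries without a name instead of failing on them.
    return [name for name in names if name]
```

Calling `list_configured_models("models/config_all.json")` should return every model added in the steps above. A missing name suggests that the corresponding add step did not complete.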

Set Up Server#

Run OpenVINO Model Server with all downloaded models loaded at the same time:

Windows: deploying on bare metal

Please refer to the OpenVINO Model Server installation guide first.

set MOE_USE_MICRO_GEMM_PREFILL=0

ovms --rest_port 8000 --config_path ./models/config_all.json

Linux: via Docker with CPU

docker run -d --rm -u $(id -u):$(id -g) -e MOE_USE_MICRO_GEMM_PREFILL=0 \
    -p 8000:8000 -v $(pwd)/:/workspace/ openvino/model_server:weekly --rest_port 8000 --config_path /workspace/models/config_all.json

Linux: via Docker with GPU

docker run -d --rm --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) -u $(id -u):$(id -g) -e MOE_USE_MICRO_GEMM_PREFILL=0 \
    -p 8000:8000 -v $(pwd)/:/workspace/ openvino/model_server:weekly --rest_port 8000 --config_path /workspace/models/config_all.json

Note: MOE_USE_MICRO_GEMM_PREFILL=0 is a workaround for Qwen3-Coder-30B-A3B-Instruct. The underlying issue will be fixed in release 2026.1 or the next weekly build.
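Once the server is running, a quick request can confirm that a model responds before Visual Studio Code is configured. The following is a minimal sketch using only the Python standard library. It assumes the server listens on port 8000 as in the commands above and exposes an OpenAI-compatible chat completions endpoint under /v3; the helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v3"  # assumes --rest_port 8000 on this host


def build_chat_request(model, prompt, base_url=BASE_URL):
    """Build (but do not send) a chat completion request for one prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
        "stream": False,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt, timeout=120):
    """Send the request and return the text of the first choice."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `ask("OpenVINO/Qwen3-4B-int4-ov", "Write a Python one-liner that reverses a string.")` should return generated text if that model was added to the config. The first request after startup may take longer while the model warms up.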

Set Up Visual Studio Code#

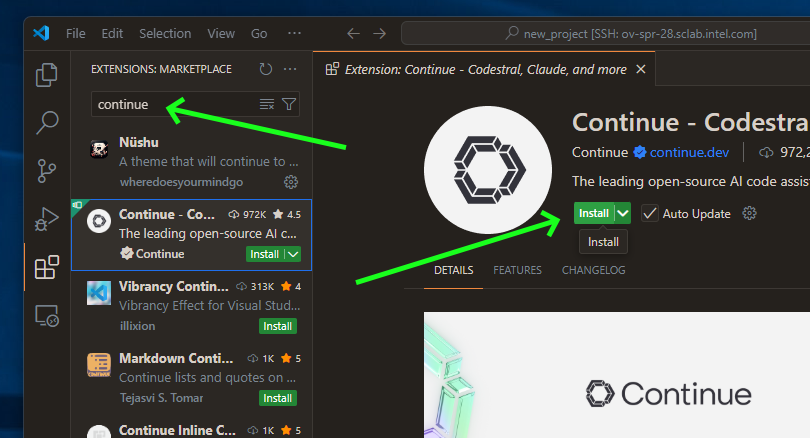

Download Continue plugin#

Note: This demo has been tested with Continue plugin version 1.2.11. While newer versions should work, some configuration options may vary.

Setup Local Assistant#

We need to point the Continue plugin to our OpenVINO Model Server instance. Open the configuration file:

Prepare a config:

Qwen/Qwen3-Coder-30B-A3B-Instruct:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS Qwen/Qwen3-Coder-30B-A3B
    provider: openai
    model: Qwen/Qwen3-Coder-30B-A3B-Instruct
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    capabilities:
      - tool_use
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      modelTimeout: 400
      onlyMyCode: true
      useCache: true
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

mistralai/Codestral-22B-v0.1:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS mistralai/Codestral-22B-v0.1
    provider: openai
    model: mistralai/Codestral-22B-v0.1
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    capabilities:
      - tool_use
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      useCache: true
      onlyMyCode: true
      modelTimeout: 400
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

openai/gpt-oss-20b:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS openai/gpt-oss-20b
    provider: openai
    model: openai/gpt-oss-20b
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
    capabilities:
      - tool_use
  - name: OVMS openai/gpt-oss-20b autocomplete
    provider: openai
    model: openai/gpt-oss-20b
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - autocomplete
    capabilities:
      - tool_use
    requestOptions:
      extraBodyProperties:
        reasoning_effort: none
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      useCache: true
      onlyMyCode: true
      modelTimeout: 400
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

unsloth/Devstral-Small-2507:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS unsloth/Devstral-Small-2507
    provider: openai
    model: unsloth/Devstral-Small-2507
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    capabilities:
      - tool_use
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      useCache: true
      onlyMyCode: true
      modelTimeout: 400
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

OpenVINO/Qwen3-4B-int4-ov:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS OpenVINO/Qwen3-4B
    provider: openai
    model: OpenVINO/Qwen3-4B-int4-ov
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    capabilities:
      - tool_use
    requestOptions:
      extraBodyProperties:
        chat_template_kwargs:
          enable_thinking: false
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      useCache: true
      onlyMyCode: true
      modelTimeout: 400
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov:

name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OVMS OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov
    provider: openai
    model: OpenVINO/Qwen2.5-Coder-3B-Instruct-int4-ov
    apiKey: unused
    apiBase: http://localhost:8000/v3
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    capabilities:
      - tool_use
    autocompleteOptions:
      maxPromptTokens: 500
      debounceDelay: 124
      useCache: true
      onlyMyCode: true
      modelTimeout: 400
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase

Note: For more information about this config, see configuration reference.
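The configs above differ mostly in the model name, so they can also be generated. The sketch below renders the common case (one model serving all four roles) as YAML text. The function name `render_continue_config` is hypothetical, and the nesting reflects our reading of the Continue YAML layout, where autocompleteOptions belongs to a model entry and context is a top-level key. Verify it against the configuration reference for your plugin version.

```python
def render_continue_config(model_id, api_base="http://localhost:8000/v3"):
    """Return a Continue YAML config for one model served by OVMS."""
    roles = ["chat", "edit", "apply", "autocomplete"]
    providers = ["code", "docs", "diff", "terminal", "problems", "folder", "codebase"]
    lines = [
        "name: Local Assistant",
        "version: 1.0.0",
        "schema: v1",
        "models:",
        f"  - name: OVMS {model_id}",
        "    provider: openai",
        f"    model: {model_id}",
        "    apiKey: unused",
        f"    apiBase: {api_base}",
        "    roles:",
        *[f"      - {role}" for role in roles],
        "    capabilities:",
        "      - tool_use",
        "    autocompleteOptions:",
        "      maxPromptTokens: 500",
        "      debounceDelay: 124",
        "      useCache: true",
        "      onlyMyCode: true",
        "      modelTimeout: 400",
        "context:",
        *[f"  - provider: {provider}" for provider in providers],
    ]
    return "\n".join(lines) + "\n"
```

Printing `render_continue_config("unsloth/Devstral-Small-2507")` yields text that can be pasted into the Continue configuration file. Models that need extra request options, such as the gpt-oss-20b and Qwen3-4B configs above, are not covered by this sketch.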

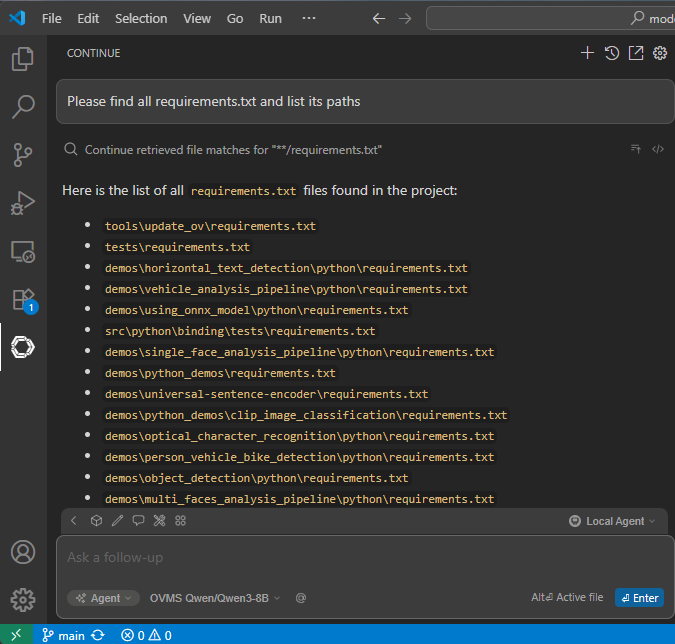

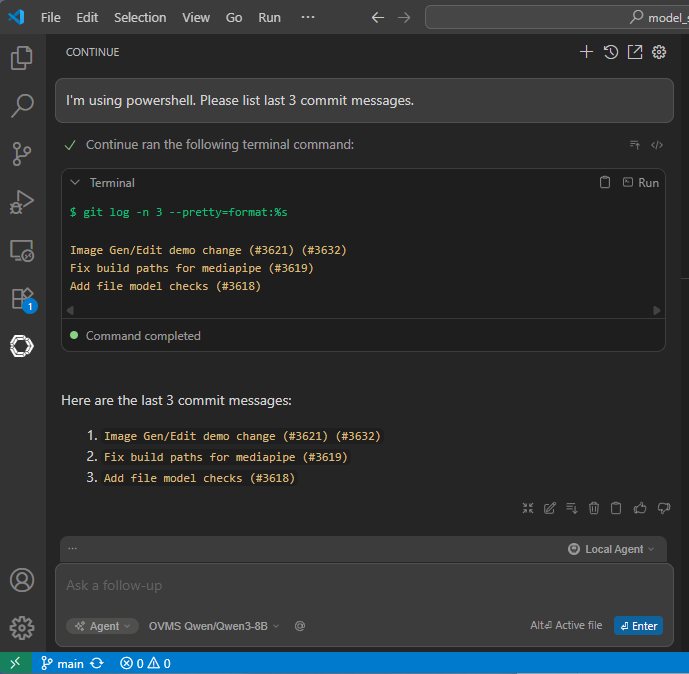

Chatting, code editing and autocompletion in action#

To use the chat feature, click the Continue button on the left sidebar.

Use CTRL+I to select and include source in a chat message.

Use CTRL+L to select and edit the source via a chat request.

Simply write code to see code autocompletion (NOTE: this is turned off by default).

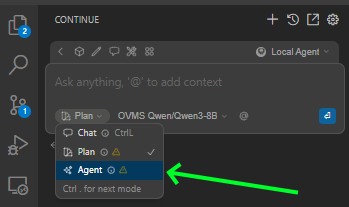

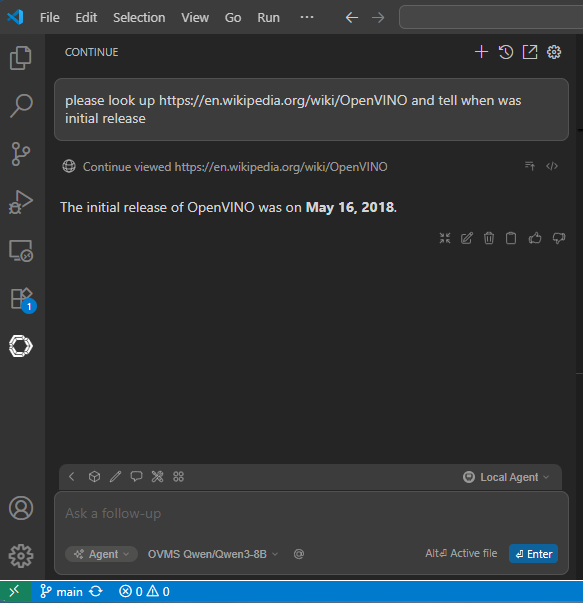

AI Agents in action#

The Continue plugin ships with multiple built-in tools. For the full list, please visit the Continue documentation.

To use them, select Agent Mode:

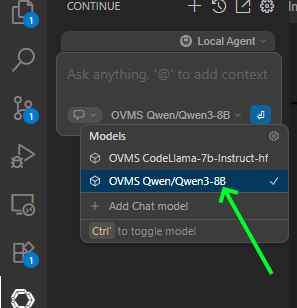

Select a model that supports tool calling from the model list:

Example use cases for tools:

Run terminal commands

Look up web links

Search files
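To illustrate what tool calling looks like at the API level, the sketch below builds a chat request that offers one tool and parses tool calls out of a response. It assumes the OpenAI-style `tools` and `tool_calls` fields. The `list_files` tool is a hypothetical example and is not one of Continue's built-in tools; the helper names are also made up for illustration.

```python
import json


def build_tool_request(model, prompt):
    """Build a chat completion payload that offers a single tool."""
    # Hypothetical tool definition, for illustration only.
    list_files_tool = {
        "type": "function",
        "function": {
            "name": "list_files",
            "description": "List files in a directory of the workspace.",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string"}},
                "required": ["path"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [list_files_tool],
        "tool_choice": "auto",
    }


def extract_tool_calls(response):
    """Return (name, arguments) pairs from an OpenAI-style response dict."""
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        function = call["function"]
        # Arguments arrive as a JSON-encoded string and need decoding.
        calls.append((function["name"], json.loads(function["arguments"])))
    return calls
```

In Agent Mode the plugin performs these steps itself: it sends its tool definitions with the request, runs the tool the model asks for, and returns the result in a follow-up message. A model exported without a tool parser is unlikely to produce structured `tool_calls`.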