Image Generation with OpenAI API#

This demo shows how to deploy image generation models (Stable Diffusion/Stable Diffusion 3/Stable Diffusion XL/FLUX) to create and edit images with the OpenVINO Model Server.

Image generation pipelines are exposed via OpenAI API images/generations and images/edits endpoints.

Check supported models.

Note: FLUX models are not supported on NPU.

Note: This demo was tested on Intel® Xeon®, Intel® Core™, Intel® Arc™ A770 and Intel® Arc™ B580 on Ubuntu 22/24, RedHat 9 and Windows 11.

Prerequisites#

RAM/vRAM: Select model size and precision according to your hardware capabilities. The requested resolution plays a significant role in memory consumption: the higher the resolution you request, the more RAM/vRAM is required.

Model preparation (one of the below):

preconfigured models from Hugging Face directly in OpenVINO IR format (list of Intel uploaded models available here)

or Python 3.9+ with pip and a Hugging Face account to download, convert and quantize manually using the Export Models Tool

Model Server deployment: installed Docker Engine or OVMS binary package according to the baremetal deployment guide

Client: Python for using the OpenAI client package and Pillow to save the image, or simply cURL
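As a rough illustration of the resolution note above, the number of pixels grows with the product of width and height, so doubling both sides quadruples the pixel count. The sketch below only compares pixel counts. Actual RAM/vRAM use also depends on the model, precision and device, so treat the ratio as a hint rather than a memory estimate. The helper names are hypothetical.

```python
def pixel_count(size: str) -> int:
    """Return the number of pixels for a size string such as '512x512'."""
    width, height = (int(part) for part in size.lower().split("x"))
    return width * height


def relative_pixels(size: str, baseline: str = "512x512") -> float:
    """Pixel count of `size` relative to `baseline` (a rough proxy only)."""
    return pixel_count(size) / pixel_count(baseline)


for size in ("512x512", "768x768", "1024x1024"):
    print(f"{size}: {relative_pixels(size):.2f}x the pixels of 512x512")
```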

Option 1. Downloading the models directly via OVMS#

NOTE: The model downloading feature is described in depth in a separate documentation page: Pulling HuggingFaces Models.

This command pulls the quantized OpenVINO/stable-diffusion-v1-5-int8-ov model directly from Hugging Face and starts serving it. If the model already exists locally, the download is skipped and serving starts immediately.

NOTE: Optionally, to only download the model and omit the serving part, use the --pull parameter.

CPU#

Start docker container:

mkdir -p models

docker run -d --rm --user $(id -u):$(id -g) -p 8000:8000 -v $(pwd)/models:/models/:rw \

-e http_proxy=$http_proxy -e https_proxy=$https_proxy -e no_proxy=$no_proxy \

openvino/model_server:latest \

--rest_port 8000 \

--model_repository_path /models/ \

--task image_generation \

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov

Assuming you have unpacked the model server package, make sure to:

On Windows: run the setupvars script

On Linux: set the LD_LIBRARY_PATH and PATH environment variables

as mentioned in the deployment guide, in every new shell that will start OpenVINO Model Server.

mkdir models

ovms --rest_port 8000 ^

--model_repository_path ./models/ ^

--task image_generation ^

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov

GPU#

To run the generation on an Intel GPU device, add the extra docker parameters --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) to the docker run command and use the docker image with GPU support. Export the models with precision matching the GPU capacity and adjust the pipeline configuration.

It can be applied using the commands below:

mkdir -p models

docker run -d --rm -p 8000:8000 -v $(pwd)/models:/models/:rw \

--user $(id -u):$(id -g) --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) \

-e http_proxy=$http_proxy -e https_proxy=$https_proxy -e no_proxy=$no_proxy \

openvino/model_server:latest-gpu \

--rest_port 8000 \

--model_repository_path /models/ \

--task image_generation \

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov \

--target_device GPU

Depending on how you prepared models in the first step of this demo, they are deployed to either CPU or GPU (it’s defined in config.json). If you run on GPU make sure to have appropriate drivers installed, so the device is accessible for the model server.

mkdir models

ovms --rest_port 8000 ^

--model_repository_path ./models/ ^

--task image_generation ^

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov ^

--target_device GPU

NPU or mixed device#

Image generation pipelines consist of 3 models: vae encoder, denoising and vae decoder. It is possible to select a device for each step separately, which is useful when the whole pipeline is too large to fit into NPU memory but the NPU can still handle some of the steps. The commands below pass NPU NPU NPU as the target device, which places all three steps on NPU; change individual entries to move a step to another device such as GPU.

To run the generation on an Intel NPU device, add the extra docker parameters --device /dev/accel --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) to the docker run command and use the docker image with NPU support. Export the models with precision matching the NPU capacity and adjust the pipeline configuration.

In this specific case, we also need to use --device /dev/dri, because we also use GPU.

NOTE: The NPU device requires the pipeline to be reshaped to a static shape, which is why the --resolution parameter is used to define the input resolution.

NOTE: In case the model loading phase takes too long, consider caching the model with the --cache_dir parameter, as seen in the example below.

It can be applied using the commands below:

mkdir -p models

mkdir -p cache

docker run -d --rm -p 8000:8000 \

-v $(pwd)/models:/models/:rw \

-v $(pwd)/cache:/cache/:rw \

--user $(id -u):$(id -g) --device /dev/accel --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) \

-e http_proxy=$http_proxy -e https_proxy=$https_proxy -e no_proxy=$no_proxy \

openvino/model_server:latest-gpu \

--rest_port 8000 \

--model_repository_path /models/ \

--task image_generation \

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov \

--target_device 'NPU NPU NPU' \

--resolution 512x512 \

--cache_dir /cache

mkdir models

mkdir cache

ovms --rest_port 8000 ^

--model_repository_path ./models/ ^

--task image_generation ^

--source_model OpenVINO/stable-diffusion-v1-5-int8-ov ^

--target_device "NPU NPU NPU" ^

--resolution 512x512 ^

--cache_dir ./cache

Option 2. Using export script to download, convert and quantize then start the serving#

Here, the original models in safetensors format and the tokenizers will be converted to OpenVINO IR format and optionally quantized to desired precision.

Quantization ensures faster initialization time, better performance and lower memory consumption.

Image generation pipeline parameters will be defined inside the graph.pbtxt file.

Download the export script, install its dependencies and create a directory for the models:

curl https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/demos/common/export_models/export_model.py -o export_model.py

pip3 install -r https://raw.githubusercontent.com/openvinotoolkit/model_server/refs/heads/main/demos/common/export_models/requirements.txt

mkdir models

Run export_model.py script to download and quantize the model:

Note: Before downloading the model, access must be requested. Follow the instructions on the HuggingFace model page to request access. When access is granted, create an authentication token on the HuggingFace account -> Settings -> Access Tokens page. Then authenticate via huggingface-cli login and enter the authentication token when prompted.

Note: Users in China need to set the environment variable HF_ENDPOINT="https://hf-mirror.com" before running the export script to connect to the HF Hub.

Note: The --extra_quantization_params parameter is used to pass additional parameters to the optimum-cli. It may be required to set the --group-size parameter when quantizing the model if you encounter errors like: Channel size 64 should be divisible by size of group 128.
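The error quoted above comes down to a divisibility check: a group size of 128 does not evenly divide a channel size of 64, while 64 does, which is why the export commands in this demo pass --group-size 64. A minimal sketch of that reasoning follows. The helper name and the candidate list are hypothetical and are not taken from optimum-cli.

```python
def pick_group_size(channel_size: int, candidates=(128, 64, 32)) -> int:
    """Return the largest candidate group size that evenly divides channel_size."""
    for group_size in candidates:
        if channel_size % group_size == 0:
            return group_size
    raise ValueError(f"no candidate group size divides {channel_size}")


# 128 does not divide 64, so the next candidate (64) is selected.
print(pick_group_size(64))
```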

Export model for CPU#

python export_model.py image_generation \

--source_model stable-diffusion-v1-5/stable-diffusion-v1-5 \

--weight-format int8 \

--config_file_path models/config.json \

--model_repository_path models \

--extra_quantization_params "--group-size 64" \

--overwrite_models

Export model for GPU#

python export_model.py image_generation \

--source_model stable-diffusion-v1-5/stable-diffusion-v1-5 \

--weight-format int8 \

--target_device GPU \

--config_file_path models/config.json \

--model_repository_path models \

--extra_quantization_params "--group-size 64" \

--overwrite_models

Export model for NPU or mixed device#

Image generation endpoints consist of 3 models: vae encoder, denoising and vae decoder. It is possible to select device for each step separately. In this example, we will use NPU for all the steps.

NOTE: The NPU device requires the pipeline to be reshaped to a static shape, which is why the --resolution parameter is used to define the input resolution.

NOTE: In case the model loading phase takes too long, consider caching the model with the --cache_dir parameter, as seen in the example below.

python export_model.py image_generation \

--source_model stable-diffusion-v1-5/stable-diffusion-v1-5 \

--weight-format int8 \

--target_device "NPU NPU NPU" \

--resolution 512x512 \

--ov_cache_dir /cache \

--config_file_path models/config.json \

--model_repository_path models \

--overwrite_models

Note: Change the --weight-format to quantize the model to int8, fp16 or int4 precision to reduce memory consumption and improve performance, or omit this parameter to keep the original precision.

Note: You can change the model used in the demo; please check it against the tested models list first.

The default configuration should work in most cases but the parameters can be tuned via export_model.py script arguments. Run the script with --help argument to check available parameters and see the Image Generation calculator documentation to learn more about configuration options.

Server Deployment#

Deploying with Docker

Select deployment option depending on how you prepared models in the previous step.

CPU

Running this command starts the docker container with CPU as the only target device:

docker run -d --rm -p 8000:8000 -v $(pwd)/models:/models:rw \

openvino/model_server:latest \

--rest_port 8000 \

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov \

--model_path /models/stable-diffusion-v1-5/stable-diffusion-v1-5

Assuming you have unpacked the model server package, make sure to:

On Windows: run the setupvars script

On Linux: set the LD_LIBRARY_PATH and PATH environment variables

as mentioned in the deployment guide, in every new shell that will start OpenVINO Model Server.

ovms --rest_port 8000 ^

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov ^

--model_path ./models/stable-diffusion-v1-5/stable-diffusion-v1-5

GPU

To run the generation on a GPU device, add the extra docker parameters --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) to the docker run command and use the image with GPU support. Export the models with precision matching the GPU capacity and adjust the pipeline configuration.

It can be applied using the commands below:

docker run -d --rm -p 8000:8000 -v $(pwd)/models:/models:rw \

--device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) \

openvino/model_server:latest-gpu \

--rest_port 8000 \

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov \

--model_path /models/stable-diffusion-v1-5/stable-diffusion-v1-5

Depending on how you prepared models in the first step of this demo, they are deployed to either CPU or GPU (it’s defined in config.json). If you run on GPU make sure to have appropriate drivers installed, so the device is accessible for the model server.

ovms --rest_port 8000 ^

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov ^

--model_path ./models/stable-diffusion-v1-5/stable-diffusion-v1-5

NPU or mixed device

To run the generation on an NPU device, add the extra docker parameters --device /dev/accel --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) to the docker run command and use the image with NPU support. Export the models with precision matching the NPU capacity and adjust the pipeline configuration.

In this specific case, we also need to use --device /dev/dri, because we also use GPU.

It can be applied using the commands below:

mkdir -p cache

chmod -R 755 cache

docker run -d --rm -p 8000:8000 \

-v $(pwd)/models:/models:rw \

-v $(pwd)/cache:/cache:rw \

-u $(id -u):$(id -g) \

--device /dev/accel --device /dev/dri --group-add=$(stat -c "%g" /dev/dri/render* | head -n 1) \

openvino/model_server:latest-gpu \

--rest_port 8000 \

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov \

--model_path /models/stable-diffusion-v1-5/stable-diffusion-v1-5

Depending on how you prepared models in the first step of this demo, they are deployed to either CPU or NPU (it’s defined in config.json). If you run on NPU make sure to have appropriate drivers installed, so the device is accessible for the model server.

ovms --rest_port 8000 ^

--model_name OpenVINO/stable-diffusion-v1-5-int8-ov ^

--model_path ./models/stable-diffusion-v1-5/stable-diffusion-v1-5

Readiness Check#

Wait for the model to load. You can check the status with a simple command:

curl http://localhost:8000/v1/config

{

"OpenVINO/stable-diffusion-v1-5-int8-ov" :

{

"model_version_status": [

{

"version": "1",

"state": "AVAILABLE",

"status": {

"error_code": "OK",

"error_message": "OK"

}

}

]

}

}
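The same readiness check can be scripted. The sketch below polls the /v1/config endpoint shown above and looks for a version in the AVAILABLE state, using only the Python standard library. The function names, timeout and polling interval are hypothetical choices, and the response layout is assumed to match the sample output above.

```python
import json
import time
import urllib.request


def is_model_ready(config: dict, model_name: str) -> bool:
    """Return True if any version of model_name reports state AVAILABLE."""
    versions = config.get(model_name, {}).get("model_version_status", [])
    return any(version.get("state") == "AVAILABLE" for version in versions)


def wait_until_ready(model_name: str,
                     url: str = "http://localhost:8000/v1/config",
                     timeout_s: float = 600.0,
                     poll_s: float = 5.0) -> bool:
    """Poll the config endpoint until the model is available or time runs out."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=10) as resp:
                if is_model_ready(json.load(resp), model_name):
                    return True
        except (OSError, ValueError):
            pass  # server not reachable yet, or response was not valid JSON
        time.sleep(poll_s)
    return False


# Example call (requires a running server):
#   wait_until_ready("OpenVINO/stable-diffusion-v1-5-int8-ov")
```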

Request Generation#

A single servable exposes the following endpoints:

text to image: images/generations

image to image: images/edits

Endpoints unsupported for now:

inpainting: images/edits with mask field

All requests are processed in unary format, with no streaming capabilities.
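Because requests are plain unary JSON calls, the text-to-image endpoint can also be reached without any client package. The sketch below builds such a request with the Python standard library. The base URL, model name and request fields mirror the examples in this demo, while the helper name build_generation_request is hypothetical.

```python
import json
import urllib.request


def build_generation_request(prompt: str,
                             model: str = "OpenVINO/stable-diffusion-v1-5-int8-ov",
                             base_url: str = "http://localhost:8000/v3",
                             **extra) -> urllib.request.Request:
    """Build a POST request for the images/generations endpoint."""
    payload = {"model": model, "prompt": prompt, **extra}
    return urllib.request.Request(
        f"{base_url}/images/generations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_generation_request(
    "Three astronauts on the moon, cold color palette, muted colors, detailed, 8k",
    rng_seed=409,
    num_inference_steps=50,
    size="512x512",
)
print(request.get_method(), request.full_url)

# Sending the request requires a running server:
#   with urllib.request.urlopen(request) as resp:
#       body = json.load(resp)
```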

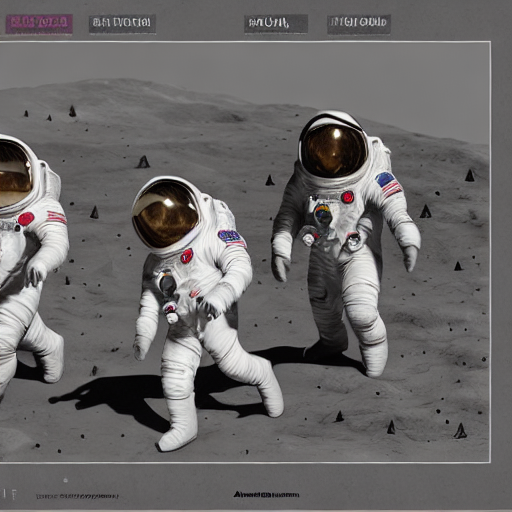

Requesting images/generations API using cURL#

Linux

curl http://localhost:8000/v3/images/generations \

-H "Content-Type: application/json" \

-d '{

"model": "OpenVINO/stable-diffusion-v1-5-int8-ov",

"prompt": "Three astronauts on the moon, cold color palette, muted colors, detailed, 8k",

"rng_seed": 409,

"num_inference_steps": 50,

"size": "512x512"

}'| jq -r '.data[0].b64_json' | base64 --decode > generate_output.png

Windows Powershell

$response = Invoke-WebRequest -Uri "http://localhost:8000/v3/images/generations" `

-Method POST `

-Headers @{ "Content-Type" = "application/json" } `

-Body '{"model": "OpenVINO/stable-diffusion-v1-5-int8-ov", "prompt": "Three astronauts on the moon, cold color palette, muted colors, detailed, 8k", "rng_seed": 409, "num_inference_steps": 50, "size": "512x512"}'

$base64 = ($response.Content | ConvertFrom-Json).data[0].b64_json

[IO.File]::WriteAllBytes('generate_output.png', [Convert]::FromBase64String($base64))

Windows Command Prompt

curl http://localhost:8000/v3/images/generations ^

-H "Content-Type: application/json" ^

-d "{\"model\": \"OpenVINO/stable-diffusion-v1-5-int8-ov\", \"prompt\": \"Three astronauts on the moon, cold color palette, muted colors, detailed, 8k\", \"rng_seed\": 409, \"num_inference_steps\": 50, \"size\": \"512x512\"}"

Expected Response

{

"data": [

{

"b64_json": "..."

}

]

}

The Linux and PowerShell commands save the generated image to generate_output.png; the Command Prompt command prints the JSON response.
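When the raw JSON response is stored rather than piped through jq or PowerShell, the b64_json field can be decoded with a few lines of Python. This is a minimal sketch using only the standard library. It assumes the response layout shown above, and the file name response.json is a hypothetical example.

```python
import base64
import json
from pathlib import Path


def save_first_image(response_text: str, output_path: str) -> int:
    """Decode data[0].b64_json from a response body and write it to output_path.

    Returns the number of bytes written.
    """
    body = json.loads(response_text)
    image_bytes = base64.b64decode(body["data"][0]["b64_json"])
    return Path(output_path).write_bytes(image_bytes)


# Example call, assuming the response was saved to response.json:
#   save_first_image(Path("response.json").read_text(), "generate_output.png")
```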

Requesting image generation with OpenAI Python package#

The image generation/edit endpoints are compatible with the OpenAI client.

Install the client library:

pip3 install openai pillow

from openai import OpenAI

import base64

from io import BytesIO

from PIL import Image

client = OpenAI(

base_url="http://localhost:8000/v3",

api_key="unused"

)

response = client.images.generate(

model="OpenVINO/stable-diffusion-v1-5-int8-ov",

prompt="Three astronauts on the moon, cold color palette, muted colors, detailed, 8k",

extra_body={

"rng_seed": 409,

"size": "512x512",

"num_inference_steps": 50

}

)

base64_image = response.data[0].b64_json

image_data = base64.b64decode(base64_image)

image = Image.open(BytesIO(image_data))

image.save('generate_output.png')

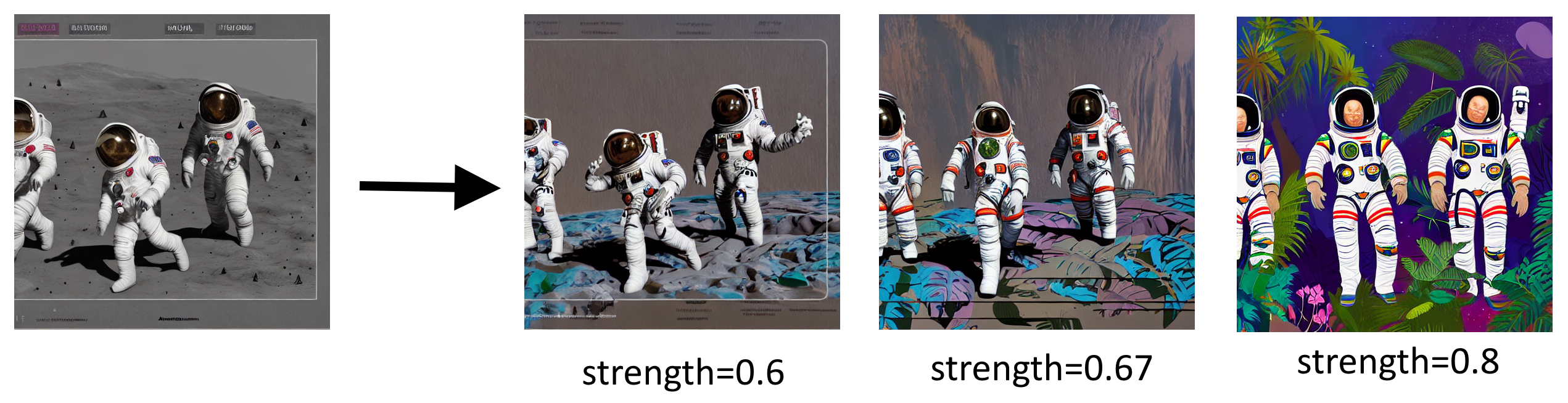

Requesting image edit with OpenAI Python package#

Example changing the previously generated image to: Three astronauts in the jungle, vibrant color palette, live colors, detailed, 8k:

from openai import OpenAI

import base64

from io import BytesIO

from PIL import Image

client = OpenAI(

base_url="http://localhost:8000/v3",

api_key="unused"

)

response = client.images.edit(

model="OpenVINO/stable-diffusion-v1-5-int8-ov",

image=open("generate_output.png", "rb"),

prompt="Three astronauts in the jungle, vibrant color palette, live colors, detailed, 8k",

extra_body={

"rng_seed": 409,

"size": "512x512",

"num_inference_steps": 50,

"strength": 0.67

}

)

base64_image = response.data[0].b64_json

image_data = base64.b64decode(base64_image)

image = Image.open(BytesIO(image_data))

image.save('edit_output.png')

Output file (edit_output.png):

Strength influence on final image#

Please follow the OpenVINO notebook to understand how other parameters affect editing.
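One way to explore the effect of strength is to repeat the edit request with several values while keeping the seed, size and step count fixed. The sketch below only prepares the per-run parameters. The helper name, the chosen strength values and the output file naming are hypothetical.

```python
def strength_sweep(strengths=(0.3, 0.5, 0.7, 0.9),
                   seed: int = 409,
                   steps: int = 50,
                   size: str = "512x512"):
    """Build one (output filename, extra_body) pair per strength value."""
    runs = []
    for strength in strengths:
        extra_body = {
            "rng_seed": seed,  # fixed seed so only strength varies between runs
            "size": size,
            "num_inference_steps": steps,
            "strength": strength,
        }
        runs.append((f"edit_strength_{strength:.2f}.png", extra_body))
    return runs


for filename, extra_body in strength_sweep():
    print(filename, extra_body["strength"])
```

Each extra_body can then be passed to client.images.edit in place of the fixed dictionary used in the edit example, saving every result under its own filename for side-by-side comparison.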