# Obtaining a Stateful OpenVINO Model

If the original framework does not offer a dedicated API for working with states, the resulting OpenVINO IR model will not be stateful by default. This means it will contain neither states nor the Assign and ReadValue operations. You can still make such models stateful (see the benefits of stateful models) in one of three ways:

- Optimum-Intel: an automated solution applicable to a selection of models. It is not covered by this article; for a usage guide, refer to the LLM Inference with Hugging Face and Optimum Intel article.
- MakeStateful transformation: lets you choose which pairs of Parameter and Result operations to replace.
- LowLatency2 transformation: automatically detects and replaces Parameter and Result pairs connected to the hidden and cell state inputs of LSTM/RNN/GRU operations or Loop/TensorIterator operations.

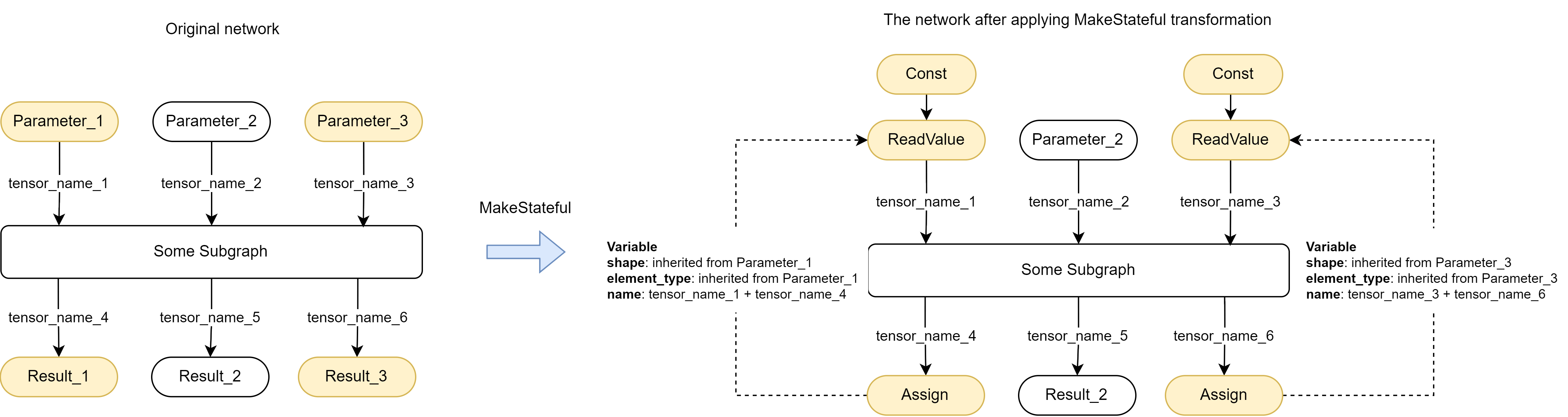

## MakeStateful Transformation

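The MakeStateful transformation changes the structure of the model by replacing user-defined pairs of Parameter and Result operations with ReadValue and Assign operations: each Parameter is replaced with a ReadValue, each Result with an Assign, and both operations of a pair access the same state.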

Only strict syntax is supported when the transformation is specified on the command line. As shown in the example below, the transformation call must be enclosed in double quotes, `"MakeStateful[…]"`, and tensor names in single quotes without spaces, `'tensor_name_1'`.

State naming rule: in most cases, the name of a state is a concatenation of the Parameter/Result tensor names. If there are no tensor names, friendly names are used.
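To make the effect of the replacement concrete, here is a toy sketch in plain Python (not the OpenVINO API). In the stateless form, the caller must feed a Result back into a Parameter on every call; in the stateful form, the same value lives in an internal variable that a ReadValue reads and an Assign writes. The `step` function and both class names are hypothetical illustrations, and the recurrence inside `step` is arbitrary.

```python
from typing import List, Tuple


def step(x: float, h: float) -> Tuple[float, float]:
    """Hypothetical model body: returns (output, new hidden value)."""
    new_h = 0.5 * h + x
    return new_h * 2.0, new_h


class StatelessModel:
    """The hidden value is an extra Parameter (input) and Result (output)."""

    def infer(self, x: float, h: float) -> Tuple[float, float]:
        return step(x, h)


class StatefulModel:
    """The Parameter/Result pair is replaced by ReadValue/Assign on one variable."""

    def __init__(self, initial: float = 0.0) -> None:
        self._initial = initial
        self._variable = initial  # internal memory buffer shared by the pair

    def infer(self, x: float) -> float:
        h = self._variable        # ReadValue: read the stored value
        y, new_h = step(x, h)
        self._variable = new_h    # Assign: write the new value back
        return y

    def reset(self) -> None:
        self._variable = self._initial


def run_stateless(xs: List[float]) -> List[float]:
    model, h, ys = StatelessModel(), 0.0, []
    for x in xs:
        y, h = model.infer(x, h)  # the caller loops the Result back into the Parameter
        ys.append(y)
    return ys


def run_stateful(xs: List[float]) -> List[float]:
    model = StatefulModel()
    return [model.infer(x) for x in xs]


inputs = [1.0, 2.0, 3.0]
assert run_stateless(inputs) == run_stateful(inputs)
```

Both variants produce the same outputs; the stateful one simply moves the bookkeeping from the application into the model.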

Examples:

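```python
import openvino as ov
from openvino.runtime.passes import MakeStateful, Manager

core = ov.Core()
ov_model = core.read_model("path_to_the_model")

# Map each Parameter tensor name to the Result tensor name it is paired with
tensor_names = {"tensor_name_1": "tensor_name_4",
                "tensor_name_3": "tensor_name_6"}

manager = Manager()
manager.register_pass(MakeStateful(tensor_names))
manager.run_passes(ov_model)
```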

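```python
import openvino as ov
from openvino.runtime.passes import MakeStateful, Manager

core = ov.Core()
ov_model = core.read_model("path_to_the_model")

# Parameter_1, Result_1, Parameter_3, Result_3 are the friendly names of
# ops.parameter/ops.result operations in the ov_model
params = {p.get_friendly_name(): p for p in ov_model.get_parameters()}
results = {r.get_friendly_name(): r for r in ov_model.get_results()}
pairs = [(params["Parameter_1"], results["Result_1"]),
         (params["Parameter_3"], results["Result_3"])]

manager = Manager()
manager.register_pass(MakeStateful(pairs))
manager.run_passes(ov_model)
```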

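```cpp
#include <openvino/openvino.hpp>
#include <openvino/pass/make_stateful.hpp>
#include <openvino/pass/manager.hpp>

ov::Core core;
auto ov_model = core.read_model("path_to_the_model");

// Map each Parameter tensor name to the Result tensor name it is paired with
std::map<std::string, std::string> tensor_names = {{"tensor_name_1", "tensor_name_4"},
                                                   {"tensor_name_3", "tensor_name_6"}};

ov::pass::Manager manager;
manager.register_pass<ov::pass::MakeStateful>(tensor_names);
manager.run_passes(ov_model);
```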

```cpp
ov::Core core;
auto ov_model = core.read_model("path_to_the_model");

// Parameter_1, Result_1, Parameter_3, Result_3 are shared_ptr<Parameter/Result> in the ov_model
std::vector<std::pair<std::shared_ptr<ov::opset8::Parameter>, std::shared_ptr<ov::opset8::Result>>> pairs
    = {/* {Parameter_1, Result_1}, {Parameter_3, Result_3} */};

ov::pass::Manager manager;
manager.register_pass<ov::pass::MakeStateful>(pairs);
manager.run_passes(ov_model);
```

```sh
--input_model <INPUT_MODEL> --transform "MakeStateful[param_res_names={'tensor_name_1':'tensor_name_4','tensor_name_3':'tensor_name_6'}]"
```
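Because the command-line form accepts only the strict syntax, it can help to generate the argument rather than type it by hand. The following is a minimal sketch; `make_stateful_transform_arg` is a hypothetical helper that only builds a string and does not call any OpenVINO tool. Rejecting names that contain a single quote is an assumption made here so that the quoting cannot break.

```python
from typing import Dict


def make_stateful_transform_arg(param_res_names: Dict[str, str]) -> str:
    """Build the MakeStateful[...] value for --transform in the strict syntax.

    Tensor names are wrapped in single quotes and no spaces are emitted,
    matching the command-line example above.
    """
    for name in list(param_res_names) + list(param_res_names.values()):
        if "'" in name:
            raise ValueError(f"tensor name cannot contain a single quote: {name!r}")
    pairs = ",".join(f"'{p}':'{r}'" for p, r in param_res_names.items())
    return "MakeStateful[param_res_names={" + pairs + "}]"


arg = make_stateful_transform_arg({"tensor_name_1": "tensor_name_4",
                                   "tensor_name_3": "tensor_name_6"})
# The whole value still has to be enclosed in double quotes on the shell command line.
print(f'--transform "{arg}"')
```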

## LowLatency2 Transformation

The LowLatency2 transformation changes the structure of a model containing TensorIterator or Loop operations by automatically detecting pairs of Parameter and Result operations and replacing them with ReadValue and Assign operations, as illustrated by the following example:

After the transformation is applied, ReadValue operations can receive other operations as input, as shown in the picture above. These inputs are meant to provide the initial values of the ReadValue operations. However, such initialization is not supported in the current State API implementation: the input values are ignored, and the initial values of the ReadValue operations are set to zeros unless the user specifies otherwise via the State API.
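The reasoning behind LowLatency2 can be checked on a toy scalar model: looping over a whole sequence in one call gives the same results as feeding one element per call while a zero-initialized state carries the hidden value between calls. This is plain Python, not OpenVINO code; `cell`, `tensor_iterator`, and `LowLatencyModel` are hypothetical names, and the recurrence is arbitrary.

```python
from typing import List


def cell(x: float, h: float) -> float:
    """Hypothetical recurrent cell body: new hidden state from input and old state."""
    return 0.5 * h + x


def tensor_iterator(sequence: List[float], h0: float = 0.0) -> List[float]:
    """Original model: one call iterates over the whole sequence."""
    h, outputs = h0, []
    for x in sequence:
        h = cell(x, h)
        outputs.append(h)
    return outputs


class LowLatencyModel:
    """Toy view of the model after reshaping to one iteration and applying LowLatency2."""

    def __init__(self) -> None:
        self.state = 0.0  # initial ReadValue value: zeros unless set via the State API

    def infer(self, x: float) -> float:
        h = cell(x, self.state)  # ReadValue feeds the stored state into the body
        self.state = h           # Assign stores the new state for the next call
        return h


seq = [1.0, 2.0, 3.0, 4.0]
model = LowLatencyModel()
streamed = [model.infer(x) for x in seq]  # one sequence element per inference call
assert streamed == tensor_iterator(seq)
```

The equivalence holds only because the toy state starts at zero, matching the default initialization described above; a non-zero initial state would have to be set explicitly.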

To apply the LowLatency2 transformation, follow the steps below:

1. Get `ov::Model`, for example:

   ```python
   import openvino as ov

   core = ov.Core()
   ov_model = core.read_model("path_to_the_model")
   ```

   ```cpp
   ov::Core core;
   auto ov_model = core.read_model("path_to_the_model");
   ```

2. Change the number of iterations inside TensorIterator/Loop nodes in the model using the Reshape feature.

   For example, if the `sequence_lengths` dimension of the model input is greater than 1, the TensorIterator layer has `number_of_iterations` > 1. You can reshape the model inputs to set the sequence dimension to exactly 1.

   ```python
   ov_model.reshape({"X": ov.PartialShape([1, 1, 16])})
   ```

   ```cpp
   ov_model->reshape({{"X", ov::Shape({1, 1, 16})}});
   ```

   Unrolling: if the LowLatency2 transformation is applied to a model containing TensorIterator/Loop nodes with exactly one iteration inside, these nodes are unrolled; otherwise, they remain as they are. See the picture above for more details.

3. Apply the LowLatency2 transformation.

   ```python
   from openvino.runtime.passes import LowLatency2, Manager

   manager = Manager()
   manager.register_pass(LowLatency2())
   manager.run_passes(ov_model)
   ```

   ```cpp
   ov::pass::Manager manager;
   manager.register_pass<ov::pass::LowLatency2>();
   manager.run_passes(ov_model);
   ```

   (Optional) Use the `use_const_initializer` argument:

   By default, the LowLatency2 transformation inserts a constant subgraph of the same shape as the previous input node, and the initializing value for ReadValue nodes is set to zero. For more information, see the picture below. You can disable the insertion of this subgraph by setting the `use_const_initializer` argument to `false`:

   ```python
   manager.register_pass(LowLatency2(False))
   ```

   ```cpp
   manager.register_pass<ov::pass::LowLatency2>(false);
   ```

   State naming rule: the name of a state is a concatenation of several names: the original TensorIterator operation, the parameter of the body, and an additional suffix `"variable_" + id` (zero-based indexing, restarted for each TensorIterator). You can use these rules to predict the name of the inserted state after applying the transformation. For example:

   ```python
   import openvino as ov
   from openvino.runtime.passes import LowLatency2, Manager

   # Precondition for the model:
   # the TensorIterator and the Parameter in its body are created with these names
   tensor_iterator_name = "TI_name"
   body_parameter_name = "body_parameter_name"
   idx = "0"  # this is the first variable in the model
   # The state will be named "TI_name/body_parameter_name/variable_0"
   state_name = tensor_iterator_name + "/" + body_parameter_name + "/" + "variable_" + idx

   core = ov.Core()
   ov_model = core.read_model("path_to_the_model")
   # Reshape the input if needed
   ov_model.reshape({"X": ov.PartialShape([1, 1, 16])})

   manager = Manager()
   manager.register_pass(LowLatency2())
   manager.run_passes(ov_model)

   compiled_model = core.compile_model(ov_model)
   # Try to find the variable by name
   infer_request = compiled_model.create_infer_request()
   states = infer_request.query_state()
   for state in states:
       name = state.get_name()
       if name == state_name:
           pass  # some actions
   ```

   ```cpp
   // Precondition for ov::Model:
   // the TensorIterator and the Parameter in its body are created with these names
   std::string tensor_iterator_name = "TI_name";
   std::string body_parameter_name = "body_parameter_name";
   std::string idx = "0";  // this is the first variable in the model
   // The state will be named "TI_name/body_parameter_name/variable_0"
   auto state_name = tensor_iterator_name + "/" + body_parameter_name + "/" + "variable_" + idx;

   ov::Core core;
   auto ov_model = core.read_model("path_to_the_model");
   // Reshape the input if needed
   ov_model->reshape({{"X", ov::Shape({1, 1, 16})}});

   ov::pass::Manager manager;
   manager.register_pass<ov::pass::LowLatency2>();
   manager.run_passes(ov_model);

   auto compiled_model = core.compile_model(ov_model);
   // Try to find the variable by name
   auto infer_request = compiled_model.create_infer_request();
   auto states = infer_request.query_state();
   for (auto& state : states) {
       auto name = state.get_name();
       if (name == state_name) {
           // some actions
       }
   }
   ```

4. Use the State API. See the OpenVINO State API and Stateful Model Inference sections.
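The naming rule can be turned into a small helper that predicts state names for a whole model before it is compiled. This is a sketch under two assumptions: the single `/` separator shown in the example comment above, and that ids within one TensorIterator follow the order in which you list its body parameters (the order the transformation actually uses is not stated here). `low_latency2_state_names` is a hypothetical name, not an OpenVINO function.

```python
from typing import Dict, List


def low_latency2_state_names(body_params: Dict[str, List[str]]) -> List[str]:
    """Predict state names as <TensorIterator name>/<body Parameter name>/variable_<id>.

    body_params maps each TensorIterator name to the names of its body Parameters
    that become states. Ids are zero-based and restart for every TensorIterator.
    """
    names = []
    for ti_name, params in body_params.items():
        for idx, param_name in enumerate(params):
            names.append(f"{ti_name}/{param_name}/variable_{idx}")
    return names


# Two hypothetical TensorIterators: the id counter restarts for the second one.
print(low_latency2_state_names({"TI_a": ["h", "c"], "TI_b": ["h"]}))
```

The predicted names can then be compared with the names returned by `query_state()` on an infer request, as in the example above.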

The only way to change the number of iterations of a TensorIterator/Loop layer is to use the Reshape feature. However, some models may be non-reshapable, typically because the values of shapes are hardcoded in a constant somewhere in the model.

In such a case, trim the non-reshapable layers using the `--input` and `--output` conversion parameters; for an example, check the OpenVINO Model Conversion Tutorial. The parameter and the problematic constant in the picture above can be trimmed using the `--input Reshape_layer_name` command-line option. The problematic constant can also be replaced using OpenVINO, as shown in the following example:

```python
import numpy as np
import openvino as ov
from openvino.runtime import opset8 as ops
from openvino.runtime.utils import replace_node

# How to replace a Constant with hardcoded shape values in the model with a new one.
# Assume we know which Constant (const_with_hardcoded_shape) prevents the reshape from being applied.
# Then we can find this Constant by name in the model and replace it with a new one with the correct shape.
core = ov.Core()
model = core.read_model("path_to_model")

# Create the new Constant with the correct values.
# For the example shown in the picture above, the new values of the Constant should be
# 1, 1, 10 instead of 1, 49, 10 (the int64 element type is an assumption).
new_const = ops.constant(np.array([1, 1, 10], dtype=np.int64))

for node in model.get_ops():
    # Try to find the problematic Constant by name.
    if node.get_friendly_name() != "name_of_non_reshapable_const":
        continue
    # Replace the problematic Constant with the new one. Do this for all the problematic
    # Constants in the model, then you can apply the reshape feature.
    replace_node(node, new_const)
```

```cpp
// How to replace a Constant with hardcoded shape values in the model with a new one.
// Assume we know which Constant (const_with_hardcoded_shape) prevents the reshape from being applied.
// Then we can find this Constant by name in the model and replace it with a new one with the correct shape.
ov::Core core;
auto model = core.read_model("path_to_model");

// Create the new Constant with the correct values.
// For the example shown in the picture above, the new values of the Constant should be
// 1, 1, 10 instead of 1, 49, 10 (the i64 element type is an assumption).
auto new_const = ov::opset8::Constant::create(ov::element::i64, ov::Shape{3}, {1, 1, 10});

for (const auto& node : model->get_ops()) {
    // Try to find the problematic Constant by name.
    if (node->get_friendly_name() == "name_of_non_reshapable_const") {
        auto const_with_hardcoded_shape = std::dynamic_pointer_cast<ov::opset8::Constant>(node);
        // Replace the problematic Constant with the new one. Do this for all the problematic
        // Constants in the model, then you can apply the reshape feature.
        ov::replace_node(const_with_hardcoded_shape, new_const);
    }
}
```
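Why a hardcoded Constant blocks the reshape comes down to element counts: a Reshape with a fixed target shape only succeeds when the input has exactly as many elements as the target. The sketch below assumes, for illustration only, that the Constant with values 1, 49, 10 from the example above is the target-shape input of a Reshape whose data input has shape [1, 49, 10] before the sequence length is reduced to 1, and that the target contains no special values such as 0 or -1.

```python
from math import prod
from typing import Sequence


def reshape_is_valid(input_shape: Sequence[int], target_shape: Sequence[int]) -> bool:
    """A Reshape with a fixed target shape works only if element counts match."""
    return prod(input_shape) == prod(target_shape)


hardcoded_target = [1, 49, 10]  # values stored in the problematic Constant
original_input = [1, 49, 10]    # hypothetical input with sequence length 49
reshaped_input = [1, 1, 10]     # the same input after reshaping the sequence length to 1

assert reshape_is_valid(original_input, hardcoded_target)
assert not reshape_is_valid(reshaped_input, hardcoded_target)  # 10 vs 490 elements
assert reshape_is_valid(reshaped_input, [1, 1, 10])            # after replacing the Constant
```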

## Stateful Model from Scratch

The main approach to obtaining stateful OpenVINO IR models is converting from other frameworks. Nonetheless, it is possible to create a model from scratch. Check how to do so in the Build OpenVINO Model section.

Here is also an example of how ov::SinkVector is used to create ov::Model. In a model with states, the Model must reference the Assign nodes in addition to its inputs and outputs; otherwise, they could be deleted during graph transformations. You can register them with the constructor, as in the example, or with the add_sinks(const SinkVector& sinks) method. You can also delete a sink from ov::Model with the delete_sink() method after deleting the node from the graph.

```python
import numpy as np
import openvino as ov
from openvino.runtime import opset13 as ops
from openvino.runtime.op.util import Variable, VariableInfo

input = ops.parameter([1, 1], dtype=np.float32, name="data")
init_const = ops.constant([[0]], dtype=np.float32)

# Typically ReadValue/Assign operations are presented as pairs in models.
# ReadValue operation reads information from an internal memory buffer, Assign operation writes data to this buffer.
# For each pair, its own Variable object must be created.
# Variable defines name, shape and type of the buffer.
var_info = VariableInfo()
var_info.data_shape = init_const.get_shape()
var_info.data_type = init_const.get_element_type()
var_info.variable_id = "variable0"
variable = Variable(var_info)

# Creating Model
read = ops.read_value(init_const, variable)
add = ops.add(input, read)
assign = ops.assign(add, variable)
result = ops.result(add)

model = ov.Model(results=[result], sinks=[assign], parameters=[input], name="model")
```

```cpp
// ...

auto input = std::make_shared<ov::opset8::Parameter>(ov::element::f32, ov::Shape{1, 1});
auto init_const = ov::opset8::Constant::create(ov::element::f32, ov::Shape{1, 1}, {0});

// Typically ReadValue/Assign operations are presented as pairs in models.
// ReadValue operation reads information from an internal memory buffer, Assign operation writes data to this buffer.
// For each pair, its own Variable object must be created.
// Variable defines name, shape and type of the buffer.
const std::string variable_name("variable0");
ov::op::util::VariableInfo var_info = {init_const->get_shape(),
                                       init_const->get_element_type(),
                                       variable_name};
auto variable = std::make_shared<ov::op::util::Variable>(var_info);

// Creating ov::Model
auto read = std::make_shared<ov::opset8::ReadValue>(init_const, variable);
auto add = std::make_shared<ov::opset8::Add>(input, read);
auto save = std::make_shared<ov::opset8::Assign>(add, variable);
auto result = std::make_shared<ov::opset8::Result>(add);

auto model = std::make_shared<ov::Model>(ov::ResultVector({result}),
                                         ov::SinkVector({save}),
                                         ov::ParameterVector({input}));
```
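The reason Assign nodes must be registered as sinks can be seen with a toy graph walk. An Assign has no consumers, so a traversal that starts only from the model's Results never reaches it, and a cleanup pass built on such a traversal would treat it as dead code. This sketch is plain Python, not OpenVINO's implementation; it assumes, for illustration, that a pass keeps only the nodes reachable from the roots it is given. The node names mirror the example above.

```python
from typing import Dict, List, Set

# Toy graph: node name -> names of its input nodes (mirrors the example above).
graph: Dict[str, List[str]] = {
    "data": [],
    "init_const": [],
    "read": ["init_const"],
    "add": ["data", "read"],
    "assign": ["add"],
    "result": ["add"],
}


def reachable(roots: List[str]) -> Set[str]:
    """Collect every node reachable by walking input edges backwards from the roots."""
    seen: Set[str] = set()
    stack = list(roots)
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph[node])
    return seen


from_results_only = reachable(["result"])
from_results_and_sinks = reachable(["result", "assign"])

assert "assign" not in from_results_only   # no consumers, so it looks like dead code
assert "assign" in from_results_and_sinks  # registering it as a sink keeps it alive
```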

Note

- ONNX and frameworks supported via the ONNX format: original LSTM, RNN, and GRU layers are converted to GRU/RNN/LSTM Sequence operations. The ONNX Loop layer is converted to the OpenVINO Loop operation.
- TensorFlow: BlockLSTM is converted to a TensorIterator operation whose body contains an LSTMCell operation. Modifications such as Peepholes and InputForget are not supported. The While layer is converted to a TensorIterator, whose body can contain any supported operations. However, dynamic cases where the number of iterations cannot be calculated during shape inference are not supported.
- TensorFlow2: the While layer is converted to a Loop operation. The Loop body can contain any supported operations.