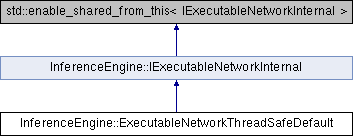

This class provides an optimal thread-safe default implementation. It is recommended as the base class for an Executable Network implementation during plugin development.

#include <ie_executable_network_thread_safe_default.hpp>
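The recommended usage as a base class can be sketched as follows. This is a minimal, self-contained illustration that does not use the real Inference Engine headers: `SyncRequestSketch`, `AsyncRequestSketch`, `ThreadSafeDefaultSketch`, `MyPluginSyncRequest` and `MyPluginNetwork` are hypothetical stand-ins. It only shows the division of work: the base class supplies `CreateInferRequest()`, and the plugin overrides a protected factory for its synchronous request (modelled on `CreateInferRequestImpl`).

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical stand-in for a plugin's synchronous inference request.
struct SyncRequestSketch {
    virtual ~SyncRequestSketch() = default;
    virtual void Infer() = 0;
};

// Hypothetical stand-in for the asynchronous wrapper. To stay short, this
// sketch runs the wrapped synchronous request on the calling thread.
struct AsyncRequestSketch {
    explicit AsyncRequestSketch(std::shared_ptr<SyncRequestSketch> sync)
        : sync_(std::move(sync)) {
        assert(sync_ != nullptr);
    }
    void StartAndWait() { sync_->Infer(); }
    std::shared_ptr<SyncRequestSketch> sync_;
};

// Hypothetical stand-in for the base class: it owns the public factory,
// so derived plugin classes do not have to implement it.
class ThreadSafeDefaultSketch {
public:
    virtual ~ThreadSafeDefaultSketch() = default;
    std::shared_ptr<AsyncRequestSketch> CreateInferRequest() {
        return std::make_shared<AsyncRequestSketch>(CreateInferRequestImpl());
    }

protected:
    // The only hook a plugin must provide in this sketch.
    virtual std::shared_ptr<SyncRequestSketch> CreateInferRequestImpl() = 0;
};

// Hypothetical plugin code: a synchronous request that counts its runs.
struct MyPluginSyncRequest : SyncRequestSketch {
    explicit MyPluginSyncRequest(int* counter) : counter_(counter) {}
    void Infer() override { ++*counter_; }  // pretend to run the model
    int* counter_;
};

class MyPluginNetwork : public ThreadSafeDefaultSketch {
public:
    int inferCount = 0;

protected:
    std::shared_ptr<SyncRequestSketch> CreateInferRequestImpl() override {
        return std::make_shared<MyPluginSyncRequest>(&inferCount);
    }
};
```

With this shape, `MyPluginNetwork` never touches request wrapping: calling `net.CreateInferRequest()->StartAndWait()` runs the plugin's `Infer()` through the base-class factory.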

Public Types

| Type | Name | Description |
|---|---|---|
| typedef std::shared_ptr< ExecutableNetworkThreadSafeDefault > | Ptr | A shared pointer to an ExecutableNetworkThreadSafeDefault object. |

Public Types inherited from InferenceEngine::IExecutableNetworkInternal

| Type | Name | Description |
|---|---|---|
| using | Ptr = std::shared_ptr< IExecutableNetworkInternal > | A shared pointer to the IExecutableNetworkInternal interface. |

Public Member Functions

| Type | Name | Description |
|---|---|---|
| | ExecutableNetworkThreadSafeDefault (const ITaskExecutor::Ptr &taskExecutor=std::make_shared< CPUStreamsExecutor >(IStreamsExecutor::Config{"Default"}), const ITaskExecutor::Ptr &callbackExecutor=std::make_shared< CPUStreamsExecutor >(IStreamsExecutor::Config{"Callback"})) | Constructs a new instance. |
| IInferRequestInternal::Ptr | CreateInferRequest () override | Provides an optional default implementation for creating an asynchronous inference request, so that a plugin does not need to implement it. |

Public Member Functions inherited from InferenceEngine::IExecutableNetworkInternal

| Type | Name | Description |
|---|---|---|
| virtual void | setNetworkInputs (const InputsDataMap &networkInputs) | Sets the network inputs info. |
| virtual void | setNetworkOutputs (const OutputsDataMap &networkOutputs) | Sets the network outputs data. |
| virtual ConstOutputsDataMap | GetOutputsInfo () const | Gets the executable network output Data node information, returned as a ConstOutputsDataMap. |
| virtual ConstInputsDataMap | GetInputsInfo () const | Gets the executable network input Data node information, returned as a ConstInputsDataMap. |
| virtual void | Export (const std::string &modelFileName) | Exports the current executable network to a file so that it can be used later with the Import() main API. |
| virtual void | Export (std::ostream &networkModel) | Exports the current executable network to a stream so that it can be used later with the Import() main API. |
| virtual CNNNetwork | GetExecGraphInfo () | Gets executable graph information from a device. |
| virtual std::vector< std::shared_ptr< IVariableStateInternal > > | QueryState () | Queries memory states. |
| virtual void | SetPointerToPlugin (const std::shared_ptr< IInferencePlugin > &plugin) | Sets the pointer to the internal plugin interface. |
| virtual void | SetConfig (const std::map< std::string, Parameter > &config) | Sets the configuration for the current executable network. |
| virtual Parameter | GetConfig (const std::string &name) const | Gets a configuration value dedicated to plugin behaviour. |
| virtual Parameter | GetMetric (const std::string &name) const | Gets a general runtime metric for dedicated hardware. |
| virtual std::shared_ptr< RemoteContext > | GetContext () const | Gets the remote context. |
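The two `Export()` overloads can share one serialization path. A common pattern, assumed here rather than confirmed by this page, is for the file-name overload to open a binary `std::ofstream` and forward to the stream overload, so that a plugin only needs to serialize to a `std::ostream`. `ExportableSketch` and its one-line payload are hypothetical placeholders, not the real blob format.

```cpp
#include <cassert>
#include <fstream>
#include <ios>
#include <ostream>
#include <stdexcept>
#include <string>

// Hypothetical stand-in showing only the two Export() overloads.
class ExportableSketch {
public:
    virtual ~ExportableSketch() = default;

    // File overload: open the file in binary mode and delegate to the
    // stream overload, so there is a single serialization routine.
    virtual void Export(const std::string& modelFileName) {
        std::ofstream modelFile(modelFileName, std::ios::out | std::ios::binary);
        if (!modelFile.is_open()) {
            throw std::runtime_error("cannot open " + modelFileName + " for export");
        }
        Export(modelFile);  // binds to std::ostream&, dispatched virtually
    }

    // Stream overload: the part a plugin would actually implement.
    // The payload written here is a made-up placeholder.
    virtual void Export(std::ostream& networkModel) {
        networkModel << "SKETCH-BLOB v1\n";
        assert(networkModel.good());
    }
};
```

One C++ detail to keep in mind with this design: if a derived class overrides only the stream overload, name hiding makes the file overload invisible through the derived type unless the class adds `using ExportableSketch::Export;`.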

Protected Member Functions

| Type | Name | Description |
|---|---|---|
| template<typename AsyncInferRequestType = AsyncInferRequestThreadSafeDefault> IInferRequestInternal::Ptr | CreateAsyncInferRequestFromSync () | Creates an asynchronous inference request from the synchronous request returned by CreateInferRequestImpl. |

Protected Member Functions inherited from InferenceEngine::IExecutableNetworkInternal

| Type | Name | Description |
|---|---|---|
| virtual std::shared_ptr< IInferRequestInternal > | CreateInferRequestImpl (InputsDataMap networkInputs, OutputsDataMap networkOutputs) | Creates an inference request internal implementation. |

Protected Attributes

| Type | Name | Description |
|---|---|---|
| ITaskExecutor::Ptr | _taskExecutor = nullptr | Holds a task executor. |
| ITaskExecutor::Ptr | _callbackExecutor = nullptr | Holds a callback executor. |
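The split between a task executor and a callback executor can be illustrated with a small sketch: inference work is posted to one executor, and the user callback is posted to the other once that work finishes. One plausible benefit, in this sketch at least, is that a slow callback does not occupy the executor that runs inference. `TaskExecutorSketch`, `SerialExecutor` and `RunAsync` are hypothetical; the real executors (such as `CPUStreamsExecutor`) and `ITaskExecutor` are not used here.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical minimal executor interface (stand-in for ITaskExecutor).
struct TaskExecutorSketch {
    virtual ~TaskExecutorSketch() = default;
    virtual void run(std::function<void()> task) = 0;
};

// Runs posted tasks one at a time, in order, on a single worker thread.
class SerialExecutor final : public TaskExecutorSketch {
public:
    SerialExecutor() : worker_([this] { Loop(); }) {}
    ~SerialExecutor() override {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        cv_.notify_one();
        worker_.join();  // the worker drains remaining tasks before exiting
    }
    void run(std::function<void()> task) override {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    void Loop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                cv_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
                if (tasks_.empty()) return;  // stopping and nothing left to do
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();  // run outside the lock
        }
    }
    std::mutex mutex_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    bool stopping_ = false;
    std::thread worker_;  // declared last so it starts after the members above
};

// Posts `infer` to the task executor; when it finishes, posts `callback`
// to the callback executor.
void RunAsync(const std::shared_ptr<TaskExecutorSketch>& taskExecutor,
              const std::shared_ptr<TaskExecutorSketch>& callbackExecutor,
              std::function<void()> infer, std::function<void()> callback) {
    assert(taskExecutor && callbackExecutor);
    taskExecutor->run([callbackExecutor, infer = std::move(infer),
                       callback = std::move(callback)] {
        infer();
        callbackExecutor->run(callback);
    });
}
```

Because `~SerialExecutor()` drains its queue before joining, releasing the task executor first and the callback executor second is enough to wait for both stages in this sketch.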

Protected Attributes inherited from InferenceEngine::IExecutableNetworkInternal

| Type | Name | Description |
|---|---|---|
| InferenceEngine::InputsDataMap | _networkInputs | Holds information about the network inputs. |
| InferenceEngine::OutputsDataMap | _networkOutputs | Holds information about the network outputs. |
| std::shared_ptr< IInferencePlugin > | _plugin | A pointer to an IInferencePlugin interface. |
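The protected `_networkInputs` and `_networkOutputs` maps are mutable, while `GetInputsInfo()` and `GetOutputsInfo()` return `ConstInputsDataMap` and `ConstOutputsDataMap`. A minimal sketch of one way to produce such a read-only view, assuming the const maps hold `std::shared_ptr` to const elements, is to copy the map while converting each `std::shared_ptr<T>` into a `std::shared_ptr<const T>`. `DataSketch`, `DataMapSketch`, `ConstDataMapSketch` and `MakeConstView` are hypothetical names, not the real `Data` or `InputInfo` types.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-in for a Data / InputInfo node.
struct DataSketch {
    std::string precision;
};

using DataMapSketch = std::map<std::string, std::shared_ptr<DataSketch>>;
using ConstDataMapSketch = std::map<std::string, std::shared_ptr<const DataSketch>>;

// Copies the map. Each entry still points at the same shared node, but the
// returned map only exposes it through a pointer-to-const.
ConstDataMapSketch MakeConstView(const DataMapSketch& source) {
    ConstDataMapSketch view;
    for (const auto& entry : source) {
        view.emplace(entry.first, entry.second);  // shared_ptr<T> -> shared_ptr<const T>
    }
    return view;
}
```

Note that this is a shallow copy: callers cannot modify nodes through the view, but later changes made through the original map remain visible through it.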


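ExecutableNetworkThreadSafeDefault() [inline, explicit]

Constructs a new instance.

| Direction | Parameter | Description |
|---|---|---|
| [in] | taskExecutor | The task executor to use. Defaults to a CPUStreamsExecutor created with IStreamsExecutor::Config{"Default"}. |
| [in] | callbackExecutor | The callback executor. Defaults to a CPUStreamsExecutor created with IStreamsExecutor::Config{"Callback"}. |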
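Both constructor parameters default to a `std::make_shared` call, and C++ evaluates a default-argument expression anew on every call that uses it. So, under that reading of the signature, each instance constructed without arguments gets its own executors, while passing the same pointer to several instances shares one executor between them. The sketch below checks this with hypothetical `NamedExecutor` and `NetworkSketch` types in place of `CPUStreamsExecutor` and `IStreamsExecutor::Config`; the members are public only so the sketch can inspect them.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an executor created from a named config.
struct NamedExecutor {
    explicit NamedExecutor(std::string n) : name(std::move(n)) {}
    std::string name;
};

// Hypothetical stand-in mirroring the constructor's shape: both executors
// have defaults that are built at each construction that omits them.
class NetworkSketch {
public:
    using ExecutorPtr = std::shared_ptr<NamedExecutor>;

    explicit NetworkSketch(
        const ExecutorPtr& taskExecutor = std::make_shared<NamedExecutor>("Default"),
        const ExecutorPtr& callbackExecutor = std::make_shared<NamedExecutor>("Callback"))
        : _taskExecutor(taskExecutor), _callbackExecutor(callbackExecutor) {
        assert(_taskExecutor && _callbackExecutor);
    }

    // Public here only for inspection; protected in the real class.
    ExecutorPtr _taskExecutor;
    ExecutorPtr _callbackExecutor;
};
```

For example, `NetworkSketch c(shared); NetworkSketch d(shared);` gives `c` and `d` the same task executor but separate default callback executors.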

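CreateAsyncInferRequestFromSync() [inline, protected]

Creates an asynchronous inference request from the synchronous request returned by CreateInferRequestImpl.

| Template parameter | Description |
|---|---|
| AsyncInferRequestType | The type of asynchronous inference request used as a wrapper for the synchronous request. Defaults to AsyncInferRequestThreadSafeDefault. |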
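The template described above can be sketched in simplified form: it asks the derived class for a synchronous request, then wraps it in `AsyncInferRequestType`, handing over the network's task and callback executors. All types here (`ExecutorSketch`, `SyncSketch`, `DefaultAsyncSketch`, `CustomAsyncSketch`, `NetworkBaseSketch`, `CustomNetworkSketch`) are hypothetical stand-ins; the real `CreateInferRequestImpl` also takes the network input and output maps, which are omitted. The point is only how the template parameter selects the wrapper type.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct ExecutorSketch {};  // hypothetical stand-in for ITaskExecutor
struct SyncSketch {        // hypothetical synchronous request
    virtual ~SyncSketch() = default;
};

// Default wrapper: stores the sync request and both executors.
struct DefaultAsyncSketch {
    DefaultAsyncSketch(std::shared_ptr<SyncSketch> sync,
                       std::shared_ptr<ExecutorSketch> taskExecutor,
                       std::shared_ptr<ExecutorSketch> callbackExecutor)
        : sync_(std::move(sync)), task_(std::move(taskExecutor)),
          callback_(std::move(callbackExecutor)) {}
    virtual ~DefaultAsyncSketch() = default;
    virtual std::string Kind() const { return "default"; }
    std::shared_ptr<SyncSketch> sync_;
    std::shared_ptr<ExecutorSketch> task_;
    std::shared_ptr<ExecutorSketch> callback_;
};

// A plugin-specific wrapper that reuses the same constructor signature.
struct CustomAsyncSketch : DefaultAsyncSketch {
    using DefaultAsyncSketch::DefaultAsyncSketch;
    std::string Kind() const override { return "custom"; }
};

class NetworkBaseSketch {
public:
    virtual ~NetworkBaseSketch() = default;
    // Mirrors the default CreateInferRequest(): use the default wrapper type.
    virtual std::shared_ptr<DefaultAsyncSketch> CreateInferRequest() {
        return CreateAsyncInferRequestFromSync();
    }

protected:
    template <typename AsyncInferRequestType = DefaultAsyncSketch>
    std::shared_ptr<DefaultAsyncSketch> CreateAsyncInferRequestFromSync() {
        auto sync = CreateInferRequestImpl();  // supplied by the derived class
        assert(sync != nullptr);
        return std::make_shared<AsyncInferRequestType>(sync, _taskExecutor, _callbackExecutor);
    }
    virtual std::shared_ptr<SyncSketch> CreateInferRequestImpl() {
        return std::make_shared<SyncSketch>();
    }
    std::shared_ptr<ExecutorSketch> _taskExecutor = std::make_shared<ExecutorSketch>();
    std::shared_ptr<ExecutorSketch> _callbackExecutor = std::make_shared<ExecutorSketch>();
};

// Hypothetical plugin network that opts into its own wrapper type.
class CustomNetworkSketch : public NetworkBaseSketch {
public:
    std::shared_ptr<DefaultAsyncSketch> CreateInferRequest() override {
        return CreateAsyncInferRequestFromSync<CustomAsyncSketch>();
    }
};
```

In this sketch a plugin that is happy with the default wrapper overrides nothing, while one that needs its own asynchronous request type changes a single template argument.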

CreateInferRequest() [inline, override, virtual]

Provides an optional default implementation for creating an asynchronous inference request, so that a plugin does not need to implement it.

Implements InferenceEngine::IExecutableNetworkInternal.