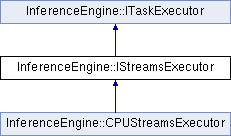

Interface for Streams Task Executor. This executor groups worker threads into so-called streams.

#include <ie_istreams_executor.hpp>

Data Structures | |

| struct | Config |

| Defines IStreamsExecutor configuration. | |

Public Types | |

| enum | ThreadBindingType : std::uint8_t { NONE, CORES, NUMA } |

| Defines thread binding type. | |

| using | Ptr = std::shared_ptr< IStreamsExecutor > |

Public Types inherited from InferenceEngine::ITaskExecutor | |

| using | Ptr = std::shared_ptr< ITaskExecutor > |

Public Member Functions | |

| ~IStreamsExecutor () override | |

| A virtual destructor. | |

| virtual int | GetStreamId ()=0 |

| Return the index of the current stream. | |

| virtual int | GetNumaNodeId ()=0 |

| Return the ID of the current NUMA node. | |

| virtual void | Execute (Task task)=0 |

| Execute the task in the current thread using streams executor configuration and constraints. | |

Public Member Functions inherited from InferenceEngine::ITaskExecutor | |

| virtual | ~ITaskExecutor ()=default |

| Destroys the object. | |

| virtual void | run (Task task)=0 |

| Execute InferenceEngine::Task inside task executor context. | |

| virtual void | runAndWait (const std::vector< Task > &tasks) |

| Execute all of the tasks and wait for their completion. The default runAndWait() implementation uses the pure virtual run() method and higher-level synchronization primitives from the STL. Each task is wrapped in a std::packaged_task, which returns a std::future. The std::packaged_task calls the task and signals to the std::future that the task has finished or that an exception was thrown from the task. The std::future is then used to wait for task completion and to extract any task exception. | |
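The default runAndWait() behavior described above can be sketched as follows. This is a minimal, self-contained illustration rather than the library's actual source: the `sketch` namespace, the `TaskExecutor` and `InlineExecutor` classes, and the `Task` alias (assumed here to be `std::function<void()>`) are hypothetical stand-ins for the real InferenceEngine types.

```cpp
#include <functional>
#include <future>
#include <memory>
#include <vector>

namespace sketch {

// Assumption: a task is a callable taking no arguments and returning nothing.
using Task = std::function<void()>;

// Hypothetical mirror of the ITaskExecutor interface, for illustration only.
class TaskExecutor {
public:
    virtual ~TaskExecutor() = default;

    // Derived classes decide where and when the task actually runs.
    virtual void run(Task task) = 0;

    // Submits every task through run() and blocks until all have finished.
    // The first stored exception (in submission order) is rethrown.
    virtual void runAndWait(const std::vector<Task>& tasks) {
        std::vector<std::future<void>> futures;
        futures.reserve(tasks.size());
        for (const auto& task : tasks) {
            // std::packaged_task is move-only, while std::function requires a
            // copyable callable, so the packaged task is held by shared_ptr.
            auto packaged = std::make_shared<std::packaged_task<void()>>(task);
            futures.push_back(packaged->get_future());
            run([packaged] { (*packaged)(); });
        }
        // Wait for everything first so no task is still running on return.
        for (auto& future : futures) future.wait();
        // get() rethrows an exception captured by the packaged task, if any.
        for (auto& future : futures) future.get();
    }
};

// Trivial executor that runs each task immediately in the calling thread.
class InlineExecutor : public TaskExecutor {
public:
    void run(Task task) override { task(); }
};

}  // namespace sketch
```

With this sketch, calling `runAndWait()` on an `InlineExecutor` returns only after every task has run, and an exception thrown inside a task surfaces at the `runAndWait()` call site instead of escaping from `run()`.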

| using InferenceEngine::IStreamsExecutor::Ptr = std::shared_ptr<IStreamsExecutor> |

A shared pointer to the IStreamsExecutor interface.

| enum InferenceEngine::IStreamsExecutor::ThreadBindingType : std::uint8_t |

| virtual void InferenceEngine::IStreamsExecutor::Execute (Task task) | pure virtual |

Execute the task in the current thread using streams executor configuration and constraints.

| task | A task to start |

Implemented in InferenceEngine::CPUStreamsExecutor.

| virtual int InferenceEngine::IStreamsExecutor::GetNumaNodeId () | pure virtual |

Return the id of current NUMA Node.

Returns the ID of the current NUMA node, or throws an exception if not called from a stream thread.

Implemented in InferenceEngine::CPUStreamsExecutor.

| virtual int InferenceEngine::IStreamsExecutor::GetStreamId () | pure virtual |

Return the index of current stream.

Implemented in InferenceEngine::CPUStreamsExecutor.