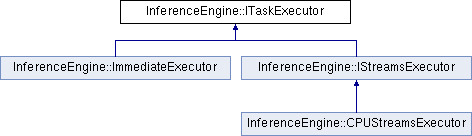

Interface for Task Executor. Inference Engine uses the InferenceEngine::ITaskExecutor interface to run all asynchronous internal tasks.

#include <ie_itask_executor.hpp>

Public Types

| Type | Member | Description |
|---|---|---|
| using | Ptr = std::shared_ptr< ITaskExecutor > | A shared pointer to the ITaskExecutor interface |

Public Member Functions

| Type | Member | Description |
|---|---|---|
| virtual | ~ITaskExecutor() = default | Destroys the object. |
| virtual void | run(Task task) = 0 | Executes an InferenceEngine::Task inside the task executor context. |
| virtual void | runAndWait(const std::vector< Task > &tasks) | Executes all of the tasks and waits for their completion. |

Different implementations of task executors can be used for different purposes. For example, an immediate task executor (InferenceEngine::ImmediateExecutor) satisfies the InferenceEngine::ITaskExecutor interface restrictions but runs tasks in the current thread.

It is the responsibility of the InferenceEngine::ITaskExecutor user to wait for task execution to complete. The standard C++11 way to wait for task completion is to use std::packaged_task, or std::promise together with std::future: the wrapped task calls std::promise::set_value() when it finishes normally or std::promise::set_exception() when it throws, and the caller blocks on the corresponding std::future, whose get() method rethrows any stored exception.

using InferenceEngine::ITaskExecutor::Ptr = std::shared_ptr< ITaskExecutor >

A shared pointer to the ITaskExecutor interface.

virtual void InferenceEngine::ITaskExecutor::run(Task task) = 0

Executes an InferenceEngine::Task inside the task executor context.

| Parameter | Description |
|---|---|
| task | A task to start |

Implemented in InferenceEngine::ImmediateExecutor and InferenceEngine::CPUStreamsExecutor.

virtual void InferenceEngine::ITaskExecutor::runAndWait(const std::vector< Task > &tasks)

Executes all of the tasks and waits for their completion. The default runAndWait() implementation uses the pure virtual run() method together with higher-level synchronization primitives from the STL. Each task is wrapped into a std::packaged_task, which provides a std::future. The std::packaged_task calls the task and signals to the std::future either that the task has finished or that it threw an exception. The std::future is then used to wait for the task to complete and to extract any exception the task threw.

| Parameter | Description |
|---|---|
| tasks | A vector of tasks to execute |