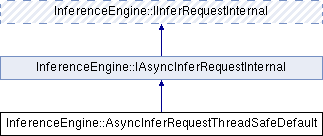

Base class with a default implementation of an asynchronous, multi-stage inference request. To customize the pipeline stages, a derived class should change the contents of the AsyncInferRequestThreadSafeDefault::_pipeline member container, which consists of pairs of executors and the tasks they run. Plugins are encouraged to use this class as the base class for their asynchronous inference request implementations.

#include <ie_infer_async_request_thread_safe_default.hpp>
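The stage/executor pairing described above can be illustrated with a small self-contained model. The sketch reuses the names from this page (Task, ITaskExecutor, Stage, Pipeline, _pipeline) but is not the Inference Engine API: the run(Task) method, the LoggingInlineExecutor helper, MyAsyncRequest, and RunPipeline() are hypothetical, and every executor runs its task inline so the execution order is easy to check. It shows a derived request refilling _pipeline with three stages that alternate between a CPU executor and a device executor.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Simplified stand-ins for the types named on this page; NOT the Inference Engine headers.
using Task = std::function<void()>;

struct ITaskExecutor {
    using Ptr = std::shared_ptr<ITaskExecutor>;
    virtual ~ITaskExecutor() = default;
    virtual void run(Task task) = 0;  // hypothetical interface for this sketch
};

// Hypothetical helper: runs each task inline and records which executor ran it.
struct LoggingInlineExecutor : ITaskExecutor {
    LoggingInlineExecutor(std::string name, std::vector<std::string>& log)
        : name_(std::move(name)), log_(log) {}
    void run(Task task) override {
        log_.push_back(name_);
        task();
    }
    std::string name_;
    std::vector<std::string>& log_;
};

using Stage = std::pair<ITaskExecutor::Ptr, Task>;  // executor + the task it runs
using Pipeline = std::vector<Stage>;

// A derived request customizes its stages by refilling _pipeline.
class MyAsyncRequest {
public:
    MyAsyncRequest(ITaskExecutor::Ptr cpu, ITaskExecutor::Ptr device,
                   std::vector<std::string>& steps) {
        auto* out = &steps;
        _pipeline = {
            {cpu,    [out] { out->push_back("preprocess"); }},
            {device, [out] { out->push_back("infer"); }},
            {cpu,    [out] { out->push_back("postprocess"); }},
        };
    }

    // Runs every stage, in order, on the executor paired with it.
    void RunPipeline() {
        for (auto& stage : _pipeline) stage.first->run(stage.second);
    }

protected:
    Pipeline _pipeline;
};
```

In a real plugin the executors would usually hand tasks to worker threads; the inline executors here exist only to make the stage order observable.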

Public Types

| Type | Member | Description |
|---|---|---|
| using | Ptr = std::shared_ptr< AsyncInferRequestThreadSafeDefault > | A shared pointer to AsyncInferRequestThreadSafeDefault. |

Public Types inherited from InferenceEngine::IAsyncInferRequestInternal

| Type | Member | Description |
|---|---|---|
| typedef std::shared_ptr< IAsyncInferRequestInternal > | Ptr | A shared pointer to the IAsyncInferRequestInternal interface. |

Public Types inherited from InferenceEngine::IInferRequestInternal

| Type | Member | Description |
|---|---|---|
| typedef std::shared_ptr< IInferRequestInternal > | Ptr | A shared pointer to the IInferRequestInternal interface. |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
|  | AsyncInferRequestThreadSafeDefault (const InferRequestInternal::Ptr &request, const ITaskExecutor::Ptr &taskExecutor, const ITaskExecutor::Ptr &callbackExecutor) | Wraps an InferRequestInternal::Ptr implementation and constructs an AsyncInferRequestThreadSafeDefault::_pipeline in which taskExecutor runs InferRequestInternal::Infer asynchronously. |
|  | ~AsyncInferRequestThreadSafeDefault () | Destroys the object, stops AsyncInferRequestThreadSafeDefault::_pipeline, and waits for it to finish. |
| StatusCode | Wait (int64_t millis_timeout) override | Waits for completion of all pipeline stages. If the pipeline raises an exception, it is rethrown here. |
| void | StartAsync () override | Starts inference of the specified input(s) in asynchronous mode. |
| void | Infer () override | Infers the specified input(s) in synchronous mode. |
| std::map< std::string, InferenceEngineProfileInfo > | GetPerformanceCounts () const override | Queries per-layer performance measures to identify the most time-consuming layers. Note: some plugins may not provide meaningful data. |
| void | SetBlob (const std::string &name, const Blob::Ptr &data) override | Sets input/output data to infer. |
| void | SetBlob (const std::string &name, const Blob::Ptr &data, const PreProcessInfo &info) override | Sets pre-processing for input data. |
| Blob::Ptr | GetBlob (const std::string &name) override | Gets input/output data to infer. |
| const PreProcessInfo & | GetPreProcess (const std::string &name) const override | Gets pre-processing info for input data. |
| void | SetBatch (int batch) override | Sets a new batch size when dynamic batching is enabled in the executable network that created this request. |
| void | GetUserData (void **data) override | Gets arbitrary data for the request. |
| void | SetUserData (void *data) override | Sets arbitrary data for the request. |
| void | SetCompletionCallback (IInferRequest::CompletionCallback callback) override | Sets a callback function that is called on success or failure of an asynchronous request. |
| void | SetPointerToPublicInterface (InferenceEngine::IInferRequest::Ptr ptr) | Sets the pointer to the public interface. |
| std::vector< InferenceEngine::IVariableStateInternal::Ptr > | QueryState () override | Queries memory states. |
| void | ThrowIfCanceled () const |  |
| void | Cancel () override | Cancels the current inference request execution. |

Public Member Functions inherited from InferenceEngine::IAsyncInferRequestInternal

| Return type | Member | Description |
|---|---|---|
| virtual | ~IAsyncInferRequestInternal ()=default | A virtual destructor. |

Public Member Functions inherited from InferenceEngine::IInferRequestInternal

| Return type | Member | Description |
|---|---|---|
| virtual | ~IInferRequestInternal ()=default | Destroys the object. |

Protected Types

| Type | Member | Description |
|---|---|---|
| using | Stage = std::pair< ITaskExecutor::Ptr, Task > | Each pipeline stage is a Task that is executed by the specified ITaskExecutor implementation. |
| using | Pipeline = std::vector< Stage > | A pipeline is a vector of stages. |

Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| void | CheckState () const | Throws an exception if the inference request is busy or canceled. |
| void | RunFirstStage (const Pipeline::iterator itBeginStage, const Pipeline::iterator itEndStage, const ITaskExecutor::Ptr callbackExecutor={}) | Creates and runs the first stage task. If the destructor has not been called, adds a new std::future to the AsyncInferRequestThreadSafeDefault::_futures list; that future is used to wait for AsyncInferRequestThreadSafeDefault::_pipeline to finish. |
| void | StopAndWait () | Forbids pipeline starts and waits for all started pipelines to finish. |
| virtual void | StartAsync_ThreadUnsafe () | Starts an asynchronous pipeline (thread-unsafe variant). |
| virtual void | Infer_ThreadUnsafe () | Performs inference of the pipeline in synchronous mode. |
| void | InferUsingAsync () | Implements Infer() using StartAsync() and Wait(). |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| ITaskExecutor::Ptr | _requestExecutor | Used to run inference CPU tasks. |
| ITaskExecutor::Ptr | _callbackExecutor | Used to run the post-inference callback in the asynchronous pipeline. |
| ITaskExecutor::Ptr | _syncCallbackExecutor | Used to run the post-inference callback in the synchronous pipeline. |
| Pipeline | _pipeline | Pipeline that should be filled by the derived class. |
| Pipeline | _syncPipeline | Synchronous pipeline that should be filled by the derived class. |

Friends

| Type | Member | Description |
|---|---|---|
| struct | DisableCallbackGuard |  |


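AsyncInferRequestThreadSafeDefault (const InferRequestInternal::Ptr &request, const ITaskExecutor::Ptr &taskExecutor, const ITaskExecutor::Ptr &callbackExecutor) [inline]

Wraps an InferRequestInternal::Ptr implementation and constructs an AsyncInferRequestThreadSafeDefault::_pipeline in which taskExecutor runs InferRequestInternal::Infer asynchronously.

Parameters

| Direction | Name | Description |
|---|---|---|
| [in] | request | The synchronous request. |
| [in] | taskExecutor | The task executor. |
| [in] | callbackExecutor | The callback executor. |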
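As a rough illustration of what this constructor sets up, the sketch below wraps a synchronous request in a single-stage pipeline whose only task calls Infer() on taskExecutor, and then dispatches a completion callback on callbackExecutor. All types here (SyncRequest, CountingInlineExecutor, DefaultAsyncRequest, and the std::function callback signature) are simplified, hypothetical stand-ins; error handling and the real IInferRequest::CompletionCallback signature are left out.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

using Task = std::function<void()>;

// Hypothetical executor interface; the inline executor keeps the example deterministic.
struct ITaskExecutor {
    using Ptr = std::shared_ptr<ITaskExecutor>;
    virtual ~ITaskExecutor() = default;
    virtual void run(Task task) = 0;
};

struct CountingInlineExecutor : ITaskExecutor {
    int tasksRun = 0;
    void run(Task task) override { ++tasksRun; task(); }
};

// Stand-in for the synchronous InferRequestInternal being wrapped.
struct SyncRequest {
    using Ptr = std::shared_ptr<SyncRequest>;
    int inferCalls = 0;
    void Infer() { ++inferCalls; }
};

using Stage = std::pair<ITaskExecutor::Ptr, Task>;
using Pipeline = std::vector<Stage>;

class DefaultAsyncRequest {
public:
    DefaultAsyncRequest(const SyncRequest::Ptr& request,
                        const ITaskExecutor::Ptr& taskExecutor,
                        const ITaskExecutor::Ptr& callbackExecutor)
        : _callbackExecutor(callbackExecutor),
          // Default pipeline: one stage that runs the synchronous Infer() on taskExecutor.
          _pipeline{Stage{taskExecutor, [request] { request->Infer(); }}} {}

    void SetCompletionCallback(std::function<void()> cb) { _callback = std::move(cb); }

    void StartAsync() {
        for (auto& stage : _pipeline) stage.first->run(stage.second);
        // In this sketch the user callback runs on the callback executor after the last stage.
        if (_callback) _callbackExecutor->run(_callback);
    }

protected:
    ITaskExecutor::Ptr _callbackExecutor;
    Pipeline _pipeline;
    std::function<void()> _callback;
};
```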

Blob::Ptr GetBlob (const std::string &name) [inline, override, virtual]

Gets input/output data to infer.

Parameters

| Parameter | Description |
|---|---|
| name | Name of the input or output blob. |

Implements InferenceEngine::IInferRequestInternal.

std::map< std::string, InferenceEngineProfileInfo > GetPerformanceCounts () const [inline, override, virtual]

Queries per-layer performance measures to identify the most time-consuming layers. Note: some plugins may not provide meaningful data.

Implements InferenceEngine::IInferRequestInternal.

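const PreProcessInfo & GetPreProcess (const std::string &name) const [inline, override, virtual]

Gets pre-processing info for input data.

Parameters

| Parameter | Description |
|---|---|
| name | Name of the input blob. |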

Implements InferenceEngine::IInferRequestInternal.

void GetUserData (void **data) [inline, override, virtual]

Gets arbitrary data for the request.

Parameters

| Parameter | Description |
|---|---|
| data | A pointer to a pointer to arbitrary data. |

Implements InferenceEngine::IAsyncInferRequestInternal.

void Infer () [inline, override, virtual]

Infers the specified input(s) in synchronous mode.

Implements InferenceEngine::IInferRequestInternal.

virtual void Infer_ThreadUnsafe () [inline, protected, virtual]

Performs inference of the pipeline in synchronous mode.
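The summary table above notes that InferUsingAsync() implements Infer() using StartAsync() and Wait(). The sketch below models that idea with std::async standing in for the task executors; the Request class and its stages member are hypothetical. Infer() simply starts the pipeline and blocks until it completes, so an exception thrown by any stage surfaces to the synchronous caller.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <stdexcept>
#include <vector>

// Hypothetical request: the stages run on a background thread started by StartAsync().
class Request {
public:
    std::vector<std::function<void()>> stages;

    void StartAsync() {
        // std::launch::async forces a new thread, mimicking an asynchronous pipeline.
        _future = std::async(std::launch::async, [this] {
            for (auto& stage : stages) stage();
        }).share();
    }

    // Blocks until the pipeline finishes; get() rethrows any stage exception.
    void Wait() { _future.get(); }

    // Synchronous inference expressed through the asynchronous path.
    void Infer() {
        StartAsync();
        Wait();
    }

private:
    std::shared_future<void> _future;
};
```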

|

inlineoverridevirtual |

Queries memory states.

Implements InferenceEngine::IInferRequestInternal.

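void RunFirstStage (const Pipeline::iterator itBeginStage, const Pipeline::iterator itEndStage, const ITaskExecutor::Ptr callbackExecutor={}) [inline, protected]

Creates and runs the first stage task. If the destructor has not been called, adds a new std::future to the AsyncInferRequestThreadSafeDefault::_futures list; that future is used to wait for AsyncInferRequestThreadSafeDefault::_pipeline to finish.

Parameters

| Direction | Name | Description |
|---|---|---|
| [in] | itBeginStage | Iterator to the beginning of the pipeline. |
| [in] | itEndStage | End iterator of the pipeline. |
| [in] | callbackExecutor | Executor for the final or error stage. |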
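One way to read this description is as a chain: each stage runs on its own executor and, once it finishes, submits the next stage, while a promise/future pair lets callers wait for the whole chain. The sketch below follows that reading under stated assumptions: RunStages, Request, and InlineExecutor are hypothetical, the callbackExecutor parameter and the destructor check are omitted, and the first exception skips the remaining stages and is stored in the future.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <future>
#include <iterator>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

using Task = std::function<void()>;

struct ITaskExecutor {
    using Ptr = std::shared_ptr<ITaskExecutor>;
    virtual ~ITaskExecutor() = default;
    virtual void run(Task task) = 0;
};

struct InlineExecutor : ITaskExecutor {
    void run(Task task) override { task(); }
};

using Stage = std::pair<ITaskExecutor::Ptr, Task>;
using Pipeline = std::vector<Stage>;

// Hypothetical chaining helper: runs [it, end) one stage after another.
void RunStages(Pipeline::iterator it, Pipeline::iterator end,
               std::shared_ptr<std::promise<void>> done) {
    if (it == end) {
        done->set_value();  // every stage succeeded
        return;
    }
    it->first->run([task = it->second, it, end, done] {
        try {
            task();
        } catch (...) {
            done->set_exception(std::current_exception());  // skip remaining stages
            return;
        }
        RunStages(std::next(it), end, done);  // hand off to the next stage
    });
}

class Request {
public:
    Pipeline _pipeline;
    std::vector<std::future<void>> _futures;

    // Starts the pipeline and keeps its future so the caller can wait on it later.
    void RunFirstStage() {
        auto done = std::make_shared<std::promise<void>>();
        _futures.push_back(done->get_future());
        RunStages(_pipeline.begin(), _pipeline.end(), done);
    }
};
```

The pipeline vector must not be modified while a run is in flight, because the chained tasks hold iterators into it.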

void SetBatch (int batch) [inline, override, virtual]

Sets a new batch size when dynamic batching is enabled in the executable network that created this request.

Parameters

| Parameter | Description |
|---|---|
| batch | New batch size to be used by all subsequent inference calls for this request. |

Implements InferenceEngine::IInferRequestInternal.

void SetBlob (const std::string &name, const Blob::Ptr &data) [inline, override, virtual]

Sets input/output data to infer.

Parameters

| Parameter | Description |
|---|---|
| name | Name of the input or output blob. |
| data | Reference to the input or output blob. The blob type must match the network input precision and size. |

Implements InferenceEngine::IInferRequestInternal.

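void SetBlob (const std::string &name, const Blob::Ptr &data, const PreProcessInfo &info) [inline, override, virtual]

Sets pre-processing for input data.

Parameters

| Parameter | Description |
|---|---|
| name | Name of the input blob. |
| data | Reference to the input or output blob. The blob type must match the network input precision and size. |
| info | Pre-processing info for the blob. |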

Implements InferenceEngine::IInferRequestInternal.

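void SetCompletionCallback (IInferRequest::CompletionCallback callback) [inline, override, virtual]

Sets a callback function that is called on success or failure of an asynchronous request.

Parameters

| Parameter | Description |
|---|---|
| callback | Function to be called with the following description: |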

Implements InferenceEngine::IAsyncInferRequestInternal.

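void SetPointerToPublicInterface (InferenceEngine::IInferRequest::Ptr ptr) [inline]

Sets the pointer to the public interface.

Parameters

| Direction | Name | Description |
|---|---|---|
| [in] | ptr | A shared pointer to a public IInferRequest interface. |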

void SetUserData (void *data) [inline, override, virtual]

Sets arbitrary data for the request.

Parameters

| Parameter | Description |
|---|---|
| data | A pointer to arbitrary data. |

Implements InferenceEngine::IAsyncInferRequestInternal.
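GetUserData() takes void** while SetUserData() takes void*: the request stores a single opaque pointer, and the getter writes it back through the caller's pointer. A minimal model of that round trip follows; the Request class is hypothetical, and it assumes the request neither owns nor dereferences the stored pointer.

```cpp
#include <cassert>

// Hypothetical model of the user-data slot: one opaque, non-owning pointer.
class Request {
public:
    void SetUserData(void* data) { _userData = data; }

    // Out-parameter style: the caller passes the address of its own pointer.
    void GetUserData(void** data) {
        if (data != nullptr) *data = _userData;
    }

private:
    void* _userData = nullptr;
};
```

The caller remains responsible for keeping the pointed-to object alive for as long as the request may hand it back.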

void StartAsync () [inline, override, virtual]

Starts inference of the specified input(s) in asynchronous mode.

Implements InferenceEngine::IAsyncInferRequestInternal.

virtual void StartAsync_ThreadUnsafe () [inline, protected, virtual]

Starts an asynchronous pipeline (thread-unsafe variant).
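A common way to structure a thread-safe request is a public entry point that takes a lock, validates the state, and then calls a virtual hook such as StartAsync_ThreadUnsafe() that derived classes override. The sketch below assumes StartAsync() and StartAsync_ThreadUnsafe() relate that way; RequestBase, CountingRequest, and MarkFinished() are hypothetical, and CheckState() here models only the busy case, not cancellation.

```cpp
#include <cassert>
#include <mutex>
#include <stdexcept>

// Hypothetical split between a locked public entry point and an unlocked virtual hook.
class RequestBase {
public:
    virtual ~RequestBase() = default;

    void StartAsync() {
        std::lock_guard<std::mutex> lock(_mutex);
        CheckState();               // reject the call while a previous run is in flight
        _busy = true;
        StartAsync_ThreadUnsafe();  // called with _mutex held; must not call MarkFinished()
    }

    // In a fuller implementation the pipeline's last stage would call this.
    void MarkFinished() {
        std::lock_guard<std::mutex> lock(_mutex);
        _busy = false;
    }

protected:
    virtual void StartAsync_ThreadUnsafe() = 0;

    void CheckState() const {
        if (_busy) throw std::logic_error("request is busy");
    }

private:
    std::mutex _mutex;
    bool _busy = false;
};

class CountingRequest : public RequestBase {
public:
    int starts = 0;

protected:
    void StartAsync_ThreadUnsafe() override { ++starts; }
};
```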

void StopAndWait () [inline, protected]

Forbids pipeline starts and waits for all started pipelines to finish.
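StopAndWait() combines two steps: refuse any new pipeline start, then block on every pipeline that is already running. The model below assumes each started pipeline leaves a std::future behind (this page mentions a _futures list for that purpose); the Request class and its Start() method are hypothetical, and std::async stands in for the task executors.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <mutex>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical model: every started pipeline leaves a future behind.
class Request {
public:
    // Like the destructor described on this page, stop and wait before destruction.
    ~Request() { StopAndWait(); }

    void Start(std::function<void()> pipeline) {
        std::lock_guard<std::mutex> lock(_mutex);
        if (_stopped) throw std::logic_error("pipeline start is forbidden");
        _futures.push_back(std::async(std::launch::async, std::move(pipeline)));
    }

    void StopAndWait() {
        std::vector<std::future<void>> futures;
        {
            std::lock_guard<std::mutex> lock(_mutex);
            _stopped = true;         // no new pipeline can start from now on
            futures.swap(_futures);  // take ownership, then wait without holding the lock
        }
        for (auto& f : futures) {
            if (f.valid()) f.wait();  // wait() only blocks; it does not rethrow stage errors
        }
    }

private:
    std::mutex _mutex;
    bool _stopped = false;
    std::vector<std::future<void>> _futures;
};
```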

StatusCode Wait (int64_t millis_timeout) [inline, override, virtual]

Waits for completion of all pipeline stages. If the pipeline raises an exception, it is rethrown here.

Parameters

| Parameter | Description |
|---|---|
| millis_timeout | Timeout in milliseconds to wait, or a special IInferRequest::WaitMode enum value. |

Implements InferenceEngine::IAsyncInferRequestInternal.
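The sketch below models Wait() on top of a std::shared_future: one special value blocks until the result is ready, 0 only polls, and a positive value waits up to that many milliseconds; once the pipeline has finished, get() rethrows any exception a stage raised. The Status enum and the constants are hypothetical; the sketch assumes -1 and 0 correspond to IInferRequest::WaitMode::RESULT_READY and STATUS_ONLY, which should be checked against the headers.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <exception>
#include <future>
#include <stdexcept>

// Hypothetical status codes and wait modes for this sketch only.
enum class Status { Ok, ResultNotReady };
constexpr std::int64_t kWaitResultReady = -1;  // assumed to mirror RESULT_READY: block until done
constexpr std::int64_t kWaitStatusOnly = 0;    // assumed to mirror STATUS_ONLY: just poll

// Waits on the pipeline's future; get() rethrows a stage exception.
Status Wait(std::shared_future<void>& pipeline, std::int64_t millis_timeout) {
    if (millis_timeout < kWaitResultReady)
        throw std::invalid_argument("timeout must be -1, 0, or positive");
    if (millis_timeout == kWaitResultReady) {
        pipeline.wait();
    } else {
        auto status = pipeline.wait_for(std::chrono::milliseconds(millis_timeout));
        if (status != std::future_status::ready) return Status::ResultNotReady;
    }
    pipeline.get();  // rethrows the exception the pipeline raised, if any
    return Status::Ok;
}
```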