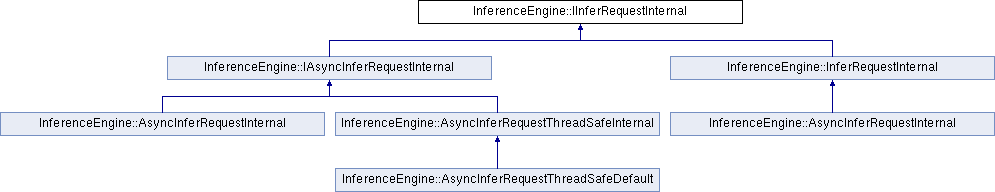

An internal API for synchronous inference requests, to be implemented by a plugin and used by the InferRequestBase forwarding mechanism.

#include <ie_iinfer_request_internal.hpp>

Public Types

| Type | Name | Description |
| --- | --- | --- |
| typedef std::shared_ptr< IInferRequestInternal > | Ptr | A shared pointer to an IInferRequestInternal interface. |

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| virtual | ~IInferRequestInternal ()=default | Destroys the object. |
| virtual void | Infer ()=0 | Runs inference on the specified input(s) in synchronous mode. |
| virtual void | GetPerformanceCounts (std::map< std::string, InferenceEngineProfileInfo > &perfMap) const =0 | Queries per-layer performance measurements to identify the most time-consuming layer. Note: not all plugins provide meaningful data. |
| virtual void | SetBlob (const char *name, const Blob::Ptr &data)=0 | Sets input/output data for inference. |
| virtual void | GetBlob (const char *name, Blob::Ptr &data)=0 | Gets input/output data for inference. |
| virtual void | SetBlob (const char *name, const Blob::Ptr &data, const PreProcessInfo &info)=0 | Sets the pre-processing information for input data. |
| virtual void | GetPreProcess (const char *name, const PreProcessInfo **info) const =0 | Gets the pre-processing information for input data. |
| virtual void | SetBatch (int batch)=0 | Sets a new batch size when dynamic batching is enabled in the executable network that created this request. |

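To make the shape of this interface concrete, the following is a minimal sketch of a plugin-side implementation. It is not taken from the Inference Engine sources: `Blob`, `PreProcessInfo`, and `InferenceEngineProfileInfo` are simplified stand-ins defined locally so the example compiles on its own, and `DoublingRequest` with its "input"/"output" blob names is a hypothetical toy request. Only the method set mirrors the declarations listed on this page.

```cpp
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <vector>

// Simplified stand-ins (assumptions) for the real Inference Engine types.
struct Blob {
    using Ptr = std::shared_ptr<Blob>;
    std::vector<float> values;
};
struct PreProcessInfo {};
struct InferenceEngineProfileInfo { long long realTime_uSec = 0; };

// Same pure virtual method set as the interface documented on this page.
class IInferRequestInternal {
public:
    using Ptr = std::shared_ptr<IInferRequestInternal>;
    virtual ~IInferRequestInternal() = default;
    virtual void Infer() = 0;
    virtual void GetPerformanceCounts(
        std::map<std::string, InferenceEngineProfileInfo>& perfMap) const = 0;
    virtual void SetBlob(const char* name, const Blob::Ptr& data) = 0;
    virtual void GetBlob(const char* name, Blob::Ptr& data) = 0;
    virtual void SetBlob(const char* name, const Blob::Ptr& data,
                         const PreProcessInfo& info) = 0;
    virtual void GetPreProcess(const char* name,
                               const PreProcessInfo** info) const = 0;
    virtual void SetBatch(int batch) = 0;
};

// Hypothetical toy request: writes 2 * input into the "output" blob.
class DoublingRequest final : public IInferRequestInternal {
public:
    void Infer() override {
        // at() throws std::out_of_range if "input" was never set.
        Blob::Ptr in = blobs_.at("input");
        auto out = std::make_shared<Blob>();
        for (float v : in->values) out->values.push_back(2.0f * v);
        blobs_["output"] = out;
    }
    void GetPerformanceCounts(
        std::map<std::string, InferenceEngineProfileInfo>& perfMap) const override {
        perfMap.clear();  // this toy request records no timings
    }
    void SetBlob(const char* name, const Blob::Ptr& data) override {
        if (!name || !data) throw std::invalid_argument("null name or blob");
        blobs_[name] = data;
    }
    void GetBlob(const char* name, Blob::Ptr& data) override {
        if (!name) throw std::invalid_argument("null name");
        data = blobs_.at(name);
    }
    void SetBlob(const char* name, const Blob::Ptr& data,
                 const PreProcessInfo& info) override {
        SetBlob(name, data);       // store the blob first
        preProcess_[name] = info;  // then remember its pre-processing info
    }
    void GetPreProcess(const char* name,
                       const PreProcessInfo** info) const override {
        if (!name || !info) throw std::invalid_argument("null argument");
        *info = &preProcess_.at(name);
    }
    void SetBatch(int batch) override {
        if (batch < 1) throw std::invalid_argument("batch must be positive");
        batch_ = batch;
    }

private:
    std::map<std::string, Blob::Ptr> blobs_;
    std::map<std::string, PreProcessInfo> preProcess_;
    int batch_ = 1;
};
```

A caller would set the "input" blob with SetBlob, call Infer, and then read the result back with GetBlob using the name "output". Reporting errors by throwing is a choice made for this sketch; the page itself does not specify how failures are signalled.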

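| virtual void IInferRequestInternal::GetBlob (const char *name, Blob::Ptr &data) | pure virtual |

Gets input/output data for inference.

| Parameter | Description |
| --- | --- |
| name | Name of the input or output blob. |
| data | Reference to the input or output blob. The type of Blob must correspond to the network input precision and size. |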

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.

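| virtual void IInferRequestInternal::GetPerformanceCounts (std::map< std::string, InferenceEngineProfileInfo > &perfMap) const | pure virtual |

Queries per-layer performance measurements to identify the most time-consuming layer. Note: not all plugins provide meaningful data.

| Parameter | Description |
| --- | --- |
| perfMap | Map of layer names to the profiling information for each layer. |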

Implemented in InferenceEngine::AsyncInferRequestThreadSafeInternal.
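As an illustration of how a filled perfMap might be used to find the most time-consuming layer, here is a small sketch. The `InferenceEngineProfileInfo` struct below is a local stand-in that keeps only a `realTime_uSec` field, and `slowestLayer` is a hypothetical helper, not part of the Inference Engine API.

```cpp
#include <algorithm>
#include <map>
#include <string>

// Stand-in (assumption): only the wall-clock time field is modelled here.
struct InferenceEngineProfileInfo { long long realTime_uSec = 0; };

using PerfMap = std::map<std::string, InferenceEngineProfileInfo>;

// Returns the name of the layer with the largest realTime_uSec,
// or an empty string when the map is empty.
std::string slowestLayer(const PerfMap& perfMap) {
    auto it = std::max_element(
        perfMap.begin(), perfMap.end(),
        [](const PerfMap::value_type& a, const PerfMap::value_type& b) {
            return a.second.realTime_uSec < b.second.realTime_uSec;
        });
    return it == perfMap.end() ? std::string() : it->first;
}
```

Because not all plugins provide meaningful data, a caller should be prepared for an empty map or for entries whose timings are all zero.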

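| virtual void IInferRequestInternal::GetPreProcess (const char *name, const PreProcessInfo **info) const | pure virtual |

Gets the pre-processing information for input data.

| Parameter | Description |
| --- | --- |
| name | Name of the input blob. |
| info | Pointer to a pointer to a PreProcessInfo structure, used to return the pre-processing information. |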

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.
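The pointer-to-pointer parameter is an output argument: the caller passes the address of its own pointer, and the callee makes that pointer refer to stored information. The sketch below shows the pattern in isolation. `PreProcessStore`, its `set` method, and the `resizeAlgorithm` field of the stand-in `PreProcessInfo` are hypothetical names introduced only for this example.

```cpp
#include <map>
#include <stdexcept>
#include <string>

// Stand-in (assumption) for the real PreProcessInfo type.
struct PreProcessInfo { int resizeAlgorithm = 0; };

// Hypothetical holder that exposes a GetPreProcess with the same signature.
class PreProcessStore {
public:
    void set(const std::string& name, const PreProcessInfo& info) {
        infos_[name] = info;
    }

    // Writes the address of the stored info for `name` into *info.
    void GetPreProcess(const char* name, const PreProcessInfo** info) const {
        if (!name || !info) throw std::invalid_argument("null argument");
        auto it = infos_.find(name);
        if (it == infos_.end()) throw std::out_of_range("unknown input name");
        *info = &it->second;
    }

private:
    std::map<std::string, PreProcessInfo> infos_;
};
```

In this sketch the returned pointer refers to data owned by the store, so it remains valid only while the store is alive and the entry is not erased. Whether real plugins give the same lifetime guarantee is not stated on this page.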

| virtual void IInferRequestInternal::Infer () | pure virtual |

Runs inference on the specified input(s) in synchronous mode.

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.

| virtual void IInferRequestInternal::SetBatch (int batch) | pure virtual |

Sets a new batch size when dynamic batching is enabled in the executable network that created this request.

| Parameter | Description |
| --- | --- |
| batch | New batch size to be used by all subsequent inference calls for this request. |

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.
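One plausible way for an implementation to handle SetBatch is to validate the requested size and store it for later inference calls. The sketch below assumes that the request knows a maximum batch size fixed by the network and rejects values outside `[1, maxBatch]`; that range check, the `BatchState` class, and its `batch()` accessor are assumptions for illustration and are not described on this page.

```cpp
#include <stdexcept>

// Hypothetical helper holding the batch size of a single request.
class BatchState {
public:
    // Assumption: the batch starts at the network's maximum batch size.
    explicit BatchState(int maxBatch) : maxBatch_(maxBatch), batch_(maxBatch) {}

    // Stores the new batch size; it applies to all following inference calls.
    void SetBatch(int batch) {
        if (batch < 1 || batch > maxBatch_) {
            throw std::invalid_argument("batch size out of range");
        }
        batch_ = batch;
    }

    int batch() const { return batch_; }

private:
    int maxBatch_;
    int batch_;
};
```

A rejected call leaves the previously stored batch size unchanged, so later inference calls keep using the last valid value.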

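| virtual void IInferRequestInternal::SetBlob (const char *name, const Blob::Ptr &data) | pure virtual |

Sets input/output data for inference.

| Parameter | Description |
| --- | --- |
| name | Name of the input or output blob. |
| data | Reference to the input or output blob. The type of Blob must correspond to the network input precision and size. |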

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.

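| virtual void IInferRequestInternal::SetBlob (const char *name, const Blob::Ptr &data, const PreProcessInfo &info) | pure virtual |

Sets the pre-processing information for input data.

| Parameter | Description |
| --- | --- |
| name | Name of the input blob. |
| data | Reference to the input or output blob. The type of Blob must correspond to the network input precision and size. |
| info | Pre-processing information for the blob. |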

Implemented in InferenceEngine::InferRequestInternal, and InferenceEngine::AsyncInferRequestThreadSafeInternal.