Convert TensorFlow Neural Collaborative Filtering Model

This tutorial explains how to convert a Neural Collaborative Filtering (NCF) model to the Intermediate Representation (IR).

The public TensorFlow NCF model does not contain pre-trained weights. To convert this model to the IR:

Use the instructions from this repository to train the model.

Freeze the inference graph you get on previous step in

model_dirfollowing the instructions from the Freezing Custom Models in Python* section of Converting a TensorFlow* Model. Run the following commands:import tensorflow as tf from tensorflow.python.framework import graph_io sess = tf.compat.v1.Session() saver = tf.compat.v1.train.import_meta_graph("/path/to/model/model.meta") saver.restore(sess, tf.train.latest_checkpoint('/path/to/model/')) frozen = tf.compat.v1.graph_util.convert_variables_to_constants(sess, sess.graph_def, \ ["rating/BiasAdd"]) graph_io.write_graph(frozen, './', 'inference_graph.pb', as_text=False)

where

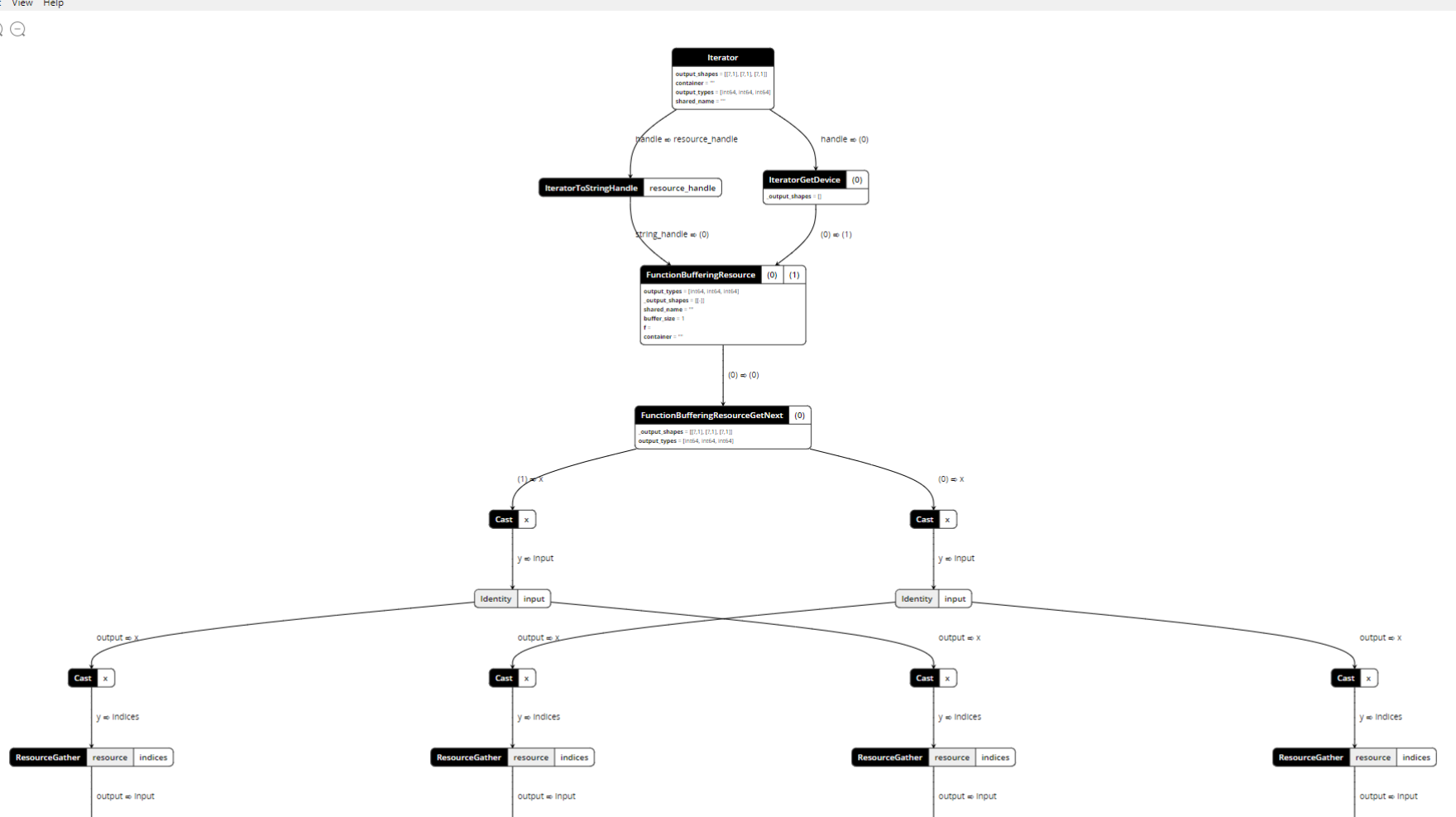

rating/BiasAddis an output node.Convert the model to the IR.If you look at your frozen model, you can see that it has one input that is split into four

ResourceGatherlayers. (Click image to zoom in.)

But as the Model Optimizer does not support such data feeding, you should skip it. Cut the edges incoming in ResourceGather s port 1:

    mo --input_model inference_graph.pb \
       --input 1:embedding/embedding_lookup,1:embedding_1/embedding_lookup,1:embedding_2/embedding_lookup,1:embedding_3/embedding_lookup \
       --input_shape "[256],[256],[256],[256]" \
       --output_dir <OUTPUT_MODEL_DIR>

In the input_shape parameter, 256 specifies the batch_size for your model.
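The node names passed to --input can also be read from the frozen graph instead of from the picture. The following is a minimal sketch, not part of the original instructions: the helper names gather_input_specs and load_graph_nodes are hypothetical, and it assumes that the embedding lookups appear in the graph with the op type ResourceGather, as described above. The demo uses stand-in objects so that it runs without TensorFlow installed.

```python
from types import SimpleNamespace


def gather_input_specs(nodes, op_type="ResourceGather", port=1):
    """Return 'port:node_name' strings for every node of the given op type.

    `nodes` is any iterable of objects with .name and .op attributes,
    such as the entries of a TensorFlow GraphDef's `node` field.
    """
    return [f"{port}:{node.name}" for node in nodes if node.op == op_type]


def load_graph_nodes(pb_path):
    """Read a frozen GraphDef from disk and return its nodes (hypothetical helper)."""
    # Imported lazily so gather_input_specs stays usable without TensorFlow.
    import tensorflow as tf

    graph_def = tf.compat.v1.GraphDef()
    with open(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return list(graph_def.node)


# Stand-in nodes that mimic the .name/.op attributes of GraphDef nodes.
demo_nodes = [
    SimpleNamespace(name="embedding/embedding_lookup", op="ResourceGather"),
    SimpleNamespace(name="embedding_1/embedding_lookup", op="ResourceGather"),
    SimpleNamespace(name="rating/BiasAdd", op="BiasAdd"),
]
specs = gather_input_specs(demo_nodes)
print(",".join(specs))
```

With a real model, gather_input_specs(load_graph_nodes("inference_graph.pb")) would be expected to yield the four entries used in the command above, provided the assumption about the op type holds for your graph.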

Alternatively, you can do steps 2 and 3 in one command line:

    mo --input_meta_graph /path/to/model/model.meta \
       --input 1:embedding/embedding_lookup,1:embedding_1/embedding_lookup,1:embedding_2/embedding_lookup,1:embedding_3/embedding_lookup \
       --input_shape "[256],[256],[256],[256]" \
       --output rating/BiasAdd \
       --output_dir <OUTPUT_MODEL_DIR>
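Because the --input and --input_shape values must list the same number of entries and must not contain stray spaces, it can help to assemble the command programmatically. The sketch below is one possible way to do that. The function build_mo_command and the directory name ir_output are hypothetical, and only the flags shown in the commands above are used.

```python
import shlex


def build_mo_command(input_nodes, batch_size, output_dir, *,
                     input_model=None, input_meta_graph=None,
                     output=None, port=1):
    """Assemble an mo argument list from the flags used in this tutorial."""
    # Exactly one model source must be given: a frozen .pb or a .meta graph.
    if (input_model is None) == (input_meta_graph is None):
        raise ValueError("pass exactly one of input_model or input_meta_graph")

    cmd = ["mo"]
    if input_model is not None:
        cmd += ["--input_model", input_model]
    else:
        cmd += ["--input_meta_graph", input_meta_graph]

    # One 'port:name' entry and one '[batch_size]' shape per cut node.
    cmd += ["--input", ",".join(f"{port}:{name}" for name in input_nodes)]
    cmd += ["--input_shape", ",".join(f"[{batch_size}]" for _ in input_nodes)]
    if output is not None:
        cmd += ["--output", output]
    cmd += ["--output_dir", output_dir]
    return cmd


nodes = [
    "embedding/embedding_lookup",
    "embedding_1/embedding_lookup",
    "embedding_2/embedding_lookup",
    "embedding_3/embedding_lookup",
]
# "ir_output" is a placeholder directory name chosen for this example.
cmd = build_mo_command(nodes, 256, "ir_output", input_model="inference_graph.pb")
print(shlex.join(cmd))
```

The returned list can be passed to subprocess.run(cmd, check=True) without a shell, which avoids any quoting issues with the square brackets in the shape argument.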