Accuracy measurement is an important stage of profiling your model in the DL Workbench. Along with performance, accuracy is crucial for assessing neural network quality. DL Workbench measures accuracy in a separate accuracy measurement process and also during INT8 calibration with the AccuracyAware optimization method. All parameters are propagated to the Accuracy Checker tool.

To get an adequate accuracy number, you need to correctly configure accuracy settings. Accuracy parameters depend on the model task type. To configure accuracy settings, click the gear sign next to the model name in the Configuration Settings table or go to the settings from the INT8 calibration tab before the optimization process. Once you have specified your parameters, you are directed back to your previous page, either the Configuration Settings table or the INT8 tab.

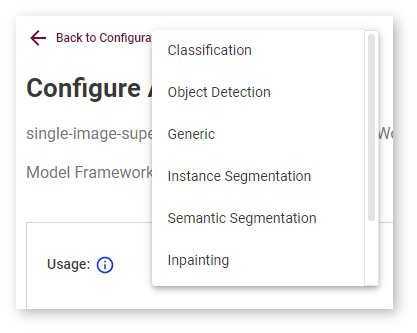

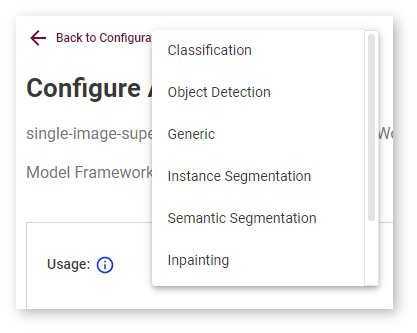

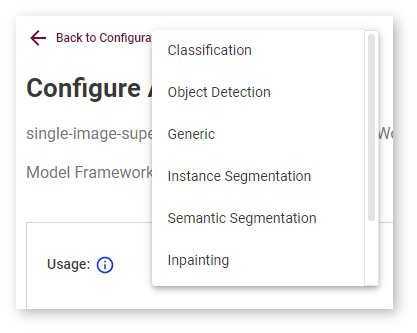

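Accuracy settings depend on the model usage. Choose the usage-specific instructions from the list below:

- Classification
- Object Detection (SSD)
- Object Detection (YOLO V2 and YOLO Tiny V2)
- Instance Segmentation
- Semantic Segmentation
- Image Inpainting
- Super-Resolution
- Style Transfer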

Limitations:

- DL Workbench does not support multi-input models, so make sure to use a single-input model. You can choose and download one of the Intel® Open Model Zoo models directly in the tool.

- YOLO models of versions other than V2 and Tiny V2, like YOLO V3 or YOLO V5, are not supported.

- Accuracy parameters of models from Intel® Open Model Zoo are already configured. You cannot change accuracy configurations for these models.

Possible problems with accuracy:

- The AccuracyAware optimization method, which is used to measure accuracy, cannot be applied to certain model types, for example, language models. If you see an error message when trying to calibrate such a model, return to the Calibration Options page and select the Default method.

- Accuracy close to zero may result from incorrectly configured parameters. Users often make mistakes when setting parameters such as Color space, Normalization scales, or Normalization means in the Conversion Settings before importing the model.

Specify Classification in the drop-down list in the Accuracy Settings:

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV* library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |
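The preprocessing parameters above can be illustrated with a minimal pure-Python sketch. It is not DL Workbench or Accuracy Checker code: the function name `preprocess_pixel` is hypothetical, and the sketch assumes that the mean and standard deviation are given in BGR order and applied after the color conversion.

```python
def preprocess_pixel(pixel, color_space="BGR", mean=(0.0, 0.0, 0.0), std=(1.0, 1.0, 1.0)):
    """Convert one 3-channel pixel to BGR order, then normalize each channel.

    Assumption: mean and std are listed in BGR order.
    """
    if color_space not in ("RGB", "BGR"):
        raise ValueError("color_space must be 'RGB' or 'BGR'")
    # RGB input is reordered to BGR; BGR input passes through unchanged.
    channels = tuple(reversed(pixel)) if color_space == "RGB" else tuple(pixel)
    # Subtract the per-channel mean, then divide by the per-channel standard deviation.
    return tuple((c - m) / s for c, m, s in zip(channels, mean, std))


# An RGB pixel is flipped to BGR before normalization.
normalized = preprocess_pixel((10, 20, 30), "RGB", mean=(10, 10, 10), std=(2, 2, 2))
```

Choosing the wrong color space silently swaps the red and blue channels, which is one way to end up with the near-zero accuracy described earlier.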

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | Accuracy (default) | The rule that is used to compare inference results of a model with reference values. |
| Top K | [1; 100] | The number of top predictions among which the correct class is searched for. |
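To make the Top K parameter concrete, here is a minimal sketch of top-K accuracy in plain Python. The function name `top_k_accuracy` and the input layout (one list of class scores per image) are assumptions made for illustration, not the tool's internal API.

```python
def top_k_accuracy(scores_per_image, true_labels, k=1):
    """Fraction of images whose true label is among the k highest-scoring classes."""
    hits = 0
    for scores, label in zip(scores_per_image, true_labels):
        # Class indices ordered from the highest score to the lowest.
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(true_labels)


scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

A larger K can only keep the metric the same or raise it, because every top-1 hit is also a top-K hit.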

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Separate background class | Yes, No | Select Yes if your model was trained on a dataset with background as an additional class. Usually the index of this class is 0. |

Specify Object Detection in the drop-down list in the Accuracy Settings:

Then specify SSD in the Model Type box that opens below.

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Post-processing parameters define how to process prediction values and/or annotation data after inference and before metric calculation.

| Parameter | Values | Explanation |
|---|---|---|
| Prediction boxes | None, ResizeBoxes, ResizeBoxes-NMS | Resize boxes or apply Non-Maximum Suppression (NMS) to make sure that detected objects are identified only once. |

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | mAP, COCO-Precision | The rule that is used to compare inference results of a model with reference values. |
| Overlap threshold | [0; 1] | mAP-specific. Minimal value for intersection over union to qualify that a detected bounding box coincides with a ground truth bounding box. |
| Integral | Max, 11 Point | mAP-specific. Integral type to calculate average precision. |
| Max Detections | Positive integer | COCO Precision-specific. Maximum number of predicted results per image. If you have more predictions, results with minimum confidence are ignored. |
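The Overlap threshold parameter relies on intersection over union (IoU). The sketch below shows how IoU could be computed for two boxes and compared with the threshold. The helper names `iou` and `is_match`, the `(x_min, y_min, x_max, y_max)` box layout, and the inclusive `>=` comparison are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_match(predicted_box, ground_truth_box, overlap_threshold=0.5):
    """A detection counts as matching the ground truth when IoU reaches the threshold."""
    return iou(predicted_box, ground_truth_box) >= overlap_threshold


overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))  # intersection 1, union 7
```

Raising the threshold makes the metric stricter: the same detections produce fewer matches and therefore a lower score.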

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Separate background class | Yes, No | Select Yes if your model was trained on a dataset with background as an additional class. Usually the index of this class is 0. |
| COCO label-to-class mapping | Model labels coincide with original COCO classes, Model labels conform to selected original COCO classes | For COCO datasets only. The method used to map class IDs to image indices. |

Specify Object Detection in the drop-down list in the Accuracy Settings:

Then specify YOLO V2 or YOLO Tiny V2 in the Model Type box that opens below.

NOTE: YOLO models of other versions, like YOLO V3 or YOLO V5, are not supported.

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Post-processing parameters define how to process prediction values and/or annotation data after inference and before metric calculation.

| Parameter | Values | Explanation |
|---|---|---|
| Prediction boxes | None, ResizeBoxes, ResizeBoxes-NMS | Resize boxes or apply Non-Maximum Suppression (NMS) to make sure that detected objects are identified only once. |
| NMS overlap | [0; 1] | Non-maximum suppression overlap threshold to merge detections. |
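As an illustration of how the NMS overlap threshold acts on detections, here is a sketch of the common greedy form of non-maximum suppression. It is an assumption that DL Workbench uses this exact variant; the function names and the `(box, score)` input layout are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def nms(detections, nms_overlap=0.5):
    """Greedy NMS over (box, score) pairs; returns kept pairs, highest score first."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(box, kept_box) <= nms_overlap for kept_box, _ in kept):
            kept.append((box, score))
    return kept


detections = [((0, 0, 10, 10), 0.9), ((1, 1, 11, 11), 0.8), ((20, 20, 30, 30), 0.7)]
kept = nms(detections, nms_overlap=0.5)  # the second box overlaps the first (IoU about 0.68)
```

A lower NMS overlap value suppresses more aggressively, while a value close to 1 keeps almost every box.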

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | mAP, COCO-Precision | The rule that is used to compare inference results of a model with reference values. |
| Overlap threshold | [0; 1] | mAP-specific. Minimal value for intersection over union to qualify that a detected bounding box coincides with a ground truth bounding box. |
| Integral | Max, 11 Point | mAP-specific. Integral type to calculate average precision. |
| Max Detections | Positive integer | COCO Precision-specific. Maximum number of predicted results per image. If you have more predictions, results with minimum confidence are ignored. |
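The two Integral options can be compared with a small sketch of average precision computed from a precision-recall curve. It assumes that 11 Point is the Pascal VOC 11-point interpolation and that Max is the area under the interpolated precision envelope. The function name and input layout are hypothetical.

```python
def average_precision(recalls, precisions, integral="max"):
    """Average precision from precision-recall points.

    integral="11point": mean of interpolated precision at recall 0.0, 0.1, ..., 1.0.
    integral="max": area under the interpolated (non-increasing) precision envelope.
    """
    def max_precision_at(recall_level):
        # Interpolated precision: best precision at any recall >= recall_level.
        candidates = [p for r, p in zip(recalls, precisions) if r >= recall_level]
        return max(candidates, default=0.0)

    if integral == "11point":
        levels = [i / 10 for i in range(11)]
        return sum(max_precision_at(level) for level in levels) / 11
    if integral == "max":
        area, previous_recall = 0.0, 0.0
        for recall in sorted(set(recalls)):
            area += (recall - previous_recall) * max_precision_at(recall)
            previous_recall = recall
        return area
    raise ValueError("integral must be 'max' or '11point'")


recalls, precisions = [0.5, 1.0], [1.0, 0.5]
ap_max = average_precision(recalls, precisions, "max")
ap_11 = average_precision(recalls, precisions, "11point")
```

The two integrals generally give slightly different numbers for the same curve, so use the same setting when comparing results between runs.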

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Separate background class | Yes, No | Select Yes if your model was trained on a dataset with background as an additional class. Usually the index of this class is 0. |
| COCO label-to-class mapping | Model labels coincide with original COCO classes, Model labels conform to selected original COCO classes | For COCO datasets only. The method used to map class IDs to image indices. |

DL Workbench supports only TensorFlow* and ONNX* instance segmentation models. ONNX instance segmentation models have different output layers for masks, boxes, predictions, and confidence scores, while TensorFlow ones have a layer for masks and a layer for boxes, predictions, and confidence scores.

Example of an ONNX instance segmentation model: instance-segmentation-security-0010

Example of a TensorFlow instance segmentation model: Mask R-CNN

Specify Instance Segmentation in the drop-down list in the Accuracy Settings:

Adapter parameters define conversion of inference results into a metrics-friendly format.

| Parameter | Values | Explanation |
|---|---|---|
| Input info layer | im_info, im_data | Name of the layer with image metadata, such as height, width, and depth. |
| Output layer: Masks | boxes, classes, raw_masks, scores | TensorFlow-specific parameter. Boxes coordinates, predictions, and confidence scores for detected objects. |
| Output layer: Boxes | boxes, classes, raw_masks, scores | ONNX-specific parameter. Boxes coordinates for detected objects. |
| Output layer: Classes | boxes, classes, raw_masks, scores | ONNX-specific parameter. Predictions for detected objects. |
| Output layer: Scores | boxes, classes, raw_masks, scores | ONNX-specific parameter. Confidence score for detected objects. |

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | COCO Original Segmentation (default) | The rule that is used to compare inference results of a model with reference values. |
| Threshold start | 0.5 | Lower threshold of the intersection over union (IoU) value. |
| Threshold step | 0.05 | Increment in the intersection over union (IoU) value. |
| Threshold end | 0.95 | Upper threshold of the intersection over union (IoU) value. |
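The three threshold parameters describe a range of IoU values over which a COCO-style metric is averaged. The sketch below enumerates the thresholds and averages a per-threshold score. Both function names are hypothetical, and `precision_at` stands in for whatever per-threshold precision the metric computes.

```python
def iou_thresholds(start=0.5, step=0.05, end=0.95):
    """List the IoU thresholds from start to end inclusive, in increments of step."""
    count = int(round((end - start) / step)) + 1
    # Rounding removes floating-point noise such as 0.6500000000000001.
    return [round(start + i * step, 10) for i in range(count)]


def mean_over_thresholds(precision_at, thresholds):
    """Average a per-threshold score over all IoU thresholds."""
    return sum(precision_at(t) for t in thresholds) / len(thresholds)


thresholds = iou_thresholds()
# Toy stand-in: a model whose masks match the ground truth only up to IoU 0.75.
score = mean_over_thresholds(lambda t: 1.0 if t <= 0.75 else 0.0, thresholds)
```

With the default values, the range contains ten thresholds, from 0.5 to 0.95.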

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Separate background class | Yes, No | Select Yes if your model was trained on a dataset with background as an additional class. Usually the index of this class is 0. |

Specify Semantic Segmentation in the drop-down list in the Accuracy Settings:

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Post-processing parameters define how to process prediction values and/or annotation data after inference and before metric calculation.

| Parameter | Values | Explanation |
|---|---|---|
| Segmentation mask encoding | Annotation (default) | Transfer mask colors to class labels using the color mapping from metadata in the annotation of a dataset. |
| Segmentation mask resizing | Prediction (default) | Resize the model output mask to initial image dimensions. |

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | MEAN IOU (default) | The rule that is used to compare inference results of a model with reference values. |
| Argmax | On (default) | Argmax is applied because the model does not perform it internally. Argmaxing is required for accuracy measurements. |
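The relationship between Argmax and the mean IoU metric can be sketched as follows: per-class score maps are first reduced to a label mask, and the mask is then compared with the annotation class by class. The function names and the nested-list layout `class_scores[class][y][x]` are assumptions for illustration. Skipping classes absent from both masks is also an assumption.

```python
def argmax_mask(class_scores):
    """Turn class_scores[class][y][x] into a label mask holding the best class per pixel."""
    num_classes = len(class_scores)
    height, width = len(class_scores[0]), len(class_scores[0][0])
    return [[max(range(num_classes), key=lambda c: class_scores[c][y][x])
             for x in range(width)] for y in range(height)]


def mean_iou(predicted_mask, true_mask, num_classes):
    """Mean of per-class IoU; classes absent from both masks are skipped."""
    ious = []
    for cls in range(num_classes):
        intersection = union = 0
        for predicted_row, true_row in zip(predicted_mask, true_mask):
            for p, t in zip(predicted_row, true_row):
                intersection += int(p == cls and t == cls)
                union += int(p == cls or t == cls)
        if union:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


class_scores = [
    [[0.9, 0.2], [0.6, 0.4]],  # scores for class 0
    [[0.1, 0.8], [0.4, 0.6]],  # scores for class 1
]
predicted = argmax_mask(class_scores)
result = mean_iou(predicted, [[0, 1], [1, 1]], num_classes=2)
```

Without the argmax step, the raw score maps cannot be compared with an annotation that stores one class label per pixel.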

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Separate background class | Yes, No | Select Yes if your model was trained on a dataset with background as an additional class. Usually the index of this class is 0. |
| COCO label-to-class mapping | Model labels coincide with original COCO classes, Model labels conform to selected original COCO classes | For COCO datasets only. The method used to map class IDs to image indices. |

Specify Image Inpainting in the drop-down list in the Accuracy Settings:

Preprocessing configuration parameters define how to process images prior to inference with a model.

Two types of masks can be applied to your images to measure accuracy: rectangle and free-form. Depending on the masking type, you have to choose different sets of preprocessing parameters.

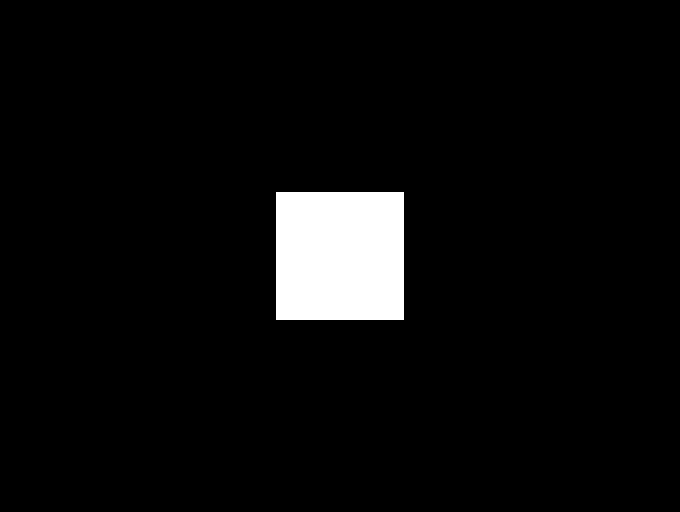

Rectangle masking

Rectangle masking means that a rectangle of the specified width and height is applied to the middle of the image. Example of the rectangle masking:

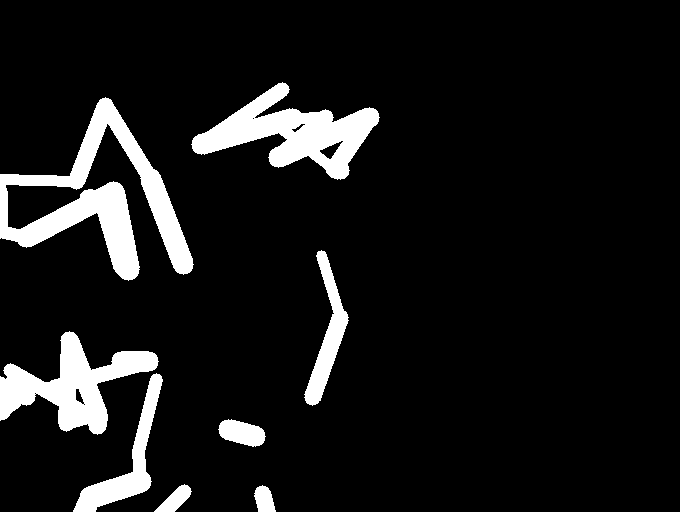
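Rectangle masking can be sketched in a few lines of Python. The function name `centered_rectangle_mask` is hypothetical, and the exact centering and rounding used by the tool are assumptions. The sketch only shows how a mask width and height translate into a region in the middle of the image.

```python
def centered_rectangle_mask(image_height, image_width, mask_height, mask_width):
    """Return a 2D list where 1 marks masked pixels and 0 marks pixels left intact."""
    top = (image_height - mask_height) // 2
    left = (image_width - mask_width) // 2
    return [
        [1 if top <= y < top + mask_height and left <= x < left + mask_width else 0
         for x in range(image_width)]
        for y in range(image_height)
    ]


mask = centered_rectangle_mask(image_height=4, image_width=6, mask_height=2, mask_width=2)
```

For the 4x6 image above, the mask covers rows 1 to 2 and columns 2 to 3, which is the region the model is expected to restore.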

Free-form masking

The free-form masking means separate lines of specified lengths, widths, and vertex numbers. Example of the free-form masking:

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Mask type | Rectangle, Free-form | The shape of the mask cut from an original image. |
| Mask width | Positive integer | For rectangle masking. The rectangle width in pixels. |
| Mask height | Positive integer | For rectangle masking. The rectangle height in pixels. |
| Number of parts | Positive integer | For free-form masking. The number of autogenerated forms which will be cut from an original image. |
| Maximum brush width | Positive integer | For free-form masking. The width of a form line in pixels. |
| Maximum length | Positive integer | For free-form masking. The maximum length of a form edge in pixels. |
| Maximum vertex count | Positive integer greater than 2 | For free-form masking. The maximum number of the vertices of a form. |
| Inverse mask | Yes, No | If your model uses inverse masking, reset it to regular masking by checking Yes. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | SSIM, PSNR | The rule that is used to compare inference results of a model with reference values. |
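Of the two metrics, PSNR (peak signal-to-noise ratio) is simple enough to sketch in plain Python. The function name and the flat-list input are assumptions. SSIM is omitted because it requires windowed statistics that do not fit a short example.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equally sized flat pixel sequences."""
    if len(reference) != len(restored):
        raise ValueError("inputs must have the same number of pixels")
    # Mean squared error between the reference and the restored image.
    mse = sum((r - p) ** 2 for r, p in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


value = psnr([10, 20], [11, 19])  # MSE is 1, so PSNR is 10 * log10(255 ** 2)
```

Higher PSNR means the restored image is closer to the reference, and identical images give an infinite value.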

Specify Super-Resolution in the drop-down list in the Accuracy Settings:

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Annotation conversion parameters define conversion of a dataset annotation.

| Parameter | Values | Explanation |
|---|---|---|
| Two streams | Yes (default), No | Specifies whether the selected model has the second input for the upscaled image. |

Specify Style Transfer in the drop-down list in the Accuracy Settings:

Preprocessing configuration parameters define how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize type | Auto (default) | Automatically scale images to the model input shape using the OpenCV library. |
| Color space | RGB, BGR | Describes the color space of images in a dataset. DL Workbench assumes that the IR expects input in the BGR format. If the images are in the RGB format, choose RGB, and the images will be converted to BGR before being fed to a network. If the images are in BGR, choose BGR and no additional color formatting will be applied to the images. |
| Normalization: mean | [0; 255] | Optional. The values to be subtracted from the corresponding image channels. Available for input with three channels only. |
| Normalization: standard deviation | [0; 255] | Optional. The values to divide image channels by. Available for input with three channels only. |

Metric parameters specify rules to test inference results against reference values.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | SSIM, PSNR | The rule that is used to compare inference results of a model with reference values. |