DL Workbench provides a graphical interface to find the optimal configuration of batches and parallel requests on a certain machine. To learn more about optimal configurations on specific hardware, refer to Deploy and Integrate Performance Criteria into Application.

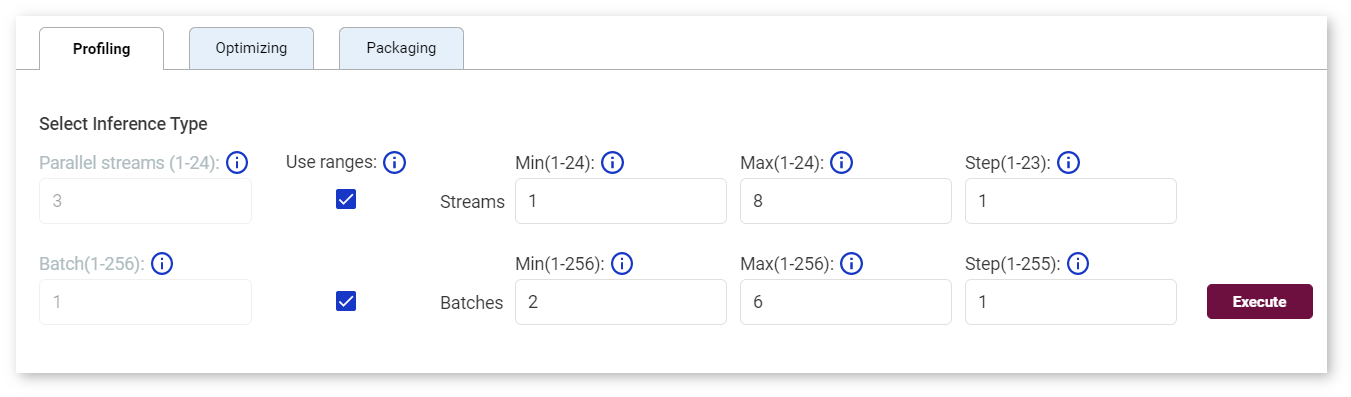

To run a range of inference streams, go to the Profiling Tab of the Selected Configuration section on the Configurations Page. Place check marks in the boxes under the Use Ranges section. Specify minimum and maximum numbers of inferences per an image and a batch, as well as the number of steps to increment numbers of parallel requests or batches. Click Execute:

A step is the increment of parallel inference streams used for testing. For example, if the stream number is set from 1 to 5 with the step 2, the inferences run for 1, 3 and 5 parallel streams. DL Workbench executes every combination of Batch/Inference values from minimum to maximum with the specified step.

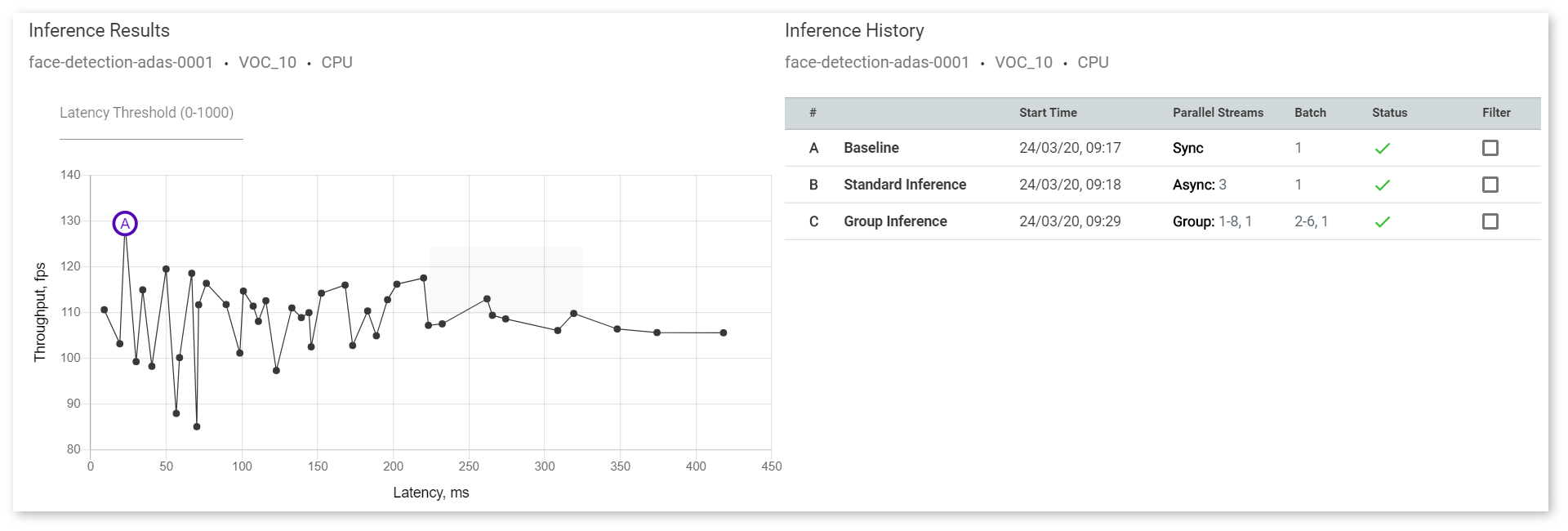

The graph in the Inference Results section shows points that represent each inference with a certain batch/parallel request configuration:

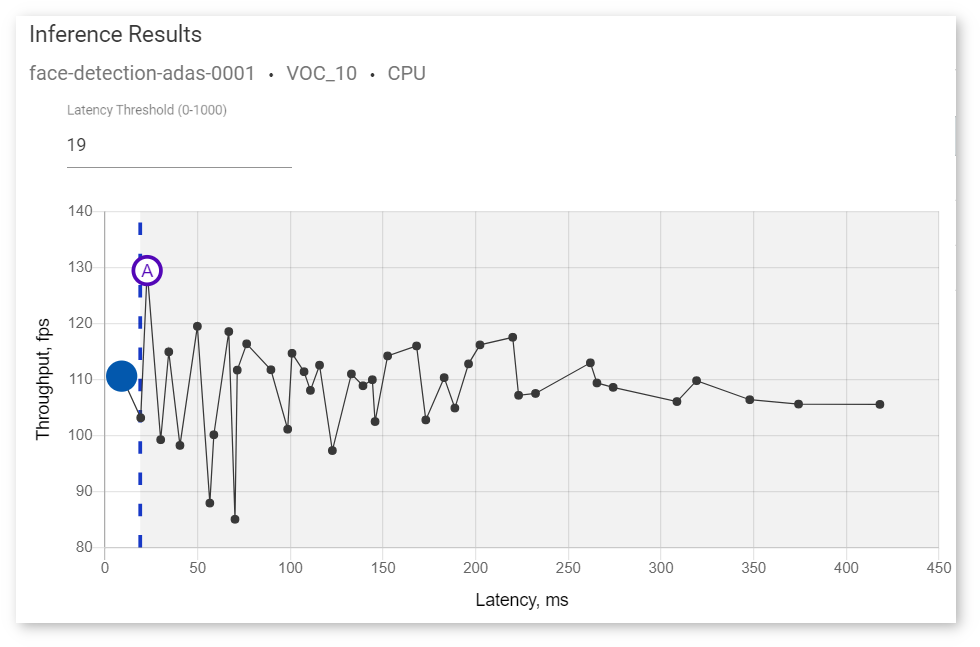

Right above the graph, you can specify maximum latency to find the optimal configuration with the best throughput. The point corresponding to this configuration turns blue:

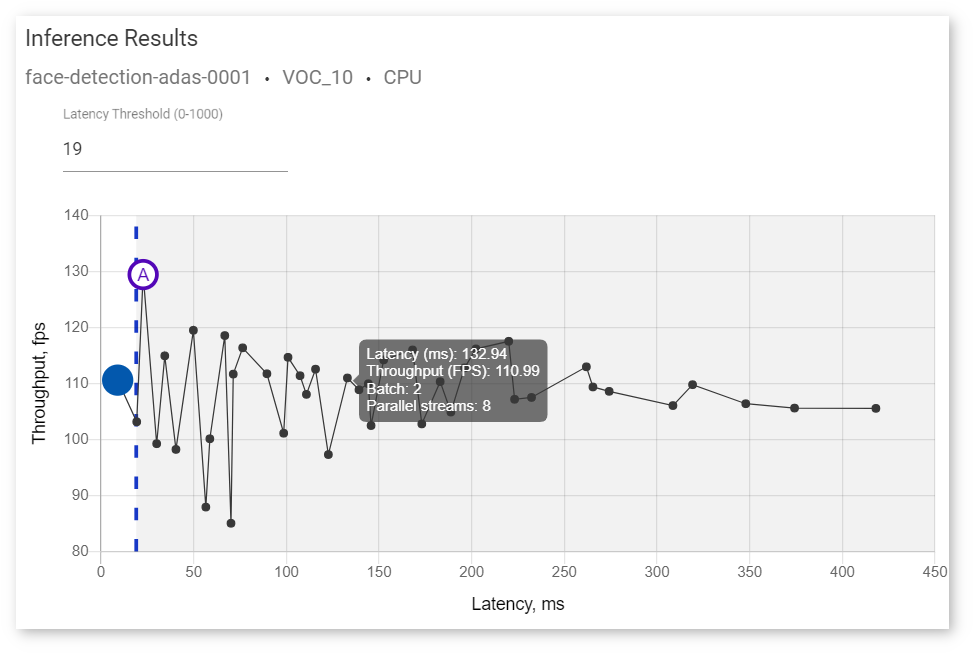

To view information about latency, throughput, batch, and parallel requests of a specific job, hover your cursor over the corresponding point on the graph.

NOTE: For details about inference processes, see the Inference Engine documentation.