You can modify the default parameters and specify additional ones to obtain more precise calibration and accuracy results. Classification and object-detection models require you to specify several parameters.

To configure accuracy settings, click the gear icon next to the model name in the Projects table, or open the settings from the INT8 Calibration tab before starting the optimization. After you specify your parameters, you are returned to the previous window: either the Projects table or the INT8 tab.

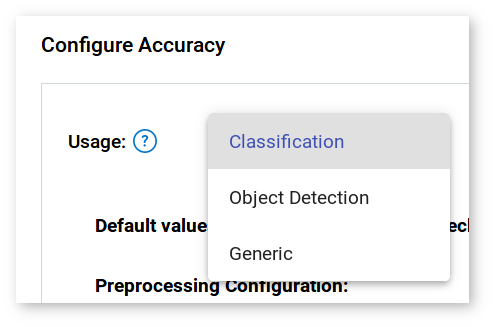

Accuracy settings depend on the model usage. The default usage of a model is Generic. In the Accuracy Settings, select Classification, Object-Detection, or Generic usage from the drop-down list. If you choose Object-Detection, specify the model type: SSD or YOLO. For YOLO, also select the version: V2 or Tiny V2.

Refer to the table below to see available parameters for each usage type:

| Usage | Configuration Parameters |
|---|---|
| Classification | Preprocessing configuration: Separate background class, Normalization. Metric configuration: Metric, Top K |
| Object-Detection SSD | Preprocessing configuration: Resize type, Color space, Separate background class, Normalization. Post-processing configuration: Prediction boxes. Metric configuration: Metric (Auto), Overlap threshold, Integral |
| Object-Detection YOLO V2 and YOLO Tiny V2 | Preprocessing configuration: Resize type, Color space, Separate background class, Normalization. Post-processing configuration: Prediction boxes, NMS overlap. Metric configuration: Metric (Auto), Overlap threshold, Integral |
| Generic | No parameters required |
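For classification models, the metric configuration includes a Top K parameter. As a rough illustration only (not DL Workbench code; the function name `top_k_accuracy` is hypothetical), top-k accuracy counts a sample as correct when its true label is among the k classes with the highest scores:

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 1) -> float:
    """Return the fraction of samples whose true label is among the k highest scores."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    if not labels:
        return 0.0
    hits = 0
    for sample_scores, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score; keep the first k.
        ranked = sorted(range(len(sample_scores)), key=lambda i: sample_scores[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)
```

With k = 1 this is ordinary accuracy; a larger k is more lenient, so top-k accuracy never decreases as k grows.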

Do not change optional settings unless you understand their impact. For more details on setting parameters for calibration and accuracy checking, refer to the command-line documentation.

Preprocessing Configuration

Defines how to process images prior to inference with a model.

| Parameter | Values | Explanation |
|---|---|---|
| Resize Type | Auto | Resize images to the model input dimensions |
| Color Space | RGB, BGR | Convert the image color space from RGB to BGR or vice versa |
| Separate Background Class | Yes, No | Insert a background label as an additional label for all images |
| Normalization: Mean | [0; 256] | The values to subtract from the corresponding image channels |
| Normalization: Standard Deviation | [0; 256] | The values by which the corresponding image channels are divided |
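The sketch below illustrates in plain Python what these preprocessing steps do to a single image. It is not DL Workbench code and rests on several assumptions: the function names are hypothetical, an image is a nested list of RGB pixels, nearest-neighbor interpolation stands in for whatever method the Auto resize type actually uses, and the mean and standard deviation are given in the channel order produced after color conversion.

```python
from typing import List, Sequence, Tuple

Pixel = List[float]
Image = List[List[Pixel]]  # rows x columns x channels


def resize_nearest(image: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbor resize to the model input size (assumed stand-in for 'Auto')."""
    in_h, in_w = len(image), len(image[0])
    return [
        [list(image[y * in_h // out_h][x * in_w // out_w]) for x in range(out_w)]
        for y in range(out_h)
    ]


def swap_rgb_bgr(image: Image) -> Image:
    """Reverse the channel order: RGB -> BGR, and applied again, BGR -> RGB."""
    return [[pixel[::-1] for pixel in row] for row in image]


def normalize(image: Image, mean: Sequence[float], std: Sequence[float]) -> Image:
    """Subtract the per-channel mean, then divide by the per-channel standard deviation."""
    return [
        [[(value - m) / s for value, m, s in zip(pixel, mean, std)] for pixel in row]
        for row in image
    ]


def preprocess(image: Image, size: Tuple[int, int], mean: Sequence[float],
               std: Sequence[float], to_bgr: bool = True) -> Image:
    """Hypothetical pipeline: resize, optionally convert RGB to BGR, then normalize."""
    out = resize_nearest(image, *size)
    if to_bgr:
        out = swap_rgb_bgr(out)
    return normalize(out, mean, std)
```

For example, with a mean and standard deviation of 127.5 on every channel, pixel values in [0; 255] are mapped to [-1; 1].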

Post-Processing Configuration

Defines how to process prediction and/or annotation data after inference with a model and before metric calculation.

| Parameter | Values | Explanation |
|---|---|---|
| Prediction Boxes | None, ResizeBoxes, ResizeBoxes NMS | Resize prediction boxes to the image size and, with ResizeBoxes NMS, also apply Non-Maximum Suppression (NMS) to make sure that each detected object is identified only once |
| NMS Overlap | [0; 1] | Overlap threshold above which NMS suppresses the lower-scoring of two detections |
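A minimal sketch of these two post-processing steps follows. It assumes the model emits box coordinates normalized to [0; 1] and that all boxes belong to a single class (in practice NMS is usually applied per class); the function names are hypothetical. Greedy NMS keeps the highest-scoring box and drops every box whose intersection over union (IoU) with an already kept box exceeds the NMS overlap threshold.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def resize_boxes(boxes: List[Box], image_w: int, image_h: int) -> List[Box]:
    """Scale boxes from normalized [0, 1] coordinates (an assumption) to pixels."""
    return [(x0 * image_w, y0 * image_h, x1 * image_w, y1 * image_h)
            for x0, y0, x1, y1 in boxes]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], overlap: float) -> List[int]:
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any kept box by more than the threshold.
        if all(iou(boxes[i], boxes[j]) <= overlap for j in keep):
            keep.append(i)
    return keep
```

A lower NMS overlap value suppresses more aggressively; a value close to 1 keeps nearly all overlapping detections.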

Metric Configuration

Specifies a metric for post-inference measurements.

| Parameter | Values | Explanation |
|---|---|---|
| Metric | mAP, COCO Precision | The metric used to evaluate the accuracy of a model |
| Overlap Threshold | [0; 1] | For ImageNet and Pascal VOC datasets only. Minimum intersection over union (IoU) between a predicted bounding box and a ground-truth box for the prediction to count as a true positive |
| Integral | Max, 11 Point | For ImageNet and Pascal VOC datasets only. Integration method used to calculate average precision |
| Max detections | Positive integers | For COCO datasets only. Maximum number of predictions per image. If a model produces more predictions, those with the lowest confidence are ignored. |
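To show how Overlap Threshold and Integral affect the result, here is a Pascal VOC-style average precision (AP) sketch for a single class; mAP averages this value over classes. It is an illustration under stated assumptions, not DL Workbench's implementation: the function names are hypothetical, and the `integral` strings `"max"` and `"11point"` are hypothetical stand-ins for the Max and 11 Point options. A detection is a true positive if its best-matching ground-truth box has IoU of at least the overlap threshold and was not already matched by a higher-scoring detection.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections: List[Tuple[str, float, Box]],
                      ground_truth: Dict[str, List[Box]],
                      overlap_threshold: float = 0.5,
                      integral: str = "max") -> float:
    """detections: (image_id, score, box); ground_truth: image_id -> boxes."""
    ranked = sorted(detections, key=lambda d: d[1], reverse=True)
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    total_gt = sum(len(boxes) for boxes in ground_truth.values())
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for image_id, _, box in ranked:
        gts = ground_truth.get(image_id, [])
        overlaps = [iou(box, gt) for gt in gts]
        best = max(range(len(gts)), key=lambda j: overlaps[j], default=-1)
        if best >= 0 and overlaps[best] >= overlap_threshold and not matched[image_id][best]:
            matched[image_id][best] = True  # each ground-truth box is matched at most once
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / total_gt if total_gt else 0.0)

    if integral == "11point":
        # Average the best precision reached at recall >= 0.0, 0.1, ..., 1.0.
        points = [t / 10 for t in range(11)]
        return sum(max((p for p, r in zip(precisions, recalls) if r >= t), default=0.0)
                   for t in points) / 11
    # "max": use the running maximum of precision to the right, weighted by each recall step.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap
```

The two integration methods usually give close but not identical values, so results are only comparable when the same Integral option is used.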