This interface extends the regular plugin interface for the heterogeneous case. Not all plugins implement it. Its main purpose is to register device loaders and to obtain default affinity settings for certain devices.

#include <ie_ihetero_plugin.hpp>

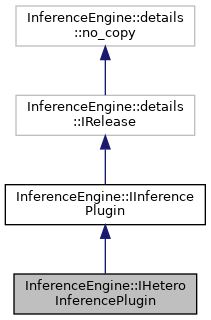

Inheritance diagram for InferenceEngine::IHeteroInferencePlugin:

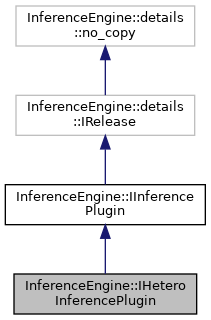

Collaboration diagram for InferenceEngine::IHeteroInferencePlugin:

Public Member Functions

| Return type | Member function | Description |
|---|---|---|
| virtual void | `SetDeviceLoader(const std::string &device, IHeteroDeviceLoader::Ptr loader) noexcept = 0` | Registers a device loader for the device. |
| virtual StatusCode | `SetAffinity(ICNNNetwork &network, const std::map<std::string, std::string> &config, ResponseDesc *resp) noexcept = 0` | Sets layer affinities according to the options set for the plugin implementing IHeteroInferencePlugin. Works only if all affinities in the network are empty. |
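Both members are declared noexcept, so SetAffinity reports failures through its StatusCode return value and an optional ResponseDesc message instead of throwing. The sketch below illustrates that error-reporting pattern with hypothetical stand-in types (StatusCode, ResponseDesc, setAffinitySketch). It does not include the real Inference Engine headers, and its validation rule (rejecting an empty config) is invented purely for illustration.

```cpp
#include <cassert>
#include <cstring>
#include <map>
#include <string>

// Hypothetical stand-ins shaped like InferenceEngine::StatusCode and
// InferenceEngine::ResponseDesc; these are NOT the real library types.
enum StatusCode : int { OK = 0, GENERAL_ERROR = -1, NOT_IMPLEMENTED = -2 };

struct ResponseDesc {
    char msg[256] = {};
};

// Copies an error message into resp (when provided) and returns the code.
inline StatusCode reportError(StatusCode code, const char* text, ResponseDesc* resp) noexcept {
    if (resp != nullptr) {
        std::strncpy(resp->msg, text, sizeof(resp->msg) - 1);
        resp->msg[sizeof(resp->msg) - 1] = '\0';
    }
    return code;
}

// Hypothetical SetAffinity-like entry point. The try/catch shows how a
// noexcept function keeps exceptions from escaping: any exception thrown
// by the body is converted into a status code and a message.
inline StatusCode setAffinitySketch(const std::map<std::string, std::string>& config,
                                    ResponseDesc* resp) noexcept {
    try {
        if (config.empty()) {  // invented rule for this sketch only
            return reportError(GENERAL_ERROR, "empty configuration", resp);
        }
        return OK;
    } catch (...) {
        return reportError(GENERAL_ERROR, "unexpected exception", resp);
    }
}
```

Callers check the returned code first and read resp->msg only when the code is not OK.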

Public Member Functions inherited from InferenceEngine::IInferencePlugin

| Return type | Member function | Description |
|---|---|---|
| virtual void | `GetVersion(const Version *&versionInfo) noexcept = 0` | Returns plugin version information. |
| virtual void | `SetLogCallback(IErrorListener &listener) noexcept = 0` | Sets the logging callback. Logging is used to track what is going on inside the plugin. |
| virtual StatusCode | `LoadNetwork(ICNNNetwork &network, ResponseDesc *resp) noexcept = 0` | Loads a pre-built network with weights into the engine. On success, the plugin is ready to infer. |
| virtual StatusCode | `LoadNetwork(IExecutableNetwork::Ptr &ret, ICNNNetwork &network, const std::map<std::string, std::string> &config, ResponseDesc *resp) noexcept = 0` | Creates an executable network from a network object. Users can create as many networks as they need and use them simultaneously, up to the limits of the hardware resources. |
| virtual StatusCode | `ImportNetwork(IExecutableNetwork::Ptr &ret, const std::string &modelFileName, const std::map<std::string, std::string> &config, ResponseDesc *resp) noexcept = 0` | Creates an executable network from a previously exported network. |
| virtual StatusCode | `Infer(const Blob &input, Blob &result, ResponseDesc *resp) noexcept = 0` | Infers one or more images. Input and output dimensions depend on the topology. For example, a classification topology takes a 4D Blob as input (batch, channels, width, height) and produces a 1D blob as output (a vector of scoring probabilities). To infer a batch, use a 4D Blob as input and get a 2D blob as output. In both cases, the method allocates the resulting blob. |
| virtual StatusCode | `Infer(const BlobMap &input, BlobMap &result, ResponseDesc *resp) noexcept = 0` | Infers tensors. Input and output dimensions depend on the topology. For example, a classification topology takes a 4D Blob as input (batch, channels, width, height) and produces a 1D blob as output (a vector of scoring probabilities). To infer a batch, use a 4D Blob as input and get a 2D blob as output. In both cases, the method allocates the resulting blob. |
| virtual StatusCode | `GetPerformanceCounts(std::map<std::string, InferenceEngineProfileInfo> &perfMap, ResponseDesc *resp) const noexcept = 0` | Queries per-layer performance measures to find out which layer is the most time-consuming. Note: not all plugins provide meaningful data. |
| virtual StatusCode | `AddExtension(InferenceEngine::IExtensionPtr extension, InferenceEngine::ResponseDesc *resp) noexcept = 0` | Registers an extension within the plugin. |
| virtual StatusCode | `SetConfig(const std::map<std::string, std::string> &config, ResponseDesc *resp) noexcept = 0` | Sets the plugin configuration. Acceptable keys are listed in ie_plugin_config.hpp. |
| virtual void | `QueryNetwork(const ICNNNetwork &network, QueryNetworkResult &res) const noexcept` | Queries the plugin to check whether it supports the specified network. |
| virtual void | `QueryNetwork(const ICNNNetwork &network, const std::map<std::string, std::string> &config, QueryNetworkResult &res) const noexcept` | Queries the plugin to check whether it supports the specified network with the specified configuration. |
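GetPerformanceCounts fills a map from layer name to profiling information, which is typically scanned for the most time-consuming layer. A minimal sketch of that scan follows. ProfileInfoSketch and slowestLayer are hypothetical names, and the single realTime_uSec field is an assumed stand-in for the richer InferenceEngineProfileInfo structure.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for InferenceEngineProfileInfo: only the field this
// sketch needs, the layer's execution time in microseconds.
struct ProfileInfoSketch {
    long long realTime_uSec = 0;
};

// Returns the name of the layer with the largest realTime_uSec, or an empty
// string if the map is empty. On ties, the first layer in key order wins.
inline std::string slowestLayer(const std::map<std::string, ProfileInfoSketch>& perfMap) {
    std::string best;
    long long bestTime = -1;
    for (const auto& entry : perfMap) {
        if (entry.second.realTime_uSec > bestTime) {
            bestTime = entry.second.realTime_uSec;
            best = entry.first;
        }
    }
    return best;
}
```

Because not all plugins report meaningful data, an all-zero map still yields a layer name here; real code may want to treat that case separately.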

Detailed Description


- Deprecated: Use InferenceEngine::Core with the HETERO device in LoadNetwork, QueryNetwork, and similar calls.
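With InferenceEngine::Core, heterogeneous execution is selected through a device name of the form HETERO:<device1>,<device2>, where the devices are listed in fallback priority order (for example, HETERO:FPGA,CPU). The helper below is a hypothetical sketch that only parses such a name into its priority list; heteroFallbackDevices is an invented name and the function does not call any Inference Engine API.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Splits a device name such as "HETERO:FPGA,CPU" into its fallback priority
// list {"FPGA", "CPU"}. Returns an empty vector if the name lacks the
// "HETERO:" prefix. Empty entries (as in "HETERO:FPGA,,CPU") are skipped.
inline std::vector<std::string> heteroFallbackDevices(const std::string& deviceName) {
    const std::string prefix = "HETERO:";
    std::vector<std::string> devices;
    if (deviceName.compare(0, prefix.size(), prefix) != 0) {
        return devices;
    }
    std::istringstream rest(deviceName.substr(prefix.size()));
    std::string item;
    while (std::getline(rest, item, ',')) {
        if (!item.empty()) {
            devices.push_back(item);
        }
    }
    return devices;
}
```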

Member Function Documentation

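SetAffinity()

`virtual StatusCode SetAffinity(ICNNNetwork &network, const std::map<std::string, std::string> &config, ResponseDesc *resp) noexcept = 0` (pure virtual, noexcept)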

The main goal of this function is to set layer affinities according to the options set for the plugin implementing IHeteroInferencePlugin. This function works only if all affinities in the network are empty.

- Deprecated: Use InferenceEngine::Core::QueryNetwork with the HETERO device and QueryNetworkResult::supportedLayersMap to set affinities on a network.

- Parameters:
  - network: Network object acquired from CNNNetReader.
  - config: Map of configuration settings.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns: Status code of the operation; OK if it succeeded.
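The affinity assignment described for SetAffinity, including the requirement that all affinities start empty, can be sketched as a first-match walk over a device priority list. LayerSketch and assignAffinities are hypothetical names; the per-device sets of supported layer names stand in for whatever support information a real plugin (or QueryNetworkResult::supportedLayersMap) would provide, and the first-match rule is an assumption, not the documented algorithm.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical stand-in for a network layer: only a name and an affinity.
struct LayerSketch {
    std::string name;
    std::string affinity;  // empty means "not assigned yet"
};

// Assigns each layer to the first device in `priority` that lists the layer
// as supported. If any layer already has an affinity, nothing is changed and
// false is returned. Layers no device supports keep an empty affinity.
inline bool assignAffinities(std::vector<LayerSketch>& layers,
                             const std::vector<std::string>& priority,
                             const std::map<std::string, std::set<std::string>>& supported) {
    for (const auto& layer : layers) {
        if (!layer.affinity.empty()) {
            return false;  // precondition: all affinities must be empty
        }
    }
    for (auto& layer : layers) {
        for (const auto& device : priority) {
            auto it = supported.find(device);
            if (it != supported.end() && it->second.count(layer.name) != 0) {
                layer.affinity = device;
                break;  // first supporting device in priority order wins
            }
        }
    }
    return true;
}
```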

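SetDeviceLoader()

`virtual void SetDeviceLoader(const std::string &device, IHeteroDeviceLoader::Ptr loader) noexcept = 0` (pure virtual, noexcept)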

Registers a device loader for the device.

- Deprecated: Use InferenceEngine::Core to work with the HETERO device.
- Parameters:
  - device: The device name used in CNNLayer::affinity.
  - loader: Helper object that analyzes whether layers are supported and loads the network into the plugin defined by the IHeteroDeviceLoader implementation.
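SetDeviceLoader associates a loader with a device name. A minimal registry sketch follows, assuming one loader per device name and replacement on re-registration. DeviceLoaderSketch, CpuLoaderSketch and LoaderRegistrySketch are hypothetical stand-ins, not the real IHeteroDeviceLoader interface.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for IHeteroDeviceLoader: reports whether it can run
// a layer of the given type.
struct DeviceLoaderSketch {
    using Ptr = std::shared_ptr<DeviceLoaderSketch>;
    virtual ~DeviceLoaderSketch() = default;
    virtual bool supportsLayerType(const std::string& type) const = 0;
};

// Example loader that claims support for every layer type.
struct CpuLoaderSketch : DeviceLoaderSketch {
    bool supportsLayerType(const std::string&) const override { return true; }
};

// Keeps one loader per device name; registering a name again replaces the
// previous loader. setDeviceLoader is noexcept, like SetDeviceLoader.
class LoaderRegistrySketch {
public:
    void setDeviceLoader(const std::string& device, DeviceLoaderSketch::Ptr loader) noexcept {
        try {
            loaders_[device] = std::move(loader);
        } catch (...) {
            // Allocation failure: the map is left unchanged instead of throwing.
        }
    }

    DeviceLoaderSketch::Ptr find(const std::string& device) const {
        auto it = loaders_.find(device);
        return it == loaders_.end() ? nullptr : it->second;
    }

private:
    std::map<std::string, DeviceLoaderSketch::Ptr> loaders_;
};
```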
