Inference Results

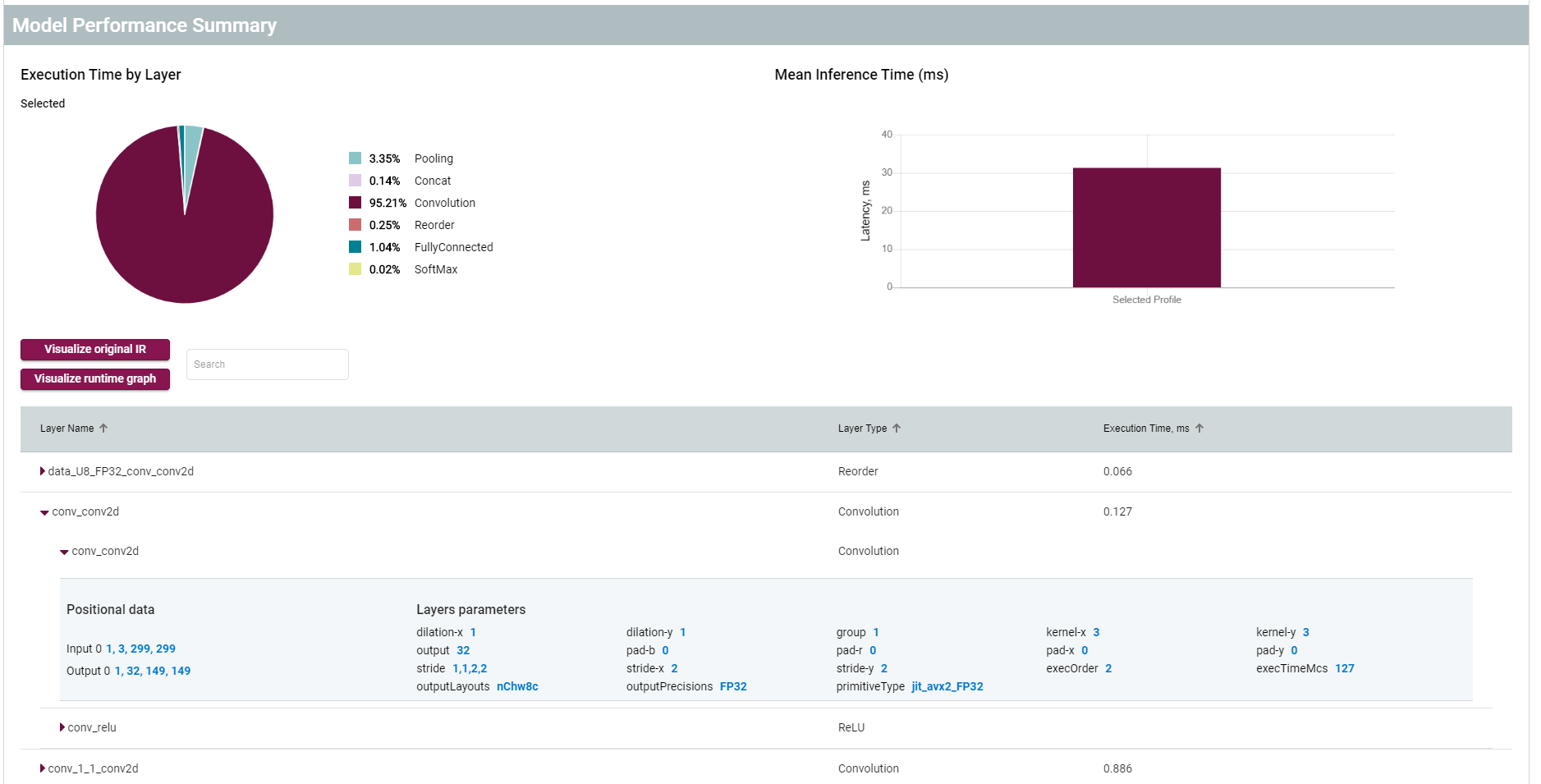

Once an initial inference has been run with a model, a sample dataset, and a target, you can view performance results on the project Dashboard.

- The Execution Time by Layer pie chart shows the execution time of each layer group. Hover over the chart to see a tooltip with the execution time in milliseconds.

- The Mean Profile Time bar chart shows the mean inference time on the selected dataset. For example, if you specify 10 runs per image on a 100-image dataset and the total execution time is 10000 ms, the mean inference time is 10000 ms / (100 images × 10 runs) = 10 ms. Hover over the chart to see detailed information, including throughput, latency, total execution time, number of parallel requests, and batch.

- The Layer Name data table shows each layer of a model. If a layer was fused, a corresponding row can be further expanded to show the layer parameters. Go to Visualize Model for details.

- The Visualize original IR and Visualize runtime graph are visualization tools. Go to Visualize Model for details.

Together, these components give a visual representation of model performance on the selected dataset and help you find potential bottlenecks and areas for improvement.
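
The mean inference time calculation described for the Mean Profile Time chart can be sketched as follows. This is only an illustration of the arithmetic, not the tool's actual implementation; the function name `mean_inference_time_ms` and its parameters are hypothetical.

```python
def mean_inference_time_ms(total_time_ms: float,
                           num_images: int,
                           runs_per_image: int) -> float:
    """Return the mean time of one inference, in milliseconds.

    Hypothetical helper: divides the total execution time by the
    total number of inferences (images multiplied by runs per image).
    """
    if num_images <= 0 or runs_per_image <= 0:
        raise ValueError("num_images and runs_per_image must be positive")
    total_inferences = num_images * runs_per_image
    return total_time_ms / total_inferences


# The example from the text: 10 runs on each image of a 100-image
# dataset, with a total execution time of 10000 ms.
print(mean_inference_time_ms(10000, 100, 10))  # 10.0
```

Dividing by the product of images and runs is equivalent to dividing by each in turn, which is how the example in the text is written.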

Model Analyzer

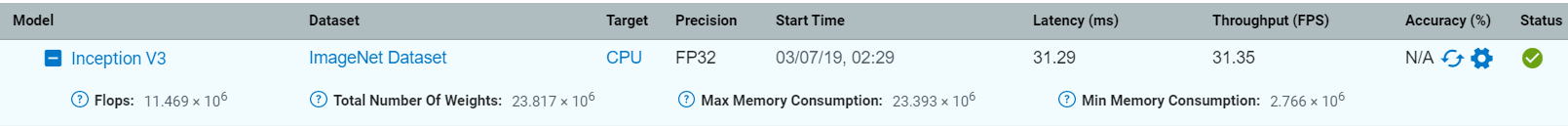

The Model Analyzer generates estimated performance information for neural networks. The tool analyzes the following characteristics:

| Characteristic | Explanation |
|---|---|
| Computational Complexity | Measured in GFLOPs. The number of floating-point operations required to infer a model. |
| Number of Parameters | Measured in millions. The total number of weights in a model. |
| Minimum Memory Consumption, Maximum Memory Consumption | Measured in millions of units. The size of a unit depends on the precision of the model weights. For example, for an FP32 model, multiply these values by 4 bytes. |

Model analysis data is collected when the model is imported. All parameters depend on the batch size. Currently, the information is collected only for the model's default batch size.
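
As a rough sketch of the unit conversion described in the table, the memory consumption values can be turned into bytes by multiplying by the size of one unit. The source only states the 4-byte multiplier for FP32; the FP16 and INT8 entries below are an assumption that the same rule applies with the usual sizes of those data types. The function name and the sample value of 25.5 million units are hypothetical.

```python
# Size of one unit in bytes for each weight precision.
# Only FP32 (4 bytes) is stated in the text; FP16 and INT8 are
# assumptions based on the standard sizes of those data types.
BYTES_PER_UNIT = {"FP32": 4, "FP16": 2, "INT8": 1}


def memory_consumption_bytes(millions_of_units: float, precision: str) -> float:
    """Convert a memory consumption value reported in millions of
    units into bytes for the given weight precision (hypothetical helper)."""
    if precision not in BYTES_PER_UNIT:
        raise ValueError(f"unsupported precision: {precision}")
    return millions_of_units * 1_000_000 * BYTES_PER_UNIT[precision]


# Hypothetical reading: 25.5 million units for an FP32 model.
size_bytes = memory_consumption_bytes(25.5, "FP32")
print(size_bytes / 1_000_000, "MB")  # 102.0 MB
```

Because all parameters depend on the batch size, a value converted this way describes the model's default batch only.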

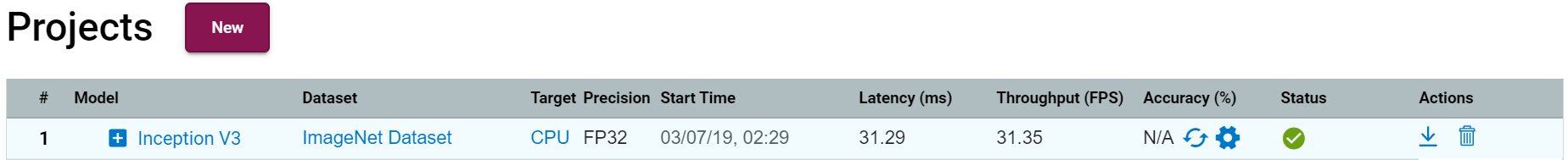

To view model analysis data, click the plus button next to a model name on the Projects page.

A table with the characteristics of the model appears: