After you choose a model and a dataset, you can run an initial inference on the available Intel architecture, such as a CPU, GPU, or VPU. Use the resulting inference statistics to assess model performance and optimize as necessary.

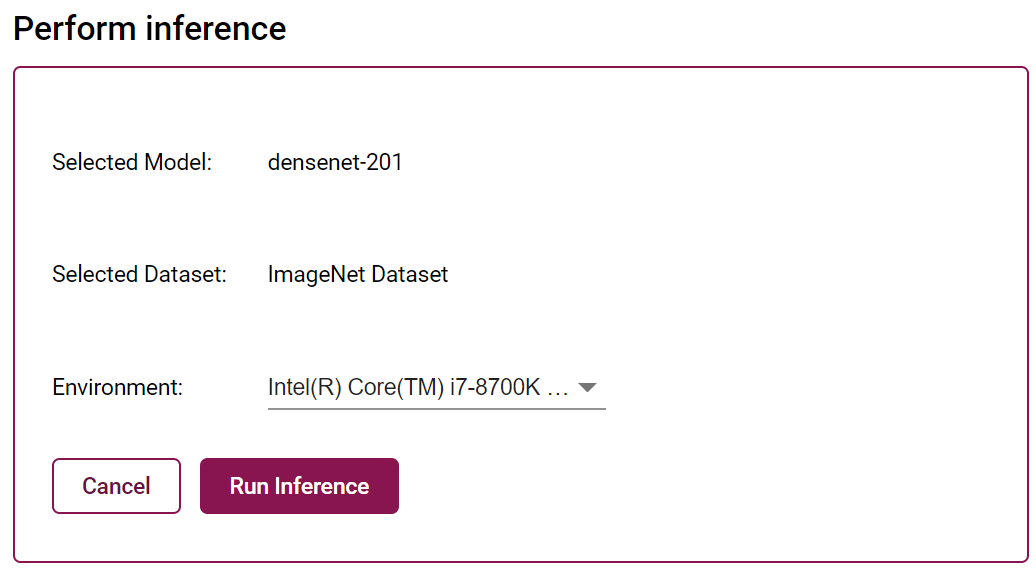

The Run Inference page shows you the Perform Inference form with inference parameters:

| Parameter | Description |
|---|---|
| Selected Model | Shows the model you selected for the configuration |
| Selected Dataset | Shows the dataset you selected for the configuration |
| Environment | Shows detected hardware capable of running inference |

After specifying all inference parameters, click the Run Inference button to start the inference. The Projects page opens automatically. Find your inference job in the list of running and completed jobs. The Status column in the table shows a progress bar and the current status. To cancel the inference, click the Cancel icon next to the job name.

When the inference completes, the job shows the Complete status. You can then select the inference, visualize its statistics, and experiment with model optimization and inference options to profile the configuration.
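To make the reported statistics more concrete, the following is a minimal sketch of how latency and throughput figures can be derived from timed inference calls. It is not the tool's own implementation: `profile_inference` and `fake_infer` are hypothetical names introduced here for illustration, and `fake_infer` merely stands in for a real model call.

```python
import statistics
import time


def profile_inference(infer, inputs, warmup=1):
    """Time `infer` on each input and summarize latency and throughput."""
    # Warm-up runs are excluded so one-time setup cost does not skew the numbers.
    for sample in inputs[:warmup]:
        infer(sample)

    latencies_ms = []
    start = time.perf_counter()
    for sample in inputs:
        t0 = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    total_s = time.perf_counter() - start

    return {
        "runs": len(latencies_ms),
        "mean_latency_ms": statistics.mean(latencies_ms),
        "median_latency_ms": statistics.median(latencies_ms),
        # Throughput: completed inferences per second of wall-clock time.
        "throughput_fps": len(latencies_ms) / total_s if total_s > 0 else float("inf"),
    }


def fake_infer(sample):
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    return sum(sample)


stats = profile_inference(fake_infer, [[1, 2, 3]] * 50)
print(stats)
```

Comparing these numbers before and after an optimization step, on the same inputs and the same device, is one way to judge whether the change actually helped.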