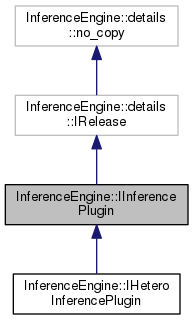

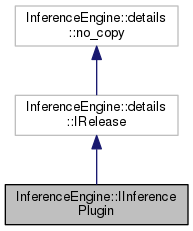

This class is the main plugin interface.

#include <ie_plugin.hpp>

Public Member Functions

| Return type | Member function | Description |
|---|---|---|
| virtual void | GetVersion (const Version *&versionInfo) noexcept=0 | Returns plugin version information. |
| virtual void | SetLogCallback (IErrorListener &listener) noexcept=0 | Sets a logging callback. Logging is used to track what is going on inside the plugin. |
| virtual StatusCode | LoadNetwork (ICNNNetwork &network, ResponseDesc *resp) noexcept=0 | Loads a pre-built network with weights to the engine. On success, the plugin is ready to infer. |
| virtual StatusCode | LoadNetwork (IExecutableNetwork::Ptr &ret, ICNNNetwork &network, const std::map< std::string, std::string > &config, ResponseDesc *resp) noexcept=0 | Creates an executable network from a network object. Users can create as many networks as they need and use them simultaneously, up to the limits of the hardware resources. |
| virtual StatusCode | ImportNetwork (IExecutableNetwork::Ptr &ret, const std::string &modelFileName, const std::map< std::string, std::string > &config, ResponseDesc *resp) noexcept=0 | Creates an executable network from a previously exported network. |
| virtual StatusCode | Infer (const Blob &input, Blob &result, ResponseDesc *resp) noexcept=0 | Infers one or more images. Input and output dimensions depend on the topology. |
| virtual StatusCode | Infer (const BlobMap &input, BlobMap &result, ResponseDesc *resp) noexcept=0 | Infers tensors. Input and output dimensions depend on the topology. |
| virtual StatusCode | GetPerformanceCounts (std::map< std::string, InferenceEngineProfileInfo > &perfMap, ResponseDesc *resp) const noexcept=0 | Queries per-layer performance measures to find out which layer is the most time-consuming. Note: not all plugins provide meaningful data. |
| virtual StatusCode | AddExtension (InferenceEngine::IExtensionPtr extension, InferenceEngine::ResponseDesc *resp) noexcept=0 | Registers an extension within the plugin. |
| virtual StatusCode | SetConfig (const std::map< std::string, std::string > &config, ResponseDesc *resp) noexcept=0 | Sets the plugin configuration. Acceptable keys are defined in ie_plugin_config.hpp. |
| virtual void | QueryNetwork (const ICNNNetwork &, QueryNetworkResult &res) const noexcept | Queries whether the plugin supports the specified network. |
| virtual void | QueryNetwork (const ICNNNetwork &, const std::map< std::string, std::string > &, QueryNetworkResult &res) const noexcept | Queries whether the plugin supports the specified network with the specified configuration. |

Detailed Description

This class is the main plugin interface.

Member Function Documentation

§ AddExtension()

pure virtual, noexcept

Registers extension within the plugin.

- Parameters
  - extension: Pointer to an already loaded extension.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.
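The StatusCode-returning methods of this interface are noexcept: they report failure through the return value and the optional resp argument instead of throwing. The sketch below shows one way a caller might turn that convention into C++ exceptions. StatusCode, ResponseDesc, fakeAddExtension(), and throwIfFailed() are simplified, hypothetical stand-ins so the example compiles on its own; they are not the real Inference Engine definitions.

```cpp
#include <cstring>
#include <stdexcept>
#include <string>

// Simplified stand-ins for the Inference Engine types named in this reference.
// Their members are assumptions for illustration; the real definitions live in
// the Inference Engine headers.
enum StatusCode { OK = 0, GENERAL_ERROR = -1 };
struct ResponseDesc { char msg[256] = {}; };

// Hypothetical fake of a plugin call such as AddExtension(): on failure it
// writes a description into resp and returns a non-OK status instead of throwing.
StatusCode fakeAddExtension(const void* extension, ResponseDesc* resp) noexcept {
    if (extension != nullptr) return OK;
    if (resp != nullptr) std::strncpy(resp->msg, "extension is null", sizeof(resp->msg) - 1);
    return GENERAL_ERROR;
}

// Hypothetical helper: converts the (StatusCode, ResponseDesc) pair into a C++
// exception so callers do not have to check every return value by hand.
void throwIfFailed(StatusCode status, const ResponseDesc& resp, const std::string& call) {
    if (status == OK) return;
    std::string what = call + " failed with status " + std::to_string(static_cast<int>(status));
    if (resp.msg[0] != '\0') what += ": " + std::string(resp.msg);
    throw std::runtime_error(what);
}
```

Passing a fresh ResponseDesc to each call keeps a message from an earlier failure from being reported again.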

§ GetPerformanceCounts()

pure virtual, noexcept

Queries per-layer performance measures to find out which layer is the most time-consuming. Note: not all plugins provide meaningful data.

- Deprecated:
  - Use IInferRequest to get performance measures.
- Parameters
  - perfMap: Map of layer names to the profiling information for each layer.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.
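Once perfMap has been filled, finding the most time-consuming layer is a single pass over the map. In this sketch, ProfileInfo is a simplified stand-in for InferenceEngineProfileInfo, and the realTime_uSec field name is an assumption about that structure.

```cpp
#include <map>
#include <string>

// Simplified stand-in for InferenceEngineProfileInfo: only the per-layer
// execution time in microseconds is modeled (field name assumed).
struct ProfileInfo {
    long long realTime_uSec = 0;
};

// Returns the name of the layer with the largest measured time, or an empty
// string if the map is empty. On ties, the first layer in map order wins.
std::string mostTimeConsumingLayer(const std::map<std::string, ProfileInfo>& perfMap) {
    std::string slowest;
    long long longest = -1;
    for (const auto& entry : perfMap) {
        if (entry.second.realTime_uSec > longest) {
            longest = entry.second.realTime_uSec;
            slowest = entry.first;
        }
    }
    return slowest;
}
```

Because not all plugins provide meaningful data, a map in which every layer reports zero is better treated as "no data" than as a real answer.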

§ GetVersion()

pure virtual, noexcept

Returns plugin version information.

- Parameters
  - versionInfo: Reference to a pointer to the version information; the plugin sets it.
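The const Version *& parameter is a pointer passed by reference: the caller supplies a pointer variable, and the plugin makes it point at the version data. This sketch assumes, as the const qualifier suggests, that the plugin keeps ownership of that data, so the caller only reads it and never frees it. Version and FakePlugin are simplified, hypothetical stand-ins; the real Version structure is defined in the Inference Engine headers.

```cpp
#include <string>

// Simplified stand-in for the plugin's Version structure (fields assumed).
struct Version {
    const char* buildNumber;
    const char* description;
};

// Hypothetical plugin whose GetVersion follows the signature in this reference:
// it points the caller's pointer at storage owned by the plugin itself.
class FakePlugin {
public:
    void GetVersion(const Version*& versionInfo) noexcept { versionInfo = &version_; }

private:
    Version version_{"custom_build_0", "FakePlugin"};
};

// Caller side: start from nullptr, let the plugin set the pointer, then read it.
std::string describe(FakePlugin& plugin) {
    const Version* info = nullptr;
    plugin.GetVersion(info);
    if (info == nullptr) return "unknown";
    return std::string(info->description) + " " + info->buildNumber;
}
```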

§ ImportNetwork()

pure virtual, noexcept

Creates an executable network from a previously exported network.

- Parameters
  - ret: Reference to a shared pointer that receives the returned network interface.
  - modelFileName: Path to the exported file.
  - config: Map of pairs (config parameter name, config parameter value) that applies only to this load operation.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.

§ Infer() [1/2]

pure virtual, noexcept

Infers one or more images. Input and output dimensions depend on the topology. For example, a classification topology takes a 4D Blob as input (batch, channels, width, height) and returns a 1D blob as output (a vector of scoring probabilities). To infer a batch, pass a 4D Blob as input and get a 2D blob as output. In both cases, the method allocates the result blob.

- Deprecated:
  - Use the Infer() overload that works with multiple inputs and outputs.
- Parameters
  - input: Any TBlob<> object that contains the data to infer. The type of the TBlob must match the network input precision and size.
  - result: Related TBlob<> object that receives the result of the inference, typically a float blob. The blob does not need to be allocated or initialized; the engine allocates the relevant data.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.
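For the batched case, the 2D output holds one row of class scores per image. A minimal sketch of reading it, assuming the scores have already been copied out of the result blob into a flat, row-major buffer of shape (batch, classes); top1PerImage() is a hypothetical helper, not part of the Inference Engine API.

```cpp
#include <cstddef>
#include <vector>

// Reads a batched classification result laid out as a flat, row-major buffer of
// shape (batch, classes) and returns the index of the highest score per image.
// Using std::vector<float> instead of a result Blob is an assumption made so
// the sketch is self-contained.
std::vector<std::size_t> top1PerImage(const std::vector<float>& scores, std::size_t batch) {
    std::vector<std::size_t> top1;
    // Reject buffers that cannot be split into `batch` equal, non-empty rows.
    if (batch == 0 || scores.empty() || scores.size() % batch != 0) return top1;
    const std::size_t classes = scores.size() / batch;
    for (std::size_t n = 0; n < batch; ++n) {
        const float* row = scores.data() + n * classes;
        std::size_t best = 0;
        for (std::size_t c = 1; c < classes; ++c) {
            if (row[c] > row[best]) best = c;
        }
        top1.push_back(best);
    }
    return top1;
}
```

With a batch of one, the same function handles the single-image case, where the output is one row of scores.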

§ Infer() [2/2]

pure virtual, noexcept

Infers tensors. Input and output dimensions depend on the topology. For example, a classification topology takes a 4D Blob as input (batch, channels, width, height) and returns a 1D blob as output (a vector of scoring probabilities). To infer a batch, pass a 4D Blob as input and get a 2D blob as output. In both cases, the method allocates the result blob.

- Deprecated:
  - Load an IExecutableNetwork and create an IInferRequest from it instead.
- Parameters
  - input: Map of input blobs, keyed by input name.
  - result: Map of output blobs, keyed by output name.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.

§ LoadNetwork() [1/2]

pure virtual, noexcept

Loads a pre-built network with weights to the engine. On success, the plugin is ready to infer.

- Deprecated:
  - Use the four-parameter LoadNetwork() overload (executable network, CNN network, config, response).
- Parameters
  - network: Network object acquired from CNNNetReader.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.

§ LoadNetwork() [2/2]

pure virtual, noexcept

Creates an executable network from a network object. Users can create as many networks as they need and use them simultaneously, up to the limits of the hardware resources.

- Parameters
  - ret: Reference to a shared pointer that receives the returned network interface.
  - network: Network object acquired from CNNNetReader.
  - config: Map of pairs (config parameter name, config parameter value) that applies only to this load operation.
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
- Returns
  - Status code of the operation: OK if the operation succeeded.

§ QueryNetwork() [1/2]

inline, virtual, noexcept

Queries whether the plugin supports the specified network.

- Deprecated:
  - Use the QueryNetwork() overload that takes a config parameter.

- Parameters
  - network: Network object to query.
  - res: Reference to the query result that the plugin fills in, including a description of an error, if any occurred.

§ QueryNetwork() [2/2]

inline, virtual, noexcept

Queries whether the plugin supports the specified network with the specified configuration.

- Parameters
  - network: Network object to query.
  - config: Map of pairs (config parameter name, config parameter value).
  - res: Reference to the query result that the plugin fills in, including a description of an error, if any occurred.
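A typical use of the query result is to list the layers the plugin cannot run, for example to decide whether a custom layer needs an extension registered with AddExtension(). In this sketch, QueryResult is a simplified stand-in for QueryNetworkResult; the supportedLayers member name and the caller-supplied list of layer names are assumptions.

```cpp
#include <set>
#include <string>
#include <vector>

// Simplified stand-in for QueryNetworkResult; the member name supportedLayers
// is an assumption about the real structure.
struct QueryResult {
    std::set<std::string> supportedLayers;
};

// Lists the network's layers that the plugin did not report as supported,
// preserving the order in which networkLayers lists them.
std::vector<std::string> unsupportedLayers(const std::vector<std::string>& networkLayers,
                                           const QueryResult& res) {
    std::vector<std::string> missing;
    for (const auto& name : networkLayers) {
        if (res.supportedLayers.count(name) == 0) missing.push_back(name);
    }
    return missing;
}
```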

§ SetConfig()

pure virtual, noexcept

Sets the plugin configuration. Acceptable keys are defined in ie_plugin_config.hpp.

- Parameters
  - config: Map of pairs (config parameter name, config parameter value).
  - resp: Pointer to the response message that holds a description of an error, if any occurred.
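Configuration is passed as plain string pairs. A minimal sketch of building such a map with a hypothetical helper, makeProfilingConfig(); the key and value strings ("PERF_COUNT", "YES", "CPU_THREADS_NUM") are assumptions here, so check ie_plugin_config.hpp for the keys your plugin actually accepts and prefer its constants over string literals.

```cpp
#include <map>
#include <string>

// Builds a configuration map for SetConfig(). The key and value strings are
// assumed names; real code should use the constants from ie_plugin_config.hpp.
std::map<std::string, std::string> makeProfilingConfig(int threads) {
    std::map<std::string, std::string> config;
    config["PERF_COUNT"] = "YES";  // ask the plugin to collect per-layer counters
    if (threads > 0) {
        // Assumed CPU-specific key; omitted when no explicit thread count is given.
        config["CPU_THREADS_NUM"] = std::to_string(threads);
    }
    return config;
}
```

The same map type can be passed to SetConfig() for the whole plugin, or to LoadNetwork() or ImportNetwork() when it should apply only to that load operation.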

§ SetLogCallback()

pure virtual, noexcept

Sets a logging callback. Logging is used to track what is going on inside the plugin.

- Parameters
  - listener: Logging sink.
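The listener is passed by reference, which suggests the plugin keeps a reference to it; this sketch therefore assumes the listener must outlive the plugin. IErrorListener below is a stand-in that assumes a single onError(const char*) callback, and CollectingListener and FakePlugin are hypothetical.

```cpp
#include <string>
#include <vector>

// Stand-in for IErrorListener; assumes a single noexcept onError(const char*) callback.
class IErrorListener {
public:
    virtual ~IErrorListener() = default;
    virtual void onError(const char* msg) noexcept = 0;
};

// Logging sink that keeps every message so it can be inspected later.
class CollectingListener : public IErrorListener {
public:
    void onError(const char* msg) noexcept override {
        // The callback is noexcept, so allocation failures are swallowed here.
        try {
            messages.emplace_back(msg ? msg : "");
        } catch (...) {
        }
    }
    std::vector<std::string> messages;
};

// Hypothetical plugin that stores a pointer to the listener it was given and
// forwards log messages to it; messages logged before registration are dropped.
class FakePlugin {
public:
    void SetLogCallback(IErrorListener& listener) noexcept { listener_ = &listener; }
    void log(const char* msg) noexcept {
        if (listener_ != nullptr) listener_->onError(msg);
    }

private:
    IErrorListener* listener_ = nullptr;
};
```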

The documentation for this class was generated from the following file:

- tmp_docs/inference-engine/include/ie_plugin.hpp